Scalable WCF Applications Using Distributed Caching

Author: Iqbal Khan

After the explosion of Web applications built to accommodate high-traffic usage, the next big wave has been service-oriented architecture (SOA). SOA has changed the application development and integration landscape, and it is destined to become a standard way of developing extremely scalable applications. Cloud computing platforms like Windows Azure, together with frameworks like Windows Communication Foundation (WCF), represent a giant leap in moving SOA toward this goal. SOA is primarily intended to achieve scalability: to sustain whatever load is thrown at it, and in doing so to improve agility and productivity.

As far as application architecture is concerned, a true SOA application should scale easily. However, many performance bottlenecks must still be addressed to achieve true scalability:

- Application data is by far the heaviest data usage in a WCF service. Its storage and access are a major scalability bottleneck because of the latency inherent in relational data access.

- Environments with highly distributed and disparate data sources pose the biggest challenge to achieving the performance goals of SOA. Although the application tier can scale nicely, one of the main concerns that remains in SOA is the performance of the participating data services.

- The data tier can't scale linearly in transaction-handling capacity, which can add considerable delay to the overall response time.

- Additionally, an SOA service often depends on other services that may not be available locally, so a WAN call to another service can become yet another bottleneck.

- Further, if SOA is used to implement a data virtualization layer, the performance of the data services is of key importance. In that case, the application's performance depends directly on the time it takes to fetch the underlying data. The data services might access both relational and non-relational data, often spread across several geographically distributed data centers. This adds response latency and hampers overall application performance.

Distributed Cache (NCache) for Service Scalability

To reduce the response latency of the entire solution, a comprehensive approach is to use a high-performance caching system in tandem with the data services or within the data virtualization layer. SOA services deal with two types of data: service-state data, and service-result data that resides in the database. Both cause scalability bottlenecks.

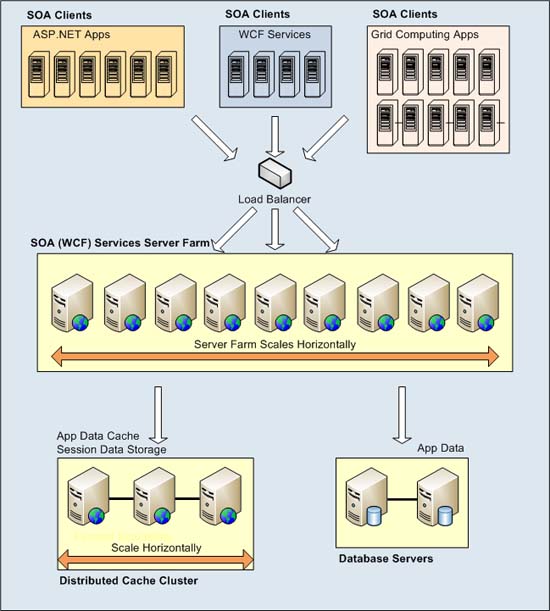

Caching can play a very important role in speeding up access to both service state and application data, while also allowing the services to scale out. It does this by minimizing the traffic and latency between the services that use the cache and the underlying data providers. Figure 1 depicts the use of NCache distributed caching to achieve this.

Caching Application Data

A distributed cache like NCache typically holds only a subset of the data in the database: the data the WCF service needs within a short window of a few hours. A distributed cache can give an SOA application a significant scalability boost because:

- A distributed cache can scale out: its architecture lets you add more cache servers as the load grows.

- It keeps data distributed across multiple servers, yet still gives your SOA application one logical view, so it appears to be just one cache. Because the cache actually lives on multiple servers, it can really scale.

- If you place a distributed cache like NCache between the service layer and the database, you'll dramatically improve the performance and scalability of the service layer, because the cache saves a large number of time-consuming database trips.
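One way to picture "one logical view over many servers" is a client that hashes each key to pick the server that owns it. The following is a minimal, hypothetical sketch that uses in-memory dictionaries as stand-in "servers". The class name `PartitionedCacheSketch` and the FNV-1a hash are illustrative choices, not NCache's actual distribution algorithm or API.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical sketch of a partitioned cache as seen from the client.
// Each key is hashed to exactly one "server" (a plain dictionary here), but
// callers only see one Insert/Get API, i.e. one logical cache.
// This is NOT NCache's actual distribution algorithm.
public class PartitionedCacheSketch
{
    private readonly List<Dictionary<string, object>> _servers;

    public PartitionedCacheSketch(int serverCount)
    {
        if (serverCount < 1) throw new ArgumentOutOfRangeException(nameof(serverCount));
        _servers = new List<Dictionary<string, object>>();
        for (int i = 0; i < serverCount; i++)
            _servers.Add(new Dictionary<string, object>());
    }

    // Stable FNV-1a hash over the key's UTF-8 bytes, so every client maps a key
    // to the same server. string.GetHashCode() is randomized per process in
    // .NET Core, so it would not be safe to use here.
    public int ServerFor(string key)
    {
        uint hash = 2166136261;
        foreach (byte b in Encoding.UTF8.GetBytes(key))
        {
            hash ^= b;
            hash = unchecked(hash * 16777619);
        }
        return (int)(hash % (uint)_servers.Count);
    }

    // Store the item on the server that owns its key.
    public void Insert(string key, object value) => _servers[ServerFor(key)][key] = value;

    // Look the item up on the owning server; null means a cache miss.
    public object Get(string key) =>
        _servers[ServerFor(key)].TryGetValue(key, out var value) ? value : null;

    // Number of items held by one server (for inspection only).
    public int CountOn(int server) => _servers[server].Count;
}
```

Because the key-to-server mapping is computed rather than stored, any client can find an item without asking a central coordinator. Real products also have to handle servers joining or leaving, which this sketch ignores.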

The basic logic is this: before going to the database, check whether the cache already has the data. If it does, take it from the cache. Otherwise, fetch the data from the database and put it in the cache for next time. Figure 2 shows an example.

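```csharp
using System;
using System.ServiceModel;
using Alachisoft.NCache.Web.Caching;

namespace MyWcfServiceLibrary {

    // Employee and IEmployeeService are defined elsewhere in the service library.
    [ServiceBehavior]
    public class EmployeeService : IEmployeeService {

        static readonly string _sCacheName = "myServiceCache";

        // One cache handle shared by all calls to this service.
        static readonly Cache _sCache = NCache.InitializeCache(_sCacheName);

        public Employee Load(string employeeId) {
            // Create a key to look up in the cache.
            // The key will look like "Employee:EmployeeId:1000".
            string key = "Employee:EmployeeId:" + employeeId;

            Employee employee = (Employee)_sCache[key];

            if (employee == null) { // Item not found in the cache.
                // Therefore, load it from the database.
                employee = LoadEmployeeFromDb(employeeId);

                // Now, add it to the cache for future reference
                // (only if the employee actually exists).
                if (employee != null) {
                    _sCache.Insert(key, employee, null,
                        Cache.NoAbsoluteExpiration,
                        Cache.NoSlidingExpiration,
                        CacheItemPriority.Default);
                }
            }

            // Return the employee, whether it came from the cache or the database.
            return employee;
        }

        // Placeholder for the data-access code that reads one employee
        // from the database (not shown in this example).
        private Employee LoadEmployeeFromDb(string employeeId) {
            throw new NotImplementedException();
        }
    }
}
```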
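The check-the-cache-first logic in Figure 2 can be exercised without an NCache server. The following minimal sketch replaces the cache with an in-memory `ConcurrentDictionary` and counts database trips, so you can see that a repeated lookup never reaches the database. The `Employee` shape, the `CacheAsideEmployeeService` class, and the injected loader are hypothetical stand-ins for illustration only.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical stand-in for the service's Employee type.
public class Employee
{
    public string Id { get; set; }
    public string Name { get; set; }
}

// Cache-aside sketch: an in-memory dictionary plays the role of the distributed
// cache, and an injected delegate plays the role of the database query.
public class CacheAsideEmployeeService
{
    private readonly ConcurrentDictionary<string, Employee> _cache =
        new ConcurrentDictionary<string, Employee>();
    private readonly Func<string, Employee> _loadFromDb;

    // Counts how often the "database" was hit (simple counter, not thread-safe).
    public int DatabaseTrips { get; private set; }

    public CacheAsideEmployeeService(Func<string, Employee> loadFromDb)
    {
        _loadFromDb = loadFromDb;
    }

    public Employee Load(string employeeId)
    {
        string key = "Employee:EmployeeId:" + employeeId;

        // 1. Check the cache first.
        if (_cache.TryGetValue(key, out Employee cached))
            return cached;

        // 2. Cache miss: go to the database.
        DatabaseTrips++;
        Employee employee = _loadFromDb(employeeId);

        // 3. Put the result in the cache for next time (nulls are not cached).
        if (employee != null)
            _cache[key] = employee;
        return employee;
    }
}
```

With this sketch, loading employee "1000" twice results in one database trip, and loading "1001" afterward adds a second.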

Important Caching Features

A caching design for a service must consider issues such as:

- how frequently the underlying data changes;
- how frequently the cached data needs to be updated;
- whether the data is user-specific or application-wide;
- what mechanism indicates that the cache needs updating;
- how tolerant the application is of dirty (stale) data.

A caching solution must therefore have the capabilities to meet all such requirements.

Some of the key distributed caching features for managing data scalably through data services are described briefly below.

Expiring Cached Data

Expirations let you specify how long data should stay in the cache before the cache automatically removes it. There are two types of expirations you can specify in NCache: absolute-time expiration and sliding- or idle-time expiration.

If the data in your cache also exists in the database, other users or applications that may not have access to your cache can change it in the database. When that happens, the data in your cache becomes stale, which you do not want. If you can estimate how long it's safe to keep this data in the cache, you can specify absolute-time expiration. Sliding expiration, on the other hand, comes in handy for session-based applications that store sessions in a distributed cache. An item stays cached as long as it keeps being accessed, and it is removed once it has been idle for the specified interval.
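The difference between the two expiration types can be shown with a small in-memory sketch. The class `ExpiringCacheSketch` and its methods are hypothetical and do not mirror NCache's API. The clock is injected so that expiry can be demonstrated without waiting in real time. For simplicity, the sketch removes expired items lazily, on the next read.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch of absolute and sliding expiration (not NCache's implementation).
public class ExpiringCacheSketch
{
    private class Entry
    {
        public object Value;
        public DateTime? AbsoluteExpiry; // fixed point in time after which the item is gone
        public TimeSpan? Sliding;        // idle window, renewed on every successful read
        public DateTime LastAccess;
    }

    private readonly Dictionary<string, Entry> _items = new Dictionary<string, Entry>();
    private readonly Func<DateTime> _now; // injected clock, e.g. () => DateTime.UtcNow

    public ExpiringCacheSketch(Func<DateTime> now) { _now = now; }

    // Absolute expiration: you guess how long the data is safe to keep.
    public void InsertAbsolute(string key, object value, DateTime expiresAt) =>
        _items[key] = new Entry { Value = value, AbsoluteExpiry = expiresAt, LastAccess = _now() };

    // Sliding expiration: the item lives as long as it keeps being used (e.g. sessions).
    public void InsertSliding(string key, object value, TimeSpan idleTimeout) =>
        _items[key] = new Entry { Value = value, Sliding = idleTimeout, LastAccess = _now() };

    public object Get(string key)
    {
        if (!_items.TryGetValue(key, out Entry e)) return null;

        DateTime now = _now();
        bool expired =
            (e.AbsoluteExpiry.HasValue && now >= e.AbsoluteExpiry.Value) ||
            (e.Sliding.HasValue && now - e.LastAccess >= e.Sliding.Value);

        if (expired)
        {
            _items.Remove(key); // expired: drop the item so the caller reloads it
            return null;
        }

        e.LastAccess = now; // a successful read renews the sliding window
        return e.Value;
    }
}
```

In this sketch, a session inserted with a 20-minute sliding window and read every 15 minutes survives well past 20 minutes. It disappears only after 20 minutes without a read.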

Synchronizing the Cache with a Database

The need for database synchronization arises because the database is really being shared across multiple applications, and not all of those applications have access to your cache. If your WCF service application is the only one updating the database and it can also easily update the cache, you probably don't need the database-synchronization capability.

But in a real-life environment, that's not always the case. Third-party applications update data in the database, and your cache becomes inconsistent with it. Synchronizing your cache with the database makes the cache aware of these database changes so that it can update itself accordingly.

Synchronizing with the database usually means invalidating the related cached item from the cache so the next time your application needs it, it will have to fetch it from the database because the cache doesn't have it.
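The invalidation approach can be sketched with a simulated change notification. In the following sketch, `FakeDatabase` raises an event whenever any application updates a row, and `SynchronizedCache` removes the matching key. Both classes, and the `"Table:Id"` key convention, are hypothetical. In production, the notification would come from the database itself (for example, SQL Server query notifications) rather than from application code.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical stand-in for a shared database that reports row changes made by
// any application, including ones that never touch the cache.
public class FakeDatabase
{
    public event Action<string, string> RowChanged; // (table, primary key)

    public void Update(string table, string id) => RowChanged?.Invoke(table, id);
}

// Cache that stays consistent by invalidating entries when the database reports a change.
public class SynchronizedCache
{
    private readonly ConcurrentDictionary<string, object> _items =
        new ConcurrentDictionary<string, object>();

    public SynchronizedCache(FakeDatabase db)
    {
        // Invalidate rather than refresh: the next read misses and reloads
        // the fresh row from the database. Keys follow a "Table:Id" convention.
        db.RowChanged += (table, id) => _items.TryRemove(table + ":" + id, out _);
    }

    public void Insert(string key, object value) => _items[key] = value;

    // Returns null on a cache miss.
    public object Get(string key) => _items.TryGetValue(key, out var value) ? value : null;
}
```

Invalidating is simpler and safer than pushing the new value into the cache, because the cache never has to interpret the change itself.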

Managing Data Relationships in the Cache

Most data comes from a relational database, and even data that doesn't is often relational in nature. For example, suppose you're caching a customer object and an order object, and the two objects are related: a customer can have multiple orders.

When you have these relationships, you need to be able to handle them in a cache. That means the cache should know about the relationship between a customer and an order. If you update or remove the customer from the cache, you may want the cache to automatically remove the order object from the cache. This helps maintain data integrity in many situations.

If a cache can't keep track of these relationships, you'll have to do it yourself—and that makes your application more cumbersome and complex.
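Here is a minimal sketch of a cache that tracks such relationships. Each item can name the keys it depends on, and updating or removing a master item cascades to its dependents. The class `DependencyCacheSketch` and its methods are hypothetical illustrations of key-based dependencies. NCache's own API for this feature is not shown here.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch of key-based dependencies: updating or removing a master
// item (e.g. a customer) also removes every item that depends on it (e.g. its orders).
public class DependencyCacheSketch
{
    private readonly Dictionary<string, object> _items = new Dictionary<string, object>();

    // master key -> keys of the items that depend on it
    private readonly Dictionary<string, HashSet<string>> _dependents =
        new Dictionary<string, HashSet<string>>();

    public void Insert(string key, object value, params string[] dependsOn)
    {
        // Updating an existing item invalidates whatever depended on its old value.
        if (_items.ContainsKey(key)) RemoveDependents(key);
        _items[key] = value;

        // Record this item as a dependent of each master key.
        foreach (string master in dependsOn)
        {
            if (!_dependents.TryGetValue(master, out var set))
                _dependents[master] = set = new HashSet<string>();
            set.Add(key);
        }
    }

    public void Remove(string key)
    {
        // Cascade only if the item was actually present (also stops cycles).
        if (_items.Remove(key)) RemoveDependents(key);
    }

    private void RemoveDependents(string key)
    {
        if (!_dependents.TryGetValue(key, out var set)) return;
        _dependents.Remove(key);
        foreach (string dependent in set) Remove(dependent); // recursive cascade
    }

    public bool Contains(string key) => _items.ContainsKey(key);
}
```

In this sketch, inserting orders with `dependsOn: "Customer:1"` means that removing or re-inserting `"Customer:1"` evicts those orders automatically, so the application code no longer has to track the relationship itself.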

Conclusion

Thus, an SOA application can't scale effectively when the data it uses is kept in storage that does not scale for frequent transactions. This is where a distributed cache like NCache really helps. In an enterprise environment, an SOA-based application can't truly scale without a true distributed caching infrastructure. Traditional database servers are also improving, but without distributed caching, service applications can't meet the exploding demand for scalability in today's complex application environments.

Iqbal Khan works for Alachisoft, a leading software company providing .NET and Java distributed caching, O/R Mapping, and SharePoint Storage Optimization solutions. You can reach him at iqbal@alachisoft.com.