Distributed Persistent Caches

Distributed Persistent Caches in NCache provide robust key-value storage with ultra-low latency. By maintaining a real-time copy of cache data in a persistence store, NCache ensures high data availability and fault tolerance, allowing rapid data recovery even after catastrophic cluster failures or planned restarts. This approach is designed specifically for Partitioned Topologies and Local (OutProc) Caches, and it ensures that critical application data (including streams, data structures, and dynamic indexes) remains permanent and recoverable.

Note

Distributed cache with persistence supports only Partitioned Topologies and Local (OutProc) Caches.

You can create a distributed cache with persistence that uses a NoSQL Document Store as its persistence store for data backup. The cache persists all write operations, meta-info, streams, data structures, and Dynamic Indexes to the backend store.

Note

Pub/Sub messages are not persisted, but NCache supports Pub/Sub API and enables you to create a dedicated Pub/Sub Messaging Cache.

Note that data removed from the cache through data invalidation or explicit removal is also removed from the underlying persistence store. Adding items with Expiration and Key Dependency is supported, but we do not recommend it because the data stored in a persistent cache is meant to be permanent. Items with a dependency on the database or on external sources are not persisted.

Note

Similar to a volatile distributed cache, a backing source is supported for a Distributed Cache with Persistence.

Persistent Cache: Why Persist Data

NCache stores data in RAM for faster access. Since cache memory is volatile, data loss is inevitable in the following scenarios:

- Node down in Partitioned topology.

- More than one node down simultaneously in Partition-Replica topology.

- Cluster down either due to maintenance reasons or catastrophic failure.

With a persistent cache, you can achieve the following:

High data availability: In case of memory failure due to any of the reasons mentioned previously, NCache quickly recovers the data by loading it from the underlying persistence store. The cache becomes operational without affecting the client operations even after a catastrophic failure.

Fault tolerance: Maintaining a real-time copy of cache data minimizes downtime and provides fault tolerance when single/multiple nodes leave the cluster.

Important

For persistence, the key length shouldn't exceed 1023 bytes.
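As a rough illustration of the limit above, an application could check the byte length of a key before adding it. This is a minimal sketch, not an NCache API: the helper name is hypothetical, and UTF-8 is only an assumed encoding, since the source does not say how NCache measures key length.

```python
MAX_PERSISTED_KEY_BYTES = 1023  # limit stated in the note above


def key_fits_persistence_limit(key: str, encoding: str = "utf-8") -> bool:
    """Return True if the encoded key is within the documented byte limit.

    The encoding is an assumption for illustration; the byte count of a key
    depends on how it is actually encoded.
    """
    return len(key.encode(encoding)) <= MAX_PERSISTED_KEY_BYTES
```

Note that the limit is in bytes, not characters, so a key containing non-ASCII characters can exceed it with fewer than 1023 characters.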

Persistent Cache: How it Works

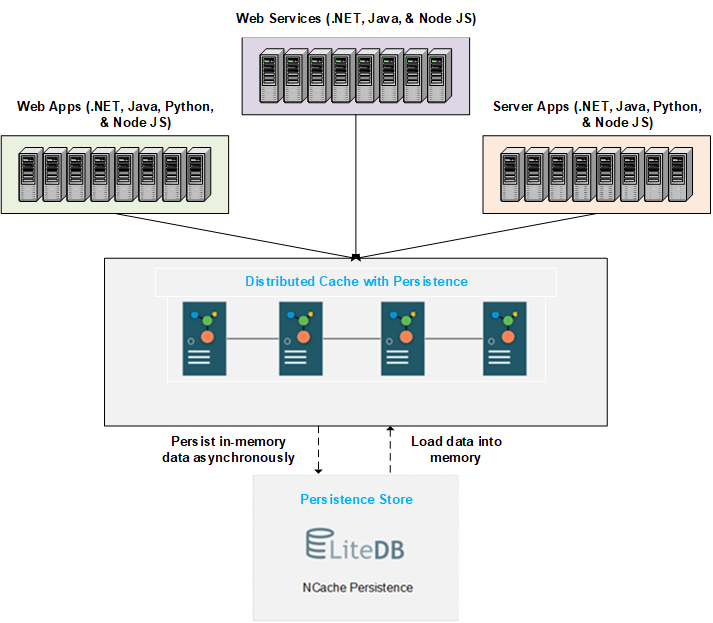

This section describes the working and behavior of a distributed cache with persistence. The diagram above shows the basic architecture. You create a distributed cache with persistence by attaching a persistence store at the backend. The store is centralized and accessible to all the nodes. NCache writes data added to the cache to the backend store at configurable time intervals. On cache restart or node leave/join, as much of the persisted data as the cache servers can hold is loaded into memory.

We discuss the detailed process of data persistence and loading below.

Data Persistence in a Persistent Cache

Once you create a distributed cache with persistence, all the write operations are first performed in memory and then persisted to the backend store. Since NCache has a distributed architecture, each server node persists its data while all server nodes can access the store. Moreover, since the data distribution is bucket-based due to partitions, the data is persisted likewise.

Async Persistence in a Persistent Cache

NCache writes in-memory data to the persistence store asynchronously. Each partition has a persistence queue that records the client operations performed on it. Every write operation performed by the client is enqueued once it succeeds in memory. Since persistence works asynchronously, the client does not wait after the operation is enqueued. A persistence thread checks the queued operations periodically, at a configurable persistence-interval, and writes them to the backend store. Each queue is written independently.
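The queue-then-flush flow described above can be modeled in a few lines. This is a conceptual sketch only, not NCache code: the class and method names are hypothetical, a plain dictionary stands in for the backend store, and `flush` represents one pass of the persistence thread.

```python
from collections import deque


class PartitionPersistenceQueue:
    """Toy model of one partition: writes hit memory first, then a queue."""

    def __init__(self, store: dict):
        self.memory = {}        # in-memory cache data for this partition
        self.pending = deque()  # operations waiting to be persisted
        self.store = store      # dict standing in for the persistence store

    def write(self, key, value):
        # The write completes in memory; the client does not wait for the store.
        self.memory[key] = value
        self.pending.append(("put", key, value))

    def remove(self, key):
        # Removals are queued too, so the store eventually drops the item.
        self.memory.pop(key, None)
        self.pending.append(("remove", key, None))

    def flush(self) -> int:
        """Apply all queued operations to the store, in order."""
        applied = 0
        while self.pending:
            op, key, value = self.pending.popleft()
            if op == "put":
                self.store[key] = value
            else:
                self.store.pop(key, None)
            applied += 1
        return applied
```

In this model the store lags behind memory until the next `flush`, which is the trade-off that keeps client writes fast.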

Note

The default value of persistence-interval is 1 sec and it is configurable in the NCache Management Center.

Normally, a batch is applied after every persistence-interval. If the persistence batch fails persistence-retries times consecutively, the batch interval shifts to persistence-interval-longer. Once a batch succeeds, the batch interval resets to persistence-interval.
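The interval switching can be expressed as a small function. This is a sketch under assumptions: the function name is hypothetical, the parameters mirror the setting names in the text, and treating "after persistence-retries" as "at least that many consecutive failures" is an interpretation rather than confirmed behavior.

```python
def next_batch_interval(
    consecutive_failures: int,
    persistence_retries: int,
    persistence_interval: float,
    persistence_interval_longer: float,
) -> float:
    """Return the wait (in seconds) before the next persistence batch.

    Assumption: the longer interval applies once the number of consecutive
    failures reaches persistence_retries; a success resets the count to 0.
    """
    if consecutive_failures >= persistence_retries:
        return persistence_interval_longer
    return persistence_interval
```

For example, with the default persistence-interval of 1 second and illustrative values of 3 retries and a 10 second longer interval, the third consecutive failure moves the batch to the 10 second schedule, and the first success afterwards returns it to 1 second.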

Important

Because persistence is asynchronous, client operations proceed as usual and the performance of the cache does not degrade.

If the cache cannot persist the operations in the persistence queue for any reason, it keeps accepting write operations until the cache is full. If the queued operations are not persisted, information about the failed buckets is written to the cache logs.

Note

The Partition-Replica topology recovers from queue failure through the replica's queue. However, data loss is inevitable if a node and its replica are down simultaneously.

Data Loading in a Persistent Cache

Once data is in the store, the cache automatically reloads the persisted data into RAM on cache restart. The persistence store must be available to the cache nodes at all times; if they cannot access the store, the cache will not start. Data loading occurs in a distributed manner: since data is stored by bucket, each node accesses the centralized store to load its assigned buckets according to the data distribution map. Moreover, if you have configured indices for querying data in the cache, the query indices are regenerated on cache restart.

Important

The persistence store should be available to all cache nodes at all times.

Operation Behavior During Data Loading

Data loading and persistence run simultaneously. During loading, key-based fetches are served from the cache if the requested data has already been loaded. If it is not yet in the cache, such operations are served directly from the store through lazy loading, which slows down the Get operation.

Please note that the non-key-based or criteria-based search operations, such as GetGroupKeys, GetKeysByTag, and SQL queries, will not be served until data completely loads from the store into the memory.

Warning

If a non-key-based search operation occurs during data loading and the requested data has not completely loaded, the application receives an exception stating that data is not completely loaded from the persistence store.
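The difference between key-based and criteria-based reads during loading can be illustrated with a toy model. Everything here is hypothetical and does not use the NCache client API: the class, the exception type, and the dictionary-backed store are stand-ins, and keeping a lazily loaded item in memory is an assumption.

```python
class DataNotLoadedError(Exception):
    """Hypothetical stand-in for the exception described in the warning."""


class LoadingCacheModel:
    def __init__(self, store: dict):
        self.store = store        # key -> (value, tag), stands in for the store
        self.memory = {}          # items loaded into the cache so far
        self.fully_loaded = False

    def load_all(self):
        # Models the end of data loading.
        self.memory.update(self.store)
        self.fully_loaded = True

    def get(self, key):
        # Key-based fetch: serve from memory, otherwise lazy-load from the store.
        if key in self.memory:
            return self.memory[key][0]
        entry = self.store.get(key)
        if entry is None:
            return None
        self.memory[key] = entry  # assumption: lazy-loaded items stay in memory
        return entry[0]

    def get_keys_by_tag(self, tag):
        # Criteria-based search: refused until all data is in memory.
        if not self.fully_loaded:
            raise DataNotLoadedError(
                "data not completely loaded from persistence store"
            )
        return sorted(k for k, (_, t) in self.memory.items() if t == tag)
```

An application that issues tag or SQL queries right after a restart would therefore need to handle this kind of failure, for example by retrying after a delay.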

Data Loading Scenarios

Data loads from the persistence store in the following scenarios:

On Cache Start: On cache start, the coordinator node loads all the buckets. As soon as other nodes join the cluster, bucket distribution is updated. Each node looks for its assigned buckets in the local environment. The buckets loaded in the cache are pulled through state transfer. If the buckets assigned to a node do not load completely, then those buckets are loaded directly from the store by that node. Each node can access the store to load its assigned buckets if present in the cache.

When a distributed cache with persistence starts for the first time, the persistence store has no data, so you can populate the cache by configuring the Cache Startup Loader. Once the store is populated, data always loads from the store on cache start, even if you have configured the Cache Loader. However, if you need to add more data periodically, you can use the Cache Refresher, which runs at periodic intervals irrespective of whether the cache and the store already have the data.

On Node Join: When a new node joins the cluster, it gets the assigned buckets from the existing cluster nodes through state transfer if they are already loaded. If the assigned buckets do not completely load in the cache, they are loaded from the store by the new node.

On Node Leave: Data is loaded from the store to avoid data loss when a node/nodes leave the cluster. The loading behavior on node leave varies for different topologies.

Partitioned Topology: When a node leaves, its buckets are distributed among the existing cluster nodes and loaded from the persistence store by the new owners.

Partition-Replica Topology: Partition-Replica tolerates the failure of a single node by recovering the lost buckets through state transfer from the replica. When a node and its replica are down simultaneously, the lost data is still recoverable from the persistence store.
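The node-leave behavior for the two topologies reduces to a simple decision, sketched below. The function name, the topology strings, and the returned labels are illustrative only and are not NCache identifiers.

```python
def recovery_source(topology: str, replica_available: bool) -> str:
    """Pick where a departed node's buckets are recovered from.

    topology is either "partitioned" or "partition-replica" (labels chosen
    for this sketch only).
    """
    if topology == "partition-replica" and replica_available:
        # A surviving replica already holds the buckets in memory.
        return "state transfer from replica"
    # Partitioned topology, or a node and its replica lost together.
    return "persistence store"
```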

Important

Cache should have the capacity to accommodate the data in case of node down/leave.

Capacity Management for a Distributed Cache with Persistence

A persistent cache can recover from data loss on node leave or down only when the cache has enough cushion to accommodate the data of the departed node/nodes. If the cache cannot accommodate all data on the persistence store due to being full or any other reason, the add or update operations will begin failing. Meanwhile, some of the buckets will not have complete data. These incomplete buckets reload from the store in two cases:

- A new node joins the cluster.

- The cache size increases through hot apply.

Note

If a cache is full but is 100% in sync with the persistence store, only new additions are blocked. All other operations may occur on the cache without any problems.

When the cache is full with partial data in memory, the cache may not serve non-key-based or criteria-based operations (such as GetGroupKeys, GetKeysByTag, and SQL queries). On the other hand, the key-based fetches will always be served either through the cache or through the store. Specifically, the cache will try to lazy-load all key-based gets for the incomplete buckets in case of cache misses.

Warning

If a criteria-based search operation takes place when the cache is full and the requested data is not in the cache, an exception is thrown stating: "Operation cannot occur because cache does not have all data in-memory."

Capacity Planning For Cache Full

To avoid the issues raised on cache full, you need to plan the capacity of your persistent cache before you start using it. When doing the capacity planning for a distributed cache with persistence, we recommend that you plan the size of the cache per node so that if a node goes down, the remaining nodes can accommodate all the data of the lost node.
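The sizing recommendation above amounts to dividing the total data by the number of nodes that must survive. The following is a back-of-the-envelope sketch with hypothetical names; it ignores replica memory, indexes, and any per-item overhead, all of which a real plan would need to add.

```python
import math


def min_cache_size_per_node(
    total_data_mb: float, node_count: int, tolerated_failures: int = 1
) -> int:
    """Smallest per-node size (MB) so surviving nodes can hold all data."""
    surviving = node_count - tolerated_failures
    if surviving < 1:
        raise ValueError("at least one node must survive")
    return math.ceil(total_data_mb / surviving)
```

For instance, 12,000 MB of data on a 4-node cluster that should tolerate one node going down needs at least 4,000 MB per node, compared with 3,000 MB per node if no failure were planned for.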

Cache Size Expansion On Cache Full

Important

NCache tries to ensure high data availability on single node leave in a Partition-Replica-based distributed cache with persistence through size expansion. However, high data availability is not promised or guaranteed.

In the case of Partition-Replica, if a node leaves the cluster, the remaining nodes accommodate the data owned by the leaving node. However, the remaining nodes may not have enough space for that data because of sizing issues. For this reason, NCache supports automatic cache size expansion when a node leaves a Partition-Replica-based distributed cache with persistence. Expansion mode is supported only when a single node goes down. Its purpose is to avoid partial data in the cache and to keep serving criteria-based operations on the full cache.

The expansion process occurs internally. The expansion mode triggers when a single node leaves the cluster while running nodes are either equal to or greater than the configured nodes. Expanded size is calculated based on the configured nodes or running nodes in the cluster (whichever is higher). When the cache is in expanded mode, each node in the cluster grows its size automatically to accommodate the data received through state transfer on node leave.

Important

The expansion occurs only when the number of remaining nodes (after the node goes down) equals max(configured nodes, running nodes) - 1. The expanded size is calculated based on the configured nodes or the running nodes in the cluster (whichever is higher).
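The trigger condition can be written as a predicate. This sketch reflects one reading of the rule, namely that "running nodes" means the count just before the node went down; the function and parameter names are hypothetical.

```python
def expansion_triggers(
    configured_nodes: int, running_nodes_before: int, nodes_lost: int
) -> bool:
    """Return True if expanded mode would start under the stated rule.

    Assumption: running_nodes_before is the node count just before the
    failure, and expansion applies only when exactly one node is lost.
    """
    remaining = running_nodes_before - nodes_lost
    expected_remaining = max(configured_nodes, running_nodes_before) - 1
    return nodes_lost == 1 and remaining == expected_remaining
```

Under this reading, a cluster that was already running with fewer nodes than configured does not expand when it loses another node, which matches the requirement that running nodes be equal to or greater than the configured nodes.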

In expanded mode, both key-based and criteria-based operations are served. However, add operations are still blocked once the cache size has crossed the configured cache size.

The cache exits expanded mode when either a new node joins the cluster or the cache size increases through hot apply. The distribution map is then updated, and state transfer is triggered. Once state transfer is complete, each node exits expanded mode, and the cache size returns to the configured cache size.

Note

An entry is logged in both the cache logs and the event logs when the cache enters and exits expanded mode.

Inaccessibility Behaviors

Data loads from the persistence store at cache startup, so the store must be consistently available to the cache nodes. Here, we discuss the behaviors when the persistence store is inaccessible:

At the time of cache creation: The connection to the store is verified at the time of cache creation. If the store is inaccessible, you won't be able to create the cache and will receive an error notification. Similarly, a cache will not start if the store is unavailable to any cluster node.

Warning

The cache will not start until the persistence store is accessible to all the server nodes at the time of cache creation.

At the time of data loading: The store may become inaccessible during data loading due to network failure. In that case, the cache retries loading the remaining data buckets that are in the loading state. Meanwhile, the non-key-based search operations will fail. You can configure data loading retries in the service config file.

At the time of running cache: The store can become inaccessible to a running cache due to network failure, either for a brief period or indefinitely. On such a connection loss, the cache keeps accepting and enqueueing write operations. Meanwhile, NCache keeps trying to persist the queued operations at batch intervals until the connection to the persistence store is re-established.

If the connection is lost indefinitely, write operations continue until the cache memory is full. Once the cache is full, subsequent write operations will fail. However, key-based fetch operations will be served as discussed before.
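The running-cache outage behavior can be simulated with a small model. It is a simplification with hypothetical names: capacity is counted in items rather than memory size, a flag stands in for store connectivity, and `persist_batch` represents one batch attempt.

```python
class CacheFullError(Exception):
    """Hypothetical error for a write rejected because the cache is full."""


class RunningCacheModel:
    def __init__(self, capacity: int):
        self.capacity = capacity   # max items in memory (simplified sizing)
        self.memory = {}
        self.pending = []          # queued operations awaiting persistence
        self.store = {}            # dict standing in for the persistence store
        self.store_online = True

    def add(self, key, value):
        # New keys are rejected once memory is full; otherwise enqueue as usual.
        if key not in self.memory and len(self.memory) >= self.capacity:
            raise CacheFullError("cache is full")
        self.memory[key] = value
        self.pending.append((key, value))

    def persist_batch(self) -> bool:
        # While the store is unreachable, operations simply stay queued.
        if not self.store_online:
            return False
        for key, value in self.pending:
            self.store[key] = value
        self.pending.clear()
        return True
```

Once the store is reachable again, the next batch attempt drains everything that accumulated during the outage.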

Persistent Cache Creation and Monitoring

Note

Only JSON serialized cache is supported for a distributed cache with persistence.

You can create a distributed cache with persistence by specifying a new store or an existing one (created using NCache), either through the NCache Management Center or through PowerShell.

NCache provides performance counters for monitoring the statistics of a distributed cache with persistence. You can monitor a distributed cache with persistence through the NCache Monitor, PowerShell, and the PerfMon tool.

See Also

Create a Distributed Cache with Persistence

Getting Started with Distributed Cache with Persistence