Using Read-through Cache & Write-through Cache

With the rapid growth of high-transaction web apps, service-oriented architecture (SOA), grid computing, and other server applications, traditional data storage often struggles to keep up. Application tiers can scale out by adding more servers, but data storage systems cannot scale out the same way. This article explains the caching strategies used in distributed systems, with examples from NCache.

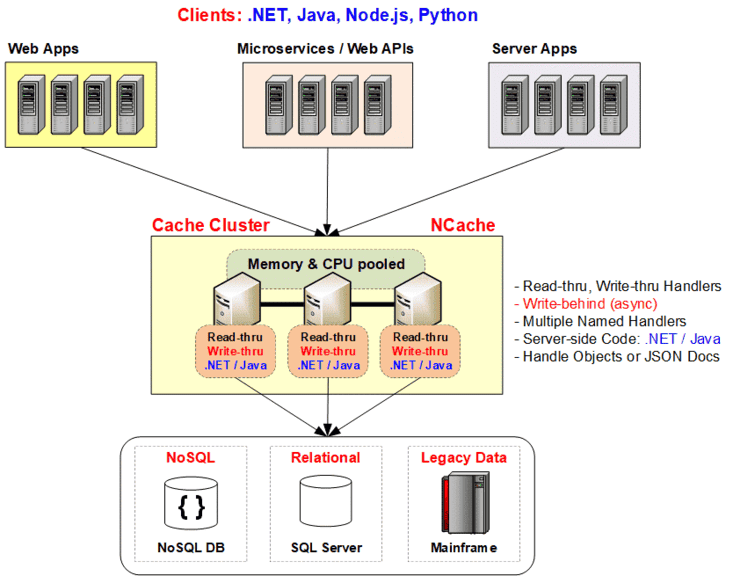

In these situations, an in-memory distributed cache is an excellent solution to data storage bottlenecks. It spans multiple servers in a cluster, pools their memory, and keeps the cache synchronized across all nodes. Like the application tier, this cluster can scale out by adding more servers. It takes load off the underlying data storage, so the database is no longer the scalability bottleneck.

Key Takeaways

- Read-Through Cache: When the application requests an item that is not in the cache, NCache automatically fetches it from the database. This simplifies application code.

- Write-Through Cache: NCache updates the database synchronously whenever the cache is updated, keeping the cache and the database consistent. This also simplifies application code.

- Write-Behind Cache: NCache updates the cache immediately, queues the database updates, and applies them to the database asynchronously in the background. This significantly improves write performance because the application does not wait for the slow database update.

- Versus Cache-Aside: Read-through/write-through strategies treat the cache as the primary data store and hide database interaction from the application. With the cache-aside pattern, the application talks to the database directly and caches the data it fetches.
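To make the difference between the two write strategies concrete, here is a minimal, self-contained C# sketch. It does not use NCache; RecordingStore, WriteThroughCache, WriteBehindCache, FlushPending and its maxBatch limit are hypothetical names standing in for a database and a cache. Write-through updates the store before returning, while write-behind updates the cache, queues the store write, and drains the queue later in bounded batches.

```csharp
#nullable enable
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical sketch, not NCache's API: an in-memory "database" that counts writes.
public class RecordingStore
{
    public Dictionary<string, string> Rows { get; } = new();
    public int WriteCalls { get; private set; }

    public void Write(string key, string value)
    {
        WriteCalls++;
        Rows[key] = value;
    }
}

// Write-through: the store is updated before the cache, inside the caller's
// request, so the caller waits for the (slow) database write.
public class WriteThroughCache
{
    private readonly ConcurrentDictionary<string, string> _cache = new();
    private readonly RecordingStore _store;

    public WriteThroughCache(RecordingStore store) => _store = store;

    public void Put(string key, string value)
    {
        _store.Write(key, value); // if this throws, the cache is left unchanged
        _cache[key] = value;
    }

    public string? Get(string key) => _cache.TryGetValue(key, out var v) ? v : null;
}

// Write-behind: the cache is updated immediately and the store write is queued.
public class WriteBehindCache
{
    private readonly ConcurrentDictionary<string, string> _cache = new();
    private readonly ConcurrentQueue<KeyValuePair<string, string>> _pending = new();
    private readonly RecordingStore _store;

    public WriteBehindCache(RecordingStore store) => _store = store;

    public void Put(string key, string value)
    {
        _cache[key] = value;                               // caller returns right away
        _pending.Enqueue(KeyValuePair.Create(key, value)); // database write deferred
    }

    public string? Get(string key) => _cache.TryGetValue(key, out var v) ? v : null;

    public int PendingCount => _pending.Count;

    // Drains at most maxBatch queued writes per call (a simple throttle).
    // A real implementation would call this from a background worker or timer.
    public int FlushPending(int maxBatch)
    {
        int written = 0;
        while (written < maxBatch && _pending.TryDequeue(out var item))
        {
            _store.Write(item.Key, item.Value);
            written++;
        }
        return written;
    }
}
```

FlushPending is called explicitly here so the behavior is deterministic; capping each flush at maxBatch writes is one simple way to keep write-behind from overwhelming the database.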

Difference between Cache-Aside and Read-Through/Write-Through

There are two main ways people use a distributed cache:

- Cache-aside: The application is responsible for reading from and writing to the database, and the cache does not interact with the database at all. The cache is "kept aside" as a faster and more scalable in-memory data store. The application checks the cache before reading anything from the database and updates the cache after making any updates to the database. This way, the application keeps the cache synchronized with the database.

- Read-through / Write-through: The application treats the cache as the main data store, reading data from it and writing data to it. The cache is responsible for reading and writing this data to the database, which relieves the application of this responsibility.
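The following self-contained C# sketch (not NCache code) contrasts the two approaches. FakeDatabase, CacheAsideRepository and ReadThroughCache are hypothetical names. In the cache-aside version the application code queries the database itself on a miss; in the read-through version the application only calls the cache, and the cache invokes a loader delegate that plays the role of a read-through provider.

```csharp
#nullable enable
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical sketch, not NCache code: a "database" that counts how often it is queried.
public class FakeDatabase
{
    private readonly Dictionary<string, string> _rows = new()
    {
        ["product:1"] = "Chai",
        ["product:2"] = "Chang",
    };

    public int Reads { get; private set; }

    public string? Load(string key)
    {
        Reads++;
        return _rows.TryGetValue(key, out var value) ? value : null;
    }
}

// Cache-aside: the application code checks the cache, queries the database
// itself on a miss, and then populates the cache.
public class CacheAsideRepository
{
    private readonly ConcurrentDictionary<string, string> _cache = new();
    private readonly FakeDatabase _db;

    public CacheAsideRepository(FakeDatabase db) => _db = db;

    public string? GetProduct(string key)
    {
        if (_cache.TryGetValue(key, out var cached)) return cached;
        var value = _db.Load(key);               // database access lives in the app
        if (value != null) _cache[key] = value;
        return value;
    }
}

// Read-through: the application only calls Get; on a miss the cache itself
// invokes the loader it was configured with (the "provider").
public class ReadThroughCache
{
    private readonly ConcurrentDictionary<string, string> _cache = new();
    private readonly Func<string, string?> _loader;

    public ReadThroughCache(Func<string, string?> loader) => _loader = loader;

    public string? Get(string key)
    {
        if (_cache.TryGetValue(key, out var cached)) return cached;
        var value = _loader(key);                // database access lives in the cache tier
        if (value != null) _cache[key] = value;
        return value;
    }
}
```

Both versions end up with the same data in the cache; the difference is where the database logic lives. With read-through, the loader is registered once with the cache instead of being repeated in every piece of application code that reads the data.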

Comparison: Cache-Aside vs. Read-Through vs. Write-Through vs. Write-Behind

| Feature | Cache-Aside | Read-Through | Write-Through | Write-Behind |
|---|---|---|---|---|
| Primary Responsibility | Application manages the database interaction | Cache manages DB reads | Cache manages DB writes (sync) | Cache manages DB writes (async) |
| Code Complexity | High (DB logic in app) | Low (DB logic in cache provider) | Low (DB logic in cache provider) | Low (DB logic in cache provider) |
| Read Scalability | Moderate (risk of "thundering herd") | High (requests coalesce on cache) | N/A | N/A |
| Write Performance | Slower (app waits for DB) | N/A | Slower (app waits for DB) | Fastest (app does not wait for DB) |
| Data Consistency | High | High | High | Eventual (brief delay before DB update) |

Benefits of Read-through Cache & Write-through Cache over Cache-aside

Cache-aside is a very powerful technique: it lets you issue complex database queries involving joins and nested queries and manipulate data any way you want. Despite that, Read-through / Write-through has several advantages over cache-aside, as described below:

- Simplify application code: In the cache-aside approach, your application code keeps its database-access complexity and direct dependence on the database, and code is even duplicated when multiple applications work with the same data. Read-through / Write-through moves some of the data access code from your applications to the caching tier. This dramatically simplifies your applications and abstracts the database away more cleanly.

- Better read scalability with Read-through Cache: In many situations, a cache item expires and multiple parallel user threads end up hitting the database for it. Multiply this by millions of cached items and thousands of parallel user requests, and the load on the database becomes noticeably higher. Read-through, by contrast, keeps the cache item in the cache while it fetches the latest copy from the database, and then updates the item. As a result, the application does not go to the database for these cache items, and the database load is kept to a minimum.

- Better write performance with Write-behind: In cache-aside, the application updates the database directly and synchronously. Write-behind caching, by contrast, lets your application update the cache quickly and return, and the cache then updates the database in the background.

- Better database scalability with Write-behind: With Write-behind, you can specify throttling limits so that database writes are applied at a controlled rate rather than as fast as the cache updates, which keeps the pressure on the database low. You can also schedule the database writes to occur during off-peak hours to reduce that pressure further.

- Auto-refresh cache on expiration: Read-through lets the cache automatically reload an object from the database when it expires, so your application does not have to hit the database during peak hours for that data.

- Auto-refresh cache on database changes: Read-through can also automatically reload an object from the database when its corresponding data changes in the database. This keeps the cache fresh, so your application does not need to go to the database for that data.
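The read-scalability and auto-refresh points can be illustrated with a self-contained C# sketch, assuming simple time-based expiration and an injectable clock. RefreshingReadThroughCache and WaitForRefreshAsync are hypothetical names, and this is not how NCache implements the feature internally; it only shows the idea. Concurrent misses for the same key share one Lazy<T>, so the loader runs once; when an item has expired, callers keep receiving the old value while at most one background reload per key at a time fetches the latest copy.

```csharp
#nullable enable
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical sketch, not NCache's implementation. Concurrent cold misses for a
// key share one Lazy<Entry>, so the loader runs once; after expiration, callers
// keep getting the old value while one background reload fetches a fresh copy.
public class RefreshingReadThroughCache
{
    private sealed record Entry(string Value, DateTime ExpiresAt);

    private readonly ConcurrentDictionary<string, Lazy<Entry>> _entries = new();
    private readonly ConcurrentDictionary<string, Lazy<Task>> _refreshing = new();
    private readonly Func<string, string> _loader;
    private readonly TimeSpan _ttl;
    private readonly Func<DateTime> _now; // injectable clock keeps the sketch testable

    public RefreshingReadThroughCache(Func<string, string> loader, TimeSpan ttl, Func<DateTime> now)
    {
        _loader = loader;
        _ttl = ttl;
        _now = now;
    }

    public string Get(string key)
    {
        var lazy = _entries.GetOrAdd(key, k => new Lazy<Entry>(() => Load(k)));
        Entry entry;
        try
        {
            entry = lazy.Value; // only one caller runs the loader for a cold key
        }
        catch
        {
            // Lazy caches exceptions, so drop the failed load to allow a retry.
            _entries.TryRemove(KeyValuePair.Create(key, lazy));
            throw;
        }

        if (_now() >= entry.ExpiresAt)
        {
            // Expired: start (or join) the in-flight reload, but do not wait for it.
            _ = _refreshing.GetOrAdd(key, k => new Lazy<Task>(() => Task.Run(() => Reload(k)))).Value;
        }
        return entry.Value; // possibly stale until the reload completes
    }

    // Lets callers such as tests wait for an in-flight reload.
    public Task WaitForRefreshAsync(string key) =>
        _refreshing.TryGetValue(key, out var refresh) ? refresh.Value : Task.CompletedTask;

    private Entry Load(string key) => new(_loader(key), _now() + _ttl);

    private void Reload(string key)
    {
        try
        {
            var fresh = Load(key);
            _entries[key] = new Lazy<Entry>(() => fresh); // swap in the latest copy
        }
        finally
        {
            _refreshing.TryRemove(key, out _); // a failed reload keeps the old value
        }
    }
}
```

If a background reload throws, the old value stays in place and the next read after expiration starts another reload; a failed cold load is removed so that a later call can retry.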

Read-through / Write-through is not intended for all data access in your application. It is best suited to reading individual rows from the database, or data that maps directly to an individual cache item. It is also ideal for reference data that is kept in the cache for frequent reads, even though this data changes periodically.

Implementing a Read-through Cache

A Read-through provider in NCache is a custom .NET class that implements the IReadThruProvider interface and fetches data from the data source (e.g., SQL Server) when it is not found in the cache. NCache calls LoadFromSource automatically on a cache miss. To use it, implement the provider, deploy it using the NCache Management Center or command-line tools, and enable it in the cache settings.

```csharp
using System.Collections;
using System.Collections.Generic;
using System.Linq;
using Alachisoft.NCache.Runtime.DatasourceProviders;
// Depending on your NCache version, types such as ProviderCacheItem and
// DistributedDataType may need additional NCache namespace imports.

public class SampleReadThruProvider : IReadThruProvider
{
    public void Init(IDictionary parameters, string cacheId)
    {
        // Initialize resources (e.g., a database connection) if needed
    }

    // Called on a cache miss for a single key. Database.GetData is a
    // placeholder for your own data-access code.
    public ProviderCacheItem LoadFromSource(string key) =>
        new(Database.GetData(key));

    // Bulk variant: LINQ builds the key-to-item map instead of a foreach loop
    public IDictionary<string, ProviderCacheItem> LoadFromSource(ICollection<string> keys)
    {
        return keys.ToDictionary(
            key => key,
            key => new ProviderCacheItem(Database.GetData(key)));
    }

    // Loads data structures; the switch expression picks the shape to build
    public ProviderDataTypeItem<IEnumerable> LoadDataTypeFromSource(string key, DistributedDataType dataType)
    {
        return dataType switch
        {
            DistributedDataType.List => new(new List<object> { Database.GetData(key) }),
            DistributedDataType.Dictionary => new(new Dictionary<string, object>
            {
                [key] = Database.GetData(key)
            }),
            DistributedDataType.Counter => new(1000),
            _ => null! // unsupported type; null-forgiving operator if nullable context is enabled
        };
    }

    public void Dispose()
    {
        // Clean up resources if needed
    }
}
```

Init() performs resource allocation tasks such as establishing connections to the main data source, whereas Dispose() releases those allocations.
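As an illustration of that Init/Dispose lifecycle, the self-contained sketch below mirrors the shape of those two methods without referencing NCache. It assumes the connection string is supplied through the parameters dictionary under a key named connectionString; that key name, FakeConnection and ProviderLifecycleSketch are hypothetical. Init acquires the resource once and fails fast when the parameter is missing, and Dispose releases it.

```csharp
#nullable enable
using System;
using System.Collections;

// Hypothetical stand-in for a real database connection such as a SqlConnection.
public sealed class FakeConnection : IDisposable
{
    public FakeConnection(string connectionString) => ConnectionString = connectionString;
    public string ConnectionString { get; }
    public bool IsOpen { get; private set; } = true;
    public void Dispose() => IsOpen = false;
}

// Mirrors the provider's Init/Dispose shape without referencing NCache.
public sealed class ProviderLifecycleSketch : IDisposable
{
    private FakeConnection? _connection;

    public bool IsConnected => _connection?.IsOpen == true;

    public void Init(IDictionary parameters, string cacheId)
    {
        // "connectionString" is an assumed parameter name, not a built-in NCache key.
        if (parameters["connectionString"] is not string cs || cs.Length == 0)
            throw new ArgumentException($"Cache '{cacheId}': missing 'connectionString' parameter.");

        _connection = new FakeConnection(cs); // acquire once, reuse for every load
    }

    public void Dispose()
    {
        _connection?.Dispose(); // release what Init acquired
        _connection = null;
    }
}
```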

Implementing a Write-through Cache

A Write-Through provider in NCache is a custom class that implements IWriteThruProvider to persist cache updates (add, update, remove) directly to the database whenever the cache is updated.

To use it, implement the interface, deploy the provider using the NCache Management Center, and enable it in the cache configuration.

```csharp
using System.Collections;
using System.Collections.Generic;
using System.Linq;
using Alachisoft.NCache.Runtime.DatasourceProviders;

public class SampleWriteThruProvider : IWriteThruProvider
{
    public void Init(IDictionary parameters, string cacheId)
    {
        // Initialize resources (e.g., a database connection) if needed
    }

    public void Dispose()
    {
        // Clean up resources if needed
    }

    // Called for each cache write. Product is your own domain class, and the
    // Database.* calls are placeholders for your data-access code.
    public OperationResult WriteToDataSource(WriteOperation operation)
    {
        var product = operation.ProviderItem.GetValue<Product>();

        // A switch statement suits side-effecting (void) database calls
        switch (operation.OperationType)
        {
            case WriteOperationType.Add:
                // Database.Add(product);
                break;
            case WriteOperationType.Update:
                // Database.Update(product);
                break;
            case WriteOperationType.Delete:
                // Database.Delete(product);
                break;
        }

        // Target-typed "new" infers OperationResult from the return type
        return new(operation, OperationResult.Status.Success);
    }

    // Bulk writes: apply the single-item overload to each operation
    public ICollection<OperationResult> WriteToDataSource(ICollection<WriteOperation> operations) =>
        operations.Select(op => WriteToDataSource(op)).ToList();

    // Data structure writes (lists, dictionaries, counters); persistence logic
    // is omitted here, so every operation is reported as successful
    public ICollection<OperationResult> WriteToDataSource(ICollection<DataTypeWriteOperation> operations) =>
        operations.Select(op => new OperationResult(op, OperationResult.Status.Success)).ToList();
}
```
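The sample provider reports Success for every operation. A minimal, NCache-independent C# sketch of one alternative follows: each operation in a batch is applied on its own and reported individually, so one bad write does not hide the results of the others. OpType, OpStatus, WriteOp, WriteResult, InMemoryProductTable and the "product:{id}" key format are all hypothetical; in a real provider you would translate these outcomes into the appropriate OperationResult.Status values.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical, NCache-independent types that mirror the provider's write path.
public enum OpType { Add, Update, Delete }
public enum OpStatus { Success, Failure }

public record Product(int Id, string Name);
public record WriteOp(string Key, OpType Type, Product? Item);
public record WriteResult(WriteOp Op, OpStatus Status, string? Error = null);

public class InMemoryProductTable
{
    public Dictionary<int, Product> Rows { get; } = new();

    // Applies one operation and reports its own outcome instead of throwing.
    public WriteResult Apply(WriteOp op)
    {
        try
        {
            switch (op.Type)
            {
                case OpType.Add:
                    var added = op.Item ?? throw new ArgumentException("Add requires an item.");
                    Rows.Add(added.Id, added); // throws if the row already exists
                    break;
                case OpType.Update:
                    var updated = op.Item ?? throw new ArgumentException("Update requires an item.");
                    if (!Rows.ContainsKey(updated.Id))
                        throw new KeyNotFoundException($"No row with id {updated.Id}.");
                    Rows[updated.Id] = updated;
                    break;
                case OpType.Delete:
                    Rows.Remove(KeyToId(op.Key)); // deletes need only the key
                    break;
            }
            return new WriteResult(op, OpStatus.Success);
        }
        catch (Exception ex)
        {
            return new WriteResult(op, OpStatus.Failure, ex.Message);
        }
    }

    // Batch writes: one result per operation, so a single failure does not mask the rest.
    public List<WriteResult> ApplyBatch(IEnumerable<WriteOp> ops) => ops.Select(Apply).ToList();

    // Assumed key format: "product:{id}".
    private static int KeyToId(string key) => int.Parse(key.Substring(key.IndexOf(':') + 1));
}
```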