Orlando Code Camp 2019

Optimize ASP.NET Core Performance with Distributed Cache

By Iqbal Khan

President & Technology Evangelist

ASP.NET Core is fast becoming popular for developing high-traffic web applications. Learn how to optimize ASP.NET Core performance for handling extreme transaction loads without slowing down by using an Open Source .NET Distributed Cache. This talk covers:

- Quick overview of ASP.NET Core performance bottlenecks

- Overview of distributed caching and how it solves performance issues

- Where can you use distributed caching in your application(s)

- Some important distributed cache features

- Hands-on examples using Open Source NCache as the distributed cache

In this talk, I'm going to go over how you can optimize ASP.NET Core performance. I'm going to use distributed caching as the technique for making those improvements, and I'm going to use NCache as the example.

ASP.NET Core Popular (High Traffic Apps)

We all know that ASP.NET Core is the new ASP.NET, built on .NET Core: a clean, lightweight architecture that is cross-platform and open source. That is a major reason why more and more people are moving to ASP.NET Core.

Also, there's a huge legacy ASP.NET user base, and especially if you have an ASP.NET MVC application, moving to ASP.NET Core is pretty straightforward. If you're not on MVC then, of course, you've got a lot more code to rewrite. All of these are the reasons, and I'm sure the reason you're here too, why ASP.NET Core is the leading .NET choice for web applications.

ASP.NET Core Needs Scalability

ASP.NET Core is being used more and more in high-traffic situations. High traffic usually means that you have a customer-facing application. You may be an online business, you may be a retail business. There's a host of industries: healthcare, e-government, social media, online betting and gambling, everything you can think of. Everybody is online these days. So, whenever ASP.NET Core is used for a web application like this, ASP.NET Core needs to be scalable.

What is Scalability?

What does that mean? I'm sure you know most of this, but for completeness I'm just going to define the terminology.

Scalability means this: if your application has really good response time with five users (you click, and within a second or two the page comes back), and you can achieve the same response time with five thousand, fifty thousand, or five hundred thousand users, then your application is scalable. If you cannot, then it's not scalable. If you don't have high performance with five users, then that's a different discussion about how you've written your code. I'm assuming your application is developed with good algorithms and a good approach, and that it performs well with five users. So, how do you keep it high-performing under peak loads?

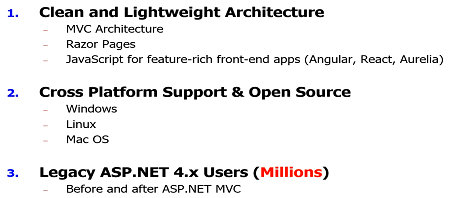

What is Linear Scalability?

So, if you can go from five to five thousand to fifty thousand users while keeping that response time, that is called linear scalability.

The way you achieve it, as you know, is in a load-balanced environment: you deploy your ASP.NET Core application behind a load balancer and you add more and more servers. As you add servers, your transaction capacity, your requests-per-second capacity, increases in a linear fashion. If you can achieve that, you will be able to keep the same performance, and that is the goal of today's talk: high performance under peak loads. If you don't have high performance under peak load, if your application doesn't scale linearly, that means you have a bottleneck somewhere. As soon as you go beyond a certain threshold, it doesn't matter if you add more boxes. In fact, it will probably slow things down, because there is a bottleneck somewhere that is preventing your application from scaling. So, you definitely don't want nonlinear scalability.

Which Apps Need Scalability?

Just to recap, what types of applications need to be scalable?

Obviously, ASP.NET Core, which is what we are talking about. You might also have web services. ASP.NET apps are web apps whose users are humans; web services are also web apps, but their users are other apps. Microservices are a relatively new concept, also for server-side applications. Of course, that involves re-architecting your entire application, and I'm not going to go into how you do that. I'm just mentioning these types of applications so you can see whether whatever you or your company are doing maps to them. And finally, there are a lot of general server applications. These server applications may be doing batch processing. For example, if you're a large corporation, let's say a bank, you may need to process a lot of things in the background in a batch mode or a workflow mode, and those are server apps.

The Scalability Problem & Solution

So, all of these different types of applications need to be scalable, which means they need to be able to process increasing transaction loads without slowing down. Obviously there is a scalability problem, or else we wouldn't be having this conversation. The good news is that your application tier, your ASP.NET Core application, is not the problem; it's the data storage. Whatever data you're touching, it doesn't matter which, is causing a performance bottleneck. That is your relational databases, your legacy mainframe data, and a bunch of other data. Interestingly, NoSQL databases are not always the answer, because a NoSQL database asks you to abandon your relational database and move to the NoSQL database, which in a lot of situations you're not able to do for a variety of technical and non-technical reasons. And, if you're not able to move to a NoSQL database, what good is it? Right?

So, my focus is on solving this problem with the relational database still in the picture, because that's the database you're going to have to keep using for, as I said, a variety of reasons. And, if you're not able to move away from it, then NoSQL databases are not the answer in many situations.

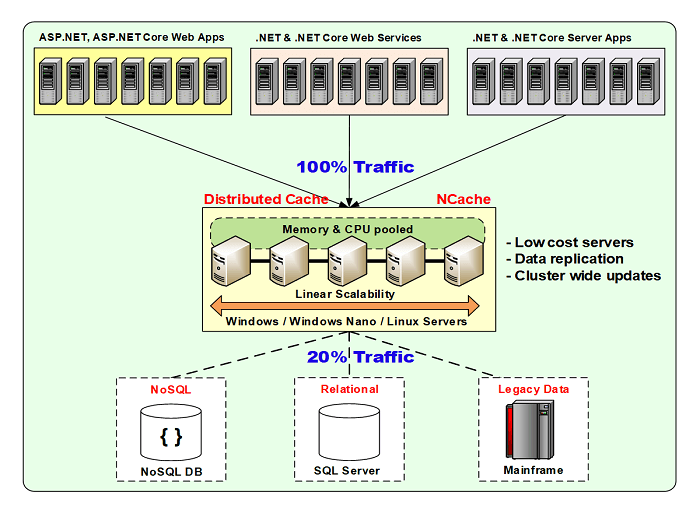

Distributed Cache Deployment

So, you should continue to use your relational database and add a distributed cache to your application.

Here is what it looks like. You've got the same application tier, and you can add more and more servers to it as your transaction load grows. In the case of web apps and web services, there's usually a load balancer in front, making sure that every web server gets an equal load from the users. Server applications may or may not have a load balancer; it depends on the nature of the application. Either way, you can add more and more application servers, but there's only one database. And, as I said, there may be a NoSQL database for some smaller specialty data, but the majority of the data is still relational. So, the goal is to put a caching tier in between. A distributed cache is essentially a cluster of two or more servers, and these are low-cost servers, not high-end database-type servers, with everything stored in memory.

Why in memory? Because memory is just so much faster than disk. If your application needs to perform better and better, you need to be absolutely clear about this: disk, whether it's SSD or HDD, is going to kill you. Everything has to be stored in memory or else you're not going to achieve the performance that you want, regardless of whether you use a distributed cache or not. So, a distributed cache has two or more servers that form a cluster. The word cluster means that every server knows about the other servers in the cluster and they pool their resources: memory, CPU, and network card. Those are the three resources it pools into one logical capacity.

Memory first, because every server has RAM. A typical configuration we have seen our customers use is 16 to 32 GB of RAM in each cache server; we recommend a minimum of 16 GB. Going above 32 GB, to let's say 64 or 128 GB of RAM in each box, requires you to also increase CPU capacity, because the more memory you have, the more garbage collection you have to do. .NET uses a garbage collector, so more garbage collection means more CPU, or else garbage collection becomes the bottleneck. So, it's better to stay in the 16 to 32 GB range and have more boxes than to have two boxes with 128 GB each. That's memory.

CPU is obviously the second resource. Again, a typical configuration is an 8-core box. Some higher-end deployments use 16 cores, but 8 cores per box are good enough. As I said, these are low-cost servers. And then the network card, because all the data sent between the application servers and the cache servers goes through the network cards. On the application tier you obviously have more servers than on the caching tier; we usually recommend about a four-to-one or five-to-one ratio, that is, five application servers to one cache server, with a minimum of two cache servers. So, with five application servers per cache server, five network cards are sending data to one cache server's network card.

So, for the network card, you've got to have at least a 1-gigabit or 10-gigabit card in the cache servers. Other than that, you just pool the servers together. Let's say you start with two, the minimum, and run your application, or maybe you're doing load testing. Everything runs really super-fast. Then, as the load increases, the cache servers start to show higher CPU and memory consumption and the response time starts to slow down. That's exactly what happens with the relational database too, except here you can add a third box and suddenly you get another big relief, with higher throughput again. As you add more transactions it starts to peak out again, and then you add a fourth box, and so on.

That's how this picture continues to grow, and the caching tier almost never becomes a bottleneck (never say never) because you can keep adding servers. As you add more application servers, you just add more cache servers, which you cannot do with the database. Now, I've put an 80/20 split on the slide. In reality, it's more like 90% of the traffic going to the cache and 10% to the database, because you're going to read more and more of your data from the cache. I'll go over some other aspects that a cache has to handle, but this picture is basically the reason why you need a distributed cache.
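As a back-of-the-envelope illustration of the sizing guidance in this section (about five application servers per cache server, at least two cache servers, and roughly 90% of traffic served by the cache), here is a minimal C# sketch. The class and method names are hypothetical, and the ratios are the talk's rules of thumb, not guarantees.

```csharp
using System;

// Hypothetical sizing helper based on the talk's rules of thumb (not part of any product).
public static class CacheSizing
{
    // Roughly five application servers per cache server, never fewer than two cache servers.
    public static int CacheServersFor(int appServers, int appToCacheRatio = 5)
    {
        if (appServers <= 0) throw new ArgumentOutOfRangeException(nameof(appServers));
        int needed = (appServers + appToCacheRatio - 1) / appToCacheRatio; // ceiling division
        return Math.Max(2, needed);
    }

    // Requests per second that still reach the database when a fraction of traffic is served by the cache.
    public static double DatabaseLoad(double totalRequestsPerSecond, double cacheHitRatio)
    {
        if (cacheHitRatio < 0 || cacheHitRatio > 1) throw new ArgumentOutOfRangeException(nameof(cacheHitRatio));
        return totalRequestsPerSecond * (1 - cacheHitRatio);
    }
}
```

For example, 12 application servers work out to three cache servers, and 50,000 requests per second with a 90% cache hit ratio leaves about 5,000 requests per second for the database.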

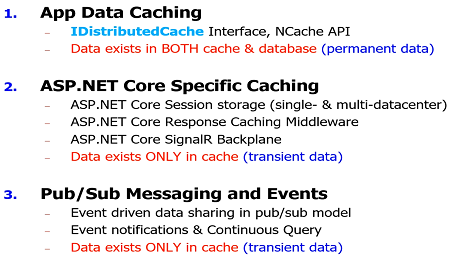

Using Distributed Cache

Okay! Let's move on. Now that I've hopefully convinced you that you need to incorporate a distributed cache in your application, the first question that comes to mind is: what do I do with it? How do I use it? There are three main categories in which you can use a distributed cache.

There are others, but for most of this discussion these three are the really high-level ones.

App Data Caching

Number one is application data caching. This is what I've been talking about up until now. Whatever data is in the database is what I'm calling application data, and you cache it so that you don't have to go to the database. Now, keep in mind that each of these use cases has its own characteristic problem. With application data caching, the data exists in two places: the cache and the database. And, whenever data exists in two places, what could go wrong? Out of sync! Yeah! If the data in the cache gets out of sync with the database, that could create a lot of problems. I could withdraw a million dollars twice, assuming I had it to begin with. That's the first problem that comes to mind.

Any distributed cache that is not able to handle that problem limits you to read-only data, what we call reference data. That's how caching was originally understood: cache read-only data, like lookup tables. But in an average application, lookup tables are only about 10% of your data (in some applications it differs). The other 90% is not lookup data; it's transactional data: your customers, your activities, your orders, everything. If you can only cache that 10%, you still get some benefit, because lookup data is probably read more than its fair share, so that 10% could give you, let's say, 30% of the benefit. But for the remaining 70% you still have to go to the database. If you could cache all the data, which you can only do once you're comfortable that the cache stays in sync with the database, then you get the real benefit.
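To make the out-of-sync risk concrete, here is a minimal sketch of application data caching in its most common form, cache-aside: check the cache first and go to the database only on a miss. A `Dictionary` stands in for the distributed cache and a delegate stands in for the database query, so `CacheAsideRepository` and its members are hypothetical, not NCache's API.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical cache-aside sketch. The Dictionary stands in for a distributed cache
// and the loader delegate stands in for a database query.
public class CacheAsideRepository<TKey, TValue>
{
    private readonly Dictionary<TKey, TValue> _cache = new Dictionary<TKey, TValue>();
    private readonly Func<TKey, TValue> _loadFromDatabase;

    public int DatabaseHits { get; private set; }

    public CacheAsideRepository(Func<TKey, TValue> loadFromDatabase)
    {
        _loadFromDatabase = loadFromDatabase;
    }

    public TValue Get(TKey key)
    {
        // 1. Try the cache first.
        if (_cache.TryGetValue(key, out var cached))
            return cached;

        // 2. On a miss, go to the database and keep a copy in the cache.
        DatabaseHits++;
        TValue value = _loadFromDatabase(key);
        _cache[key] = value;
        return value;
    }

    // After the database row changes, drop the cached copy so the next Get reloads fresh data.
    // Forgetting this step is exactly how the cache gets out of sync.
    public void Invalidate(TKey key) => _cache.Remove(key);
}
```

In this shape the application itself is responsible for invalidation; the cache features discussed later (expirations, database synchronization, read-through) move that responsibility into the cache.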

ASP.NET Core Specific Caching

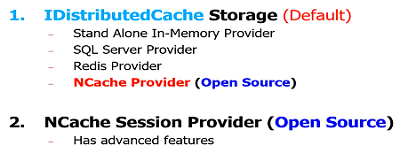

The second use case, or the second way of using a distributed cache, is what I call ASP.NET Core-specific caching, and there are three parts to it. The first is sessions, which is the most commonly understood. ASP.NET had them and ASP.NET Core has them. They're here to stay. I don't think sessions are going away, even though some people argue that we should not use them. There's no harm in using them. The only problem is where you store them. That was always the problem with ASP.NET: whatever storage options Microsoft gave you were all full of problems, so the only real option at that time was a distributed cache, which fortunately you could plug in as a custom option in the ASP.NET days. In ASP.NET Core, Microsoft provides only a standalone in-memory option and otherwise goes straight to IDistributedCache or a custom session provider, which lets you plug in a third-party distributed cache like NCache.

Sessions are the first; the second is response caching. I was going to ask who doesn't know about response caching, but that's not a good way to ask. Response caching is like a newer version of output caching, but it's more standards-based. It caches page output, and you can plug in a distributed cache as middleware for it. I'll go over it.

And the third is SignalR, if you have a live web application. A live web app is, let's say, a stock trading application that needs to constantly propagate all the stock price changes to hundreds of thousands of clients. The clients stay connected to the application tier. Unlike regular HTTP, where a connection is opened for each web request, here the socket connection stays open and events are propagated from the server. So, in SignalR, when you have high transaction volumes and a large number of users, you've got to have a load-balanced web farm. Since the sockets are kept open, every server talks to its own clients, and because each server has a different set of clients, the servers need to share data. That data is shared through a backplane, and that's where you plug in a distributed cache. I'm not going to go over the details of that one; I'll cover the first two but not the third.

Now, the specific thing about ASP.NET Core-specific caching is that the data exists only in the cache. This is what I was saying: don't put it in the database. There's no need. This is temporary data. You only need it for 20 minutes, 30 minutes, an hour, 2 hours, whatever, and after that the data needs to go away. So, if it's temporary then it should only exist in the cache, and when data only exists in an in-memory cache, what could go wrong? You could lose it, because memory is volatile. So, a good distributed cache has to handle that situation, which means it needs to replicate the data.

Pub/Sub Messaging and Events

The third use case: a lot of applications need to do workflow-type operations. Microservices are a very good example; they need to coordinate activities. Microservices are not the topic here, but even ASP.NET Core applications occasionally need to do that. So, pub/sub messaging is another use, because, again keep in mind, you already have an infrastructure in place where all of these boxes are connected to this redundant in-memory infrastructure. So, why not use it for pub/sub messaging also?
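Conceptually, pub/sub on a cache cluster means publishers and subscribers share a named topic, and every subscriber receives every published message. The minimal in-process sketch below shows only that contract; the `Topic` class is hypothetical, and a real distributed cache would deliver messages across machines through the cluster rather than through a local list of handlers.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-process stand-in for a named topic hosted by a cache cluster.
public class Topic<TMessage>
{
    private readonly List<Action<TMessage>> _subscribers = new List<Action<TMessage>>();

    public string Name { get; }

    public Topic(string name) => Name = name;

    // Each subscriber (for example, another service or web server) registers a handler.
    public void Subscribe(Action<TMessage> handler) => _subscribers.Add(handler);

    // Every subscriber receives every published message, in subscription order here.
    public void Publish(TMessage message)
    {
        foreach (var handler in _subscribers)
            handler(message);
    }
}
```

For example, an order service could publish `"order-42"` on an `"orders"` topic, and both a billing handler and a shipping handler would receive it.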

So, those are the three common use cases, the three ways you should use NCache or any distributed cache if you want to maximize the benefit.

Hands-on Demo

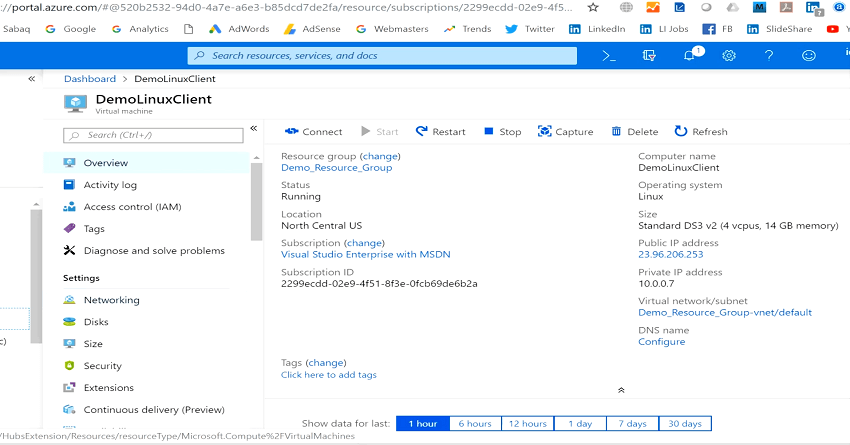

Before I go into how you actually use these, I want to quickly show you what a distributed cache looks like. I'm going to use Azure as the environment and NCache as the cache. It's just a quick demo of…

Setting Up an Environment

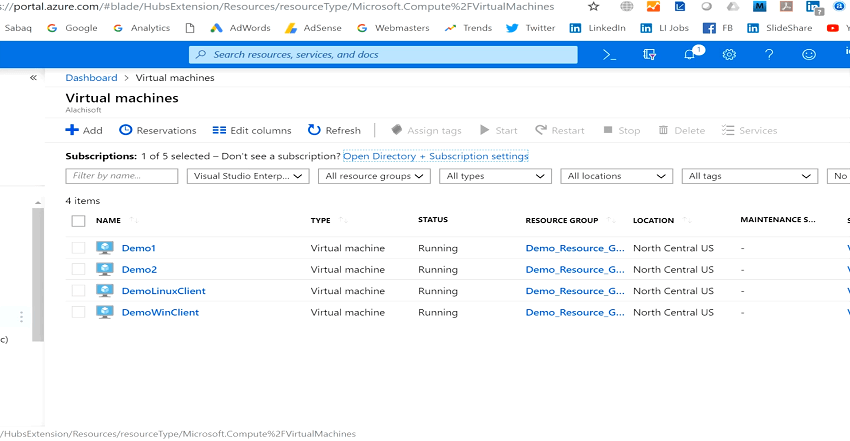

So, I'm logged into Azure. I've got four VMs, and again, everything here runs on .NET Core.

Two of them I'm going to use as cache server VMs, and the other two as clients: one Windows client and one Linux client. Since .NET Core supports Linux, if your application is .NET Core you may want to deploy it on Linux. Now, I'm not marketing NCache here, but in the case of NCache, it is .NET Core, so both the servers and the clients can run on either Windows or Linux.

Okay! I'm logged into the Windows client box, so I'm going to go ahead and create a cache for myself.

Create a Clustered Cache

I'm going to use a tool called NCache Manager. NCache Manager actually does not come with the open source edition; there's a command-line equivalent, but I'm just being lazy here. The functionality is the same. So, I launch NCache Manager and say create a new cache. You name the cache; all caches are named. Think of the name as the connection string to a database. Then you pick a topology. I'm not going to go into the topologies yet; toward the end I'll cover what a cache needs to do to handle all those needs. I'm just going to use the partition-replica cache topology with asynchronous replication. I add my first cache server, 10.0.0.4, and the second one, 10.0.0.5. So, I've got two cache servers. I'm going to keep everything else at the defaults, including evictions.
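To illustrate why a partition-replica topology protects cache-only data such as sessions, here is a conceptual C# sketch: each key is hashed to a primary server, and its replica is kept on the next server in the list, so losing one server does not lose that server's data. The hashing and placement scheme, and the `PartitionReplicaMap` name, are assumptions for illustration only; this is not NCache's actual distribution algorithm, and it does not model the asynchronous replication chosen in the demo.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical partition-replica placement (not NCache's real distribution map).
public class PartitionReplicaMap
{
    private readonly IReadOnlyList<string> _servers;

    public PartitionReplicaMap(IReadOnlyList<string> servers)
    {
        if (servers.Count < 2)
            throw new ArgumentException("Partition-replica needs at least two servers.", nameof(servers));
        _servers = servers;
    }

    // FNV-1a hash so placement is stable across processes
    // (string.GetHashCode is randomized per process in .NET Core).
    private static uint StableHash(string key)
    {
        uint hash = 2166136261;
        foreach (char c in key)
        {
            hash ^= c;
            hash *= 16777619;
        }
        return hash;
    }

    // The primary server owns the key; the replica lives on the next server in the list.
    public (string Primary, string Replica) Locate(string key)
    {
        int primary = (int)(StableHash(key) % (uint)_servers.Count);
        int replica = (primary + 1) % _servers.Count;
        return (_servers[primary], _servers[replica]);
    }
}
```

With the two demo servers, 10.0.0.4 and 10.0.0.5, every key would have its primary copy on one of them and its replica on the other.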

Now I'm going to specify two clients, 10.0.0.6 and 10.0.0.7, and then I'll start the cache. So, think of it just like a database, except that instead of one server you've got multiple servers that constitute the cluster: you create a cluster of cache servers and then you assign clients. You don't have to assign the clients, because you can just run them, but I'm doing it here because it gives you more flexibility.

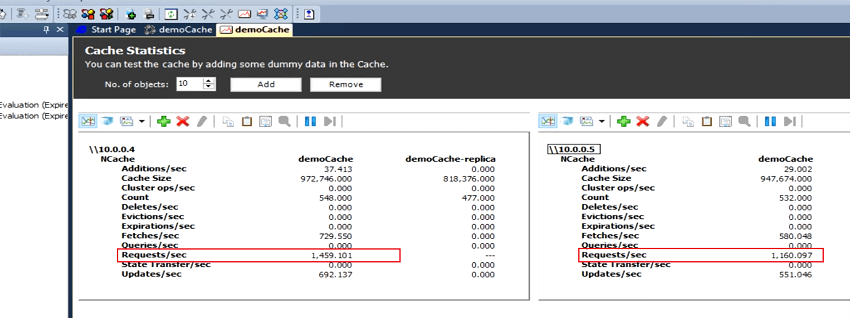

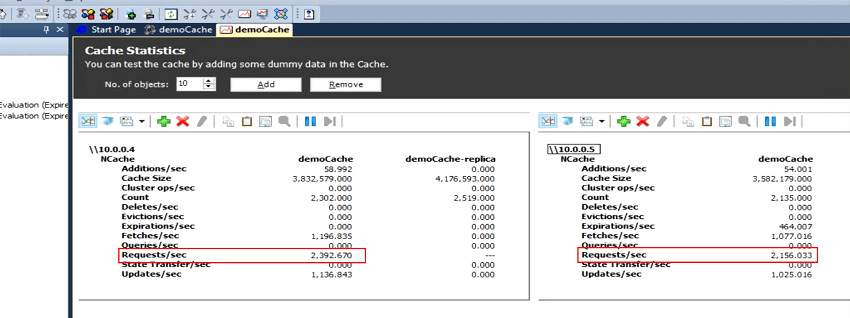

Simulate Stress and Monitor Cache Statistics

Then I want to test from the client. I'm the client, 10.0.0.6 I think; let me just make sure which one I am. I'm doing everything within the Azure virtual network, so the client, which is the application server, and the cache servers are all together. In the case of NCache, you could also get this from the Azure Marketplace. So, .6 is the Windows client. I'm going to launch PowerShell and run the stress test client. You'll see that this client talks to the cache, and now I'm doing about five to six hundred requests per second on each cache server. Let me add some more load: I'll open another PowerShell window and run another instance of the stress client. Now it goes up to more than a thousand, about thirteen hundred, per server.

Now let's add the Linux client. I've opened a command prompt here; let me log into the Linux box and start PowerShell there. I need to import the NCache client libraries into PowerShell, and then I'll run the same stress test against the cache. Before I do, as you can see, I'm doing about thirteen to fifteen hundred requests per second per server. Now I add this Linux-based client talking to the cache, and suddenly I have almost two thousand five hundred requests per second per server.

So, I'm doing about five thousand requests per second on a two-server cluster. As I said, you can add more and more load, and when you max out those two boxes, which you will, you add a third one, then a fourth one. It's as simple as that. Again, this is a simulation using a stress test tool, not a real application, so keep that in mind. The stress test program puts in a byte array, I think 1 KB of data, and does adds, updates, and gets. It simulates your actual application, and it has parameters you can change, for example the ratio of reads versus writes, as we were talking about. This tool comes with NCache, so that you can test it in your own environment and see how NCache performs before you spend a lot of time migrating your application to it.
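The workload the stress tool is described as generating (1 KB byte-array values, a mix of adds/updates and gets, and a configurable read/write ratio) can be approximated with a small C# sketch. This is not the NCache tool; `StressWorkload` and its parameters are hypothetical, and a `Dictionary` stands in for the cache client, so it shows the shape of the workload rather than real throughput.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical workload generator mimicking the described stress test:
// 1 KB byte-array values, adds/updates and gets, and a configurable read ratio.
public static class StressWorkload
{
    public const int ValueSize = 1024; // 1 KB per value

    public static (int Reads, int Writes) Run(
        IDictionary<string, byte[]> cache, int operations, double readRatio,
        int keySpace = 1000, int seed = 1)
    {
        var random = new Random(seed);
        int reads = 0, writes = 0;

        for (int i = 0; i < operations; i++)
        {
            string key = "key:" + random.Next(keySpace);
            if (random.NextDouble() < readRatio)
            {
                cache.TryGetValue(key, out _); // get
                reads++;
            }
            else
            {
                cache[key] = new byte[ValueSize]; // add if missing, update if present
                writes++;
            }
        }
        return (reads, writes);
    }
}
```

Raising `readRatio` toward 0.9 approximates the read-heavy traffic pattern the talk expects for most applications.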

ASP.NET Core Session Storage

So, now we've seen what a cache looks like, how you can add more clients, and how you can add more load on it. It's very simple. The easiest way to use a cache is to put sessions in it, and for sessions there are two things you can do.

First, you can just use an IDistributedCache provider; NCache, for example, has one. As soon as you specify NCache as the IDistributedCache, ASP.NET Core starts to use it for session storage. Let me actually show you. I've got this ASP.NET Core application, and in ConfigureServices I'm making NCache my distributed cache provider. Then I'm using standard sessions, and these standard sessions use IDistributedCache, which is now NCache. So, whatever distributed cache provider you have, that's all you have to do. As you can see, it's a very small code change, and all the sessions in your entire application immediately go into the distributed cache.

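```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.DependencyInjection;
// AddNCacheDistributedCache() is an extension method from NCache's IDistributedCache
// NuGet package; add that package and its namespace as described in the NCache docs.

public class Startup
{
    public Startup(IConfiguration configuration)
    {
        Configuration = configuration;
    }

    public IConfiguration Configuration { get; }

    // This method gets called by the runtime. Use this method to add services to the container.
    public void ConfigureServices(IServiceCollection services)
    {
        // Add framework services.
        services.AddMvc();

        // Add NCache as your IDistributedCache so sessions can use it for their storage.
        // The cache settings are read from the "NCacheSettings" configuration section.
        services.AddNCacheDistributedCache(Configuration.GetSection("NCacheSettings"));

        // Add regular ASP.NET Core sessions.
        services.AddSession();
    }

    // Sessions must also be enabled in the request pipeline, before MVC.
    public void Configure(IApplicationBuilder app)
    {
        app.UseSession();
        app.UseMvc();
    }
}
```

One of the things we've seen with many of our customers is that when they store sessions in the database and run into issues, plugging in something like NCache is instantaneous, and so is the remarkable improvement they see. It's the least amount of effort for the maximum gain. And, of course, application data caching is there too.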

So, there are multiple ways you can set up sessions. One is IDistributedCache; the second is a custom session provider, which NCache also has, and again all of these are open source. For response caching you do the same thing: you specify NCache as the distributed cache, and it becomes the cache for response caching. I'm not going to go into more detail.

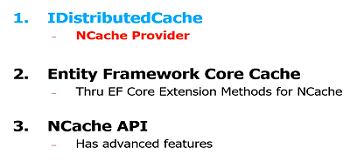

ASP.NET Core App Data Caching

I want to touch on this topic a little bit more. When you do application data caching, unlike sessions, you actually have to do API programming, unless you're using Entity Framework Core.

For example, NCache, again open source, has an EF Core provider. We've implemented extension methods for EF Core, so you can plug in NCache open source, use your regular EF Core queries, and at the end of a query call an extension method, something like "from cache" or "from database", that automatically starts caching the results. It gives you total control over what you want to cache and what you don't. Do take a look at it; it's a really powerful approach.

If you're using EF Core, which I recommend, that's one way to do it. I think for any ASP.NET Core application, all the database programming should be done through EF Core, especially since EF Core has a much nicer architecture than the old EF.

The other option is to use the IDistributedCache interface, which gives you the flexibility of not being locked into one caching solution, but it comes at a cost. It's very basic: there's Get and Set, well, and Remove (plus Refresh), and that's about it. All the features we're talking about, like keeping the cache synchronized with the database, you lose. So, if you want to benefit from those features, you've got to use an API that actually supports them. And since you're committing to an open source cache, it's usually easier to accept that. The application data caching API itself is very straightforward: there's a key and there's a value, and the value is your object.

Keeping Cache Fresh

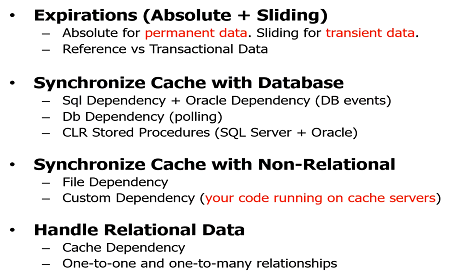

Now, let me come to the topic of what allows you to keep the cache fresh. This is the really valuable part, right? What do you do?

Well, the first thing almost every distributed cache does is expirations. You use absolute expiration: whatever you put in the cache, you say remove it from the cache five minutes from now. You're saying it's safe to keep for five minutes, because you believe that for five minutes you're the only one updating it, and after that other people might. NCache has it, and everybody else has it too. Then there's sliding expiration for transient data like sessions: if an item isn't touched for a certain amount of time after you're done using it, it expires. For example, after 20 minutes of user inactivity the session expires. Expirations: pretty much everybody has them.
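The difference between absolute and sliding expiration is easiest to see with an injectable clock. This minimal sketch is hypothetical (`ExpiringCache` is not NCache's API); it expires items lazily when they are read, whereas a real cache would also clean them up in the background.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch of absolute and sliding expiration (not NCache's API).
// The clock is injected so the behavior can be tested without waiting.
public class ExpiringCache
{
    private class Entry
    {
        public object Value;
        public DateTime? AbsoluteExpiry; // fixed removal time
        public TimeSpan? Sliding;        // allowed idle time
        public DateTime LastAccess;
    }

    private readonly Dictionary<string, Entry> _items = new Dictionary<string, Entry>();
    private readonly Func<DateTime> _now;

    public ExpiringCache(Func<DateTime> now) => _now = now;

    // Absolute: remove at a fixed time, e.g. five minutes from now.
    public void AddAbsolute(string key, object value, TimeSpan lifetime) =>
        _items[key] = new Entry { Value = value, AbsoluteExpiry = _now() + lifetime, LastAccess = _now() };

    // Sliding: remove only after the item has not been touched for the given interval.
    public void AddSliding(string key, object value, TimeSpan idleTimeout) =>
        _items[key] = new Entry { Value = value, Sliding = idleTimeout, LastAccess = _now() };

    public bool TryGet(string key, out object value)
    {
        value = null;
        if (!_items.TryGetValue(key, out var entry)) return false;

        DateTime now = _now();
        bool expired = (entry.AbsoluteExpiry.HasValue && now >= entry.AbsoluteExpiry.Value)
                    || (entry.Sliding.HasValue && now - entry.LastAccess >= entry.Sliding.Value);
        if (expired)
        {
            _items.Remove(key); // lazily evict on read
            return false;
        }

        entry.LastAccess = now; // each access pushes a sliding expiration further out
        value = entry.Value;
        return true;
    }
}
```

With a five-minute absolute expiration, a product read at minute four is still cached but is gone at minute six; a session with a 20-minute sliding expiration survives as long as it keeps getting touched.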

Next is synchronizing the cache with the database. This feature allows the cache to monitor your database. For example, when you add items to NCache, you can attach a SQL dependency, which lets NCache monitor SQL Server. SqlDependency is a SQL Server ADO.NET feature that we use: you specify a SQL statement or stored procedure, and NCache monitors that specific data set in your SQL Server database. If anything changes in that data set, SQL Server notifies NCache, and NCache removes that data from the cache, or, if you combine synchronization with the read-through feature, reloads it. So, let's say you cached a customer object with a SQL dependency, and that customer changed in the database; customers are transactional data, so they change frequently. With read-through, the cache automatically reloads it upon either expiration or database synchronization.

So now, suddenly, your cache is responsible for monitoring the data. There are three ways to do that: SQL dependency, DB dependency, and CLR stored procedures. I'm not going to go into the details; you can read about them on our website. The most important point is that if your cache doesn't have database synchronization as a feature, you're limited to read-only, static data, and that limits the whole thing. You can also synchronize the cache with non-relational data sources.
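The combination of database synchronization and read-through can be sketched as follows. The change notification that SqlDependency would deliver is simulated here by calling `OnDatabaseChanged` directly, and the read-through handler is just a delegate; `SyncedReadThroughCache` and its members are hypothetical, not NCache's API.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch of database synchronization combined with read-through.
// OnDatabaseChanged simulates the change notification a database dependency would deliver.
public class SyncedReadThroughCache<TValue>
{
    private readonly Dictionary<string, TValue> _items = new Dictionary<string, TValue>();
    private readonly Func<string, TValue> _readThrough; // loads an item from the data source
    private readonly bool _reloadOnChange;

    public SyncedReadThroughCache(Func<string, TValue> readThrough, bool reloadOnChange)
    {
        _readThrough = readThrough;
        _reloadOnChange = reloadOnChange;
    }

    // Read-through: on a miss the cache itself loads the item, not the application.
    public TValue Get(string key)
    {
        if (!_items.TryGetValue(key, out var value))
        {
            value = _readThrough(key);
            _items[key] = value;
        }
        return value;
    }

    public bool Contains(string key) => _items.ContainsKey(key);

    // Called when the database reports that the data behind this key changed.
    public void OnDatabaseChanged(string key)
    {
        if (_reloadOnChange)
            _items[key] = _readThrough(key); // reload the fresh copy
        else
            _items.Remove(key);              // or simply drop the stale copy
    }
}
```

Without `reloadOnChange`, the stale customer is removed and reloaded on the next read; with it, the fresh copy is already in the cache when the next request arrives.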

The third thing is relationships. Most of the time you are going to be caching relational data, which has one-to-many and one-to-one relationships. Let's say one customer has multiple orders. If you remove the customer, the orders should not stay in the cache either, because maybe you've deleted them from the database. If the cache doesn't handle that, your application has to. The ASP.NET Cache object has a feature called key dependency, and NCache has implemented it: it allows you to define a one-to-many or one-to-one relationship between cache items so that if one item is updated or removed, the dependent items are automatically removed. So, those four things combined allow you to make sure that your cache stays fresh and consistent with the database, and this is what allows you to start caching transactional data too. So, that's the first benefit.
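A minimal sketch of key dependency, assuming the behavior described above: when a master item (the customer) is updated or removed, the items that depend on it (the orders) are removed automatically, cascading through multiple levels. `DependencyCache` is hypothetical and not the ASP.NET Cache or NCache API.

```csharp
using System.Collections.Generic;

// Hypothetical key-dependency sketch: updating or removing a master item
// also removes the items that depend on it.
public class DependencyCache
{
    private readonly Dictionary<string, object> _items = new Dictionary<string, object>();
    private readonly Dictionary<string, HashSet<string>> _dependents = new Dictionary<string, HashSet<string>>();

    public void Add(string key, object value, string dependsOn = null)
    {
        _items[key] = value;
        if (dependsOn != null)
        {
            if (!_dependents.TryGetValue(dependsOn, out var set))
                _dependents[dependsOn] = set = new HashSet<string>();
            set.Add(key);
        }
    }

    public bool Contains(string key) => _items.ContainsKey(key);

    // Updating a master invalidates its dependents, since they may no longer be consistent.
    public void Update(string key, object value)
    {
        RemoveDependents(key);
        _items[key] = value;
    }

    public void Remove(string key)
    {
        _items.Remove(key);
        RemoveDependents(key);
    }

    private void RemoveDependents(string key)
    {
        if (_dependents.TryGetValue(key, out var set))
        {
            _dependents.Remove(key); // detach first so cycles cannot recurse forever
            foreach (var dependent in set)
                Remove(dependent);   // cascades through multi-level dependencies
        }
    }
}
```

Removing `customer:1` here also drops `order:10` and `order:11`, which is the one-to-many case from the talk.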

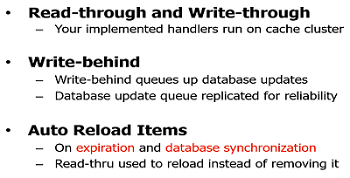

Read-thru & Write-thru

The second is that if you can use read-through and write-through, it simplifies your application.

Read-through, as I said, can be combined with expirations and database synchronization for auto-reload, which your application would not be able to do without read-through. With write-through, you update the cache and the cache updates the database, and especially with write-behind, since we're talking performance, right? All updates have to be made to the database, and updates are slow compared to reads from the cache. So, what if you could delegate the updates to the cache and say: I'm going to update the cache, why don't you go and update the database for me? That's safe because we're working with the eventual consistency model, which is what distributed systems are all about: you become more lenient on consistency and go with eventual consistency. Even though at this point in time the data isn't consistent, because the cache is updated and the database isn't, within a few milliseconds the database will be updated. Some data cannot afford that, but a lot of data can.

So, with write-behind, suddenly your updates are also super-fast: your application doesn't have to incur the cost of updating the database. The cache does, and because it's a distributed cache it can absorb that and apply the updates as a batch. So, once you start doing this, you can cache a lot more data.
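The difference between write-through and write-behind can be sketched with a `Dictionary` standing in for the database. In write-through mode the "database" is updated synchronously; in write-behind mode updates are queued and applied later as a batch, so the cache and database are briefly out of step (eventual consistency). `WriteBehindCache` is hypothetical; a real cache would flush the queue in the background rather than through an explicit call.

```csharp
using System.Collections.Generic;

// Hypothetical write-through vs. write-behind sketch (not NCache's API).
// A Dictionary stands in for the database.
public class WriteBehindCache
{
    private readonly Dictionary<string, string> _cache = new Dictionary<string, string>();
    private readonly Dictionary<string, string> _database;
    private readonly Queue<KeyValuePair<string, string>> _pending = new Queue<KeyValuePair<string, string>>();
    private readonly bool _writeBehind;

    public WriteBehindCache(Dictionary<string, string> database, bool writeBehind)
    {
        _database = database;
        _writeBehind = writeBehind;
    }

    public int PendingWrites => _pending.Count;

    public void Update(string key, string value)
    {
        _cache[key] = value; // the application only waits for the in-memory update
        if (_writeBehind)
            _pending.Enqueue(new KeyValuePair<string, string>(key, value));
        else
            _database[key] = value; // write-through: database updated synchronously
    }

    public string Get(string key) => _cache[key];

    // In a real cache this runs in the background; here it is called explicitly.
    public int FlushBatch()
    {
        int written = 0;
        while (_pending.Count > 0)
        {
            var change = _pending.Dequeue();
            _database[change.Key] = change.Value;
            written++;
        }
        return written;
    }
}
```

Until `FlushBatch` runs, reads from the cache already see the new value while the database still holds the old one, which is the window the eventual consistency model accepts.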

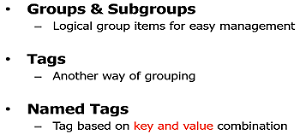

Data Grouping

Once you cache more and more data, your cache becomes almost as rich as a database, which means you are caching almost all the data.

When you cache almost all the data, key-value access is not enough to find things. You've got to be able to search. AppFabric used to have groups and subgroups, tags, and named tags, and by the way, NCache open source has an AppFabric wrapper, so those of you on AppFabric can move to NCache for free. Grouping lets you fetch related data easily.
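Grouping with tags can be sketched as a secondary index from each tag to the keys that carry it, so related items can be fetched without knowing their keys. `TaggedCache` is hypothetical and does not reproduce NCache's or AppFabric's tag API.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical tag-based grouping sketch: items carry tags, and all items
// with a tag can be fetched without knowing their keys.
public class TaggedCache
{
    private readonly Dictionary<string, object> _items = new Dictionary<string, object>();
    private readonly Dictionary<string, HashSet<string>> _keysByTag = new Dictionary<string, HashSet<string>>();

    public void Add(string key, object value, params string[] tags)
    {
        _items[key] = value;
        foreach (var tag in tags)
        {
            if (!_keysByTag.TryGetValue(tag, out var keys))
                _keysByTag[tag] = keys = new HashSet<string>();
            keys.Add(key); // secondary index: tag -> keys
        }
    }

    // Returns every cached value carrying the tag (order not guaranteed).
    public IReadOnlyList<object> GetByTag(string tag) =>
        _keysByTag.TryGetValue(tag, out var keys)
            ? keys.Where(k => _items.ContainsKey(k)).Select(k => _items[k]).ToList()
            : new List<object>();
}
```

Tagging every customer item with `"customers"` lets you pull them all back in one call instead of tracking individual keys.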

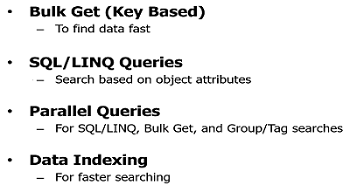

Finding Data

SQL searching allows you to find things like: give me all my customers where the city is New York. So, now you're doing database-type queries in the cache. Why? Because your cache now contains a lot of data.
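The New York example above can be illustrated with LINQ over an in-memory collection of cached objects. This is a stand-in for the idea only, not NCache's query syntax; `Customer` and `CacheSearch` are hypothetical, and a real distributed cache would evaluate such a query on the cache servers, typically with indexes on the queried attributes.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical cached object.
public class Customer
{
    public string Id { get; set; }
    public string Name { get; set; }
    public string City { get; set; }
}

// Stand-in for an attribute query against cached objects (LINQ, not NCache's SQL syntax).
public static class CacheSearch
{
    // Equivalent in spirit to: SELECT * FROM Customer WHERE City = 'New York'
    public static List<Customer> CustomersInCity(IEnumerable<Customer> cachedCustomers, string city) =>
        cachedCustomers.Where(c => c.City == city).ToList();
}
```

The point is the shift in access pattern: once most of the data is cached, you query it by attributes rather than fetching items one key at a time.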

The whole paradigm is shifting because you're going more and more in-memory. Again, the database is the master; the cache still only holds the data for a temporary period of time.