The rise of container technology has revolutionized application development, with Kubernetes, an open-source platform that orchestrates containers across multiple machines, leading the charge. Red Hat OpenShift is one such Kubernetes-based platform, and it lets you auto-scale cloud applications. Its application containers are powered by Docker, and it relies on Kubernetes for orchestration and management, providing an integrated deployment architecture for managing those containers. This simple container architecture is a large part of why OpenShift is gaining popularity.

One product that seamlessly integrates with OpenShift is NCache. As an in-memory distributed caching solution, NCache enhances performance and scalability by reducing your network trips and the database load as your data resides in the cache, closer to your application. This article focuses on the steps required to deploy NCache within Red Hat OpenShift, ensuring optimal performance and scalability for your applications.

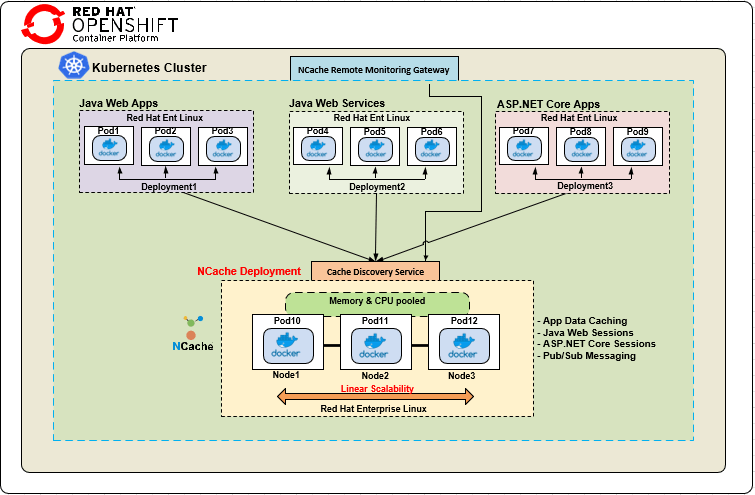

NCache Deployment Architecture in Red Hat OpenShift

Using NCache, you can optimize your OpenShift cloud orchestration with advanced features in an easy-to-manage container setup. You can start with a single Kubernetes cluster, deploying various applications and, importantly, the NCache cluster.

Currently, the Docker-based container applications running in the environment are:

- Java Web application

- Java Web Services application

- ASP.NET Core application

These applications have the NCache client installed. The Java applications use the NCache Java client, whereas the ASP.NET Core application is deployed separately from a Linux-based Docker image and uses the .NET Core client to communicate with NCache. On the server side, the deployment uses the Linux-based Docker image for NCache available on Docker Hub.

The applications connect to a headless service called the Cache Discovery Service within Kubernetes. It manages routing and resource allocation within the NCache cluster. Similarly, a remote monitoring gateway also connects to this service, allowing you to monitor the cache cluster from outside Kubernetes for operations like cache management.

In Kubernetes, the unit of deployment is the pod, and IP addresses are assigned to pods rather than to containers. A pod is a Kubernetes object that wraps the underlying container instance, acting as a virtual layer on top of it. A single pod can hold multiple containers, but the recommendation is one container per pod. In short, resource allocation in a Kubernetes cluster happens at the pod level rather than the container level.

The following diagram gives an overall depiction of the architectural flow of the NCache deployment:

Figure 1: NCache Deployment in Red Hat OpenShift

Step 1: Deploy NCache Servers

Deploying NCache servers in Red Hat OpenShift requires you to create a YAML file with your NCache configurations. These YAML deployments contain all your application components and are easy to deploy. Make sure to adjust these components according to your requirements. Given below is the sample YAML file with the configurations:

```yaml
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: ncache
  labels:
    app: ncache
spec:
  replicas: 2
  template:
    metadata:
      labels:
        app: ncache
    spec:
      containers:
      - name: ncache
        image: docker.io/alachisoft/ncache:latest
        ports:
        - name: management-tcp
          containerPort: 8250
        - name: management-http
          containerPort: 8251
        - name: client-port
          containerPort: 9800
```

Kubernetes evolves quickly, and new features usually start in alpha or beta API groups before they graduate to a stable API version; experimental features may never get that far. Set the “apiVersion” to a version your cluster actually serves. The sample above uses “apps/v1beta1”, which only works on older clusters: Kubernetes removed the beta Deployment APIs in version 1.16, and current clusters expect “apps/v1”, which also requires a “spec.selector” that matches the pod template labels. So, ensure you are not using an obsolete version.
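For clusters that no longer serve “apps/v1beta1”, the same Deployment can be written against “apps/v1”. The following is a minimal sketch rather than an official NCache manifest: it builds the manifest as a Python dictionary and prints it as JSON, which `oc apply -f` accepts alongside YAML. The function name `ncache_deployment` is hypothetical; the image, labels, and ports are copied from the sample above.

```python
import json


def ncache_deployment(replicas: int = 2) -> dict:
    """Build an apps/v1 Deployment equivalent to the sample NCache manifest."""
    labels = {"app": "ncache"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "ncache", "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # apps/v1 requires an explicit selector matching the template labels.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": "ncache",
                            "image": "docker.io/alachisoft/ncache:latest",
                            "ports": [
                                {"name": "management-tcp", "containerPort": 8250},
                                {"name": "management-http", "containerPort": 8251},
                                {"name": "client-port", "containerPort": 9800},
                            ],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Redirect the output to a file and import it like any other manifest.
    print(json.dumps(ncache_deployment(), indent=2))
```

The only structural difference from the beta manifest is the `selector` block; everything else carries over unchanged.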

The ports mentioned in the deployment file include the following:

- Port 8250: For TCP management.

- Port 8251: For HTTP management and monitoring.

- Port 9800: For communication between the client applications connecting to NCache.

First, we set the “kind” tag to Deployment. Next comes the number of replicas (2 in this case), which you can increase according to your requirements. For further detail on pod replicas, see the Kubernetes documentation. For containers, you need to specify the Docker image by providing the path of the Docker Linux Server image for NCache Enterprise available on Docker Hub. The general command to pull this Docker image is:

```shell
docker pull alachisoft/ncache:latest
```

Once you create the YAML file with all the necessary configurations, you need to import the file using the OpenShift web console. Create a new project with a name of your choice and import the YAML file into the project containing the NCache deployments. You can also use the OpenShift CLI tool, which shows the status of the deployments.
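The CLI path mentioned above can be scripted. The sketch below assembles the relevant `oc` commands (`oc new-project`, `oc apply -f`, and `oc rollout status`) and only runs them if `oc` is found on the PATH. The project name `ncache-demo` and the file name `ncache-deployment.yaml` are placeholders, not values from this article.

```python
import shutil
import subprocess


def deploy_commands(project: str, manifest: str, deployment: str = "ncache") -> list[list[str]]:
    """Return the oc commands that create a project, apply a manifest, and wait for rollout."""
    return [
        ["oc", "new-project", project],
        ["oc", "apply", "-f", manifest, "-n", project],
        ["oc", "rollout", "status", f"deployment/{deployment}", "-n", project],
    ]


def run(commands: list[list[str]]) -> None:
    """Run each command in order, stopping at the first failure."""
    if shutil.which("oc") is None:
        raise RuntimeError("the oc CLI was not found on PATH")
    for cmd in commands:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Print the commands instead of running them; call run(...) against a real cluster.
    for cmd in deploy_commands("ncache-demo", "ncache-deployment.yaml"):
        print(" ".join(cmd))
```

`oc rollout status` blocks until the Deployment's pods are ready, which is the status check the web console would otherwise show.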

Step 2: Create Cache Discovery Service

As previously established, the Cache Discovery Service is responsible for routing all the NCache communication to underlying pods. Serving as the central communication gateway, this headless service routes client application requests to the NCache cache clusters. It retrieves the IP addresses of the underlying NCache server pods in the Kubernetes cluster, ensuring seamless interaction between client applications and the cache servers.

To create such a service, you have to build another YAML, as demonstrated below:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: cacheserver
  labels:
    app: cacheserver
spec:
  clusterIP: None
  sessionAffinity: ClientIP
  selector:
    app: ncache
  ports:
  - name: management-tcp
    port: 8250
    targetPort: 8250
  - name: client-port
    port: 9800
    targetPort: 9800
```

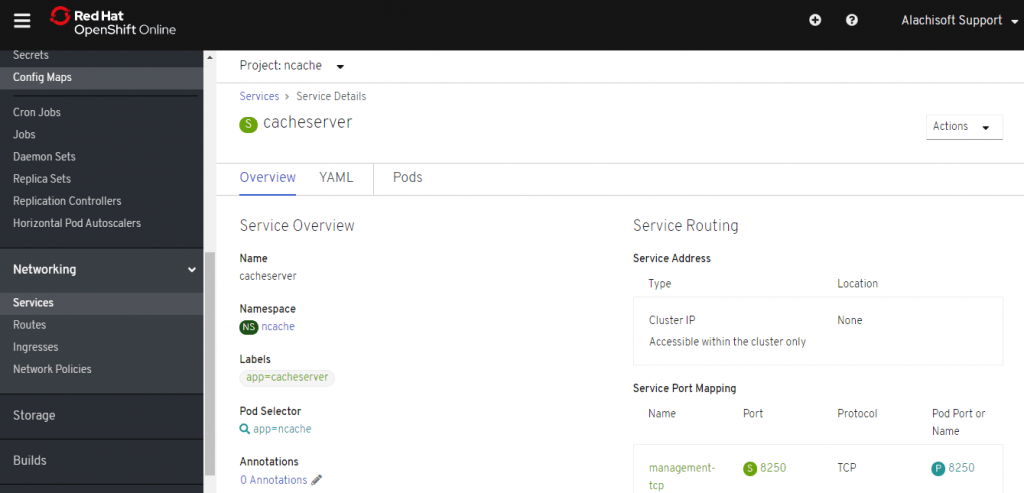

It is named cacheserver here, but you can rename it according to your specific configurations. In this case, the “kind” is service. Furthermore, it contains ports with the name and port numbers necessary for communication along with the discovery service. The “sessionAffinity” is set to the ClientIP – ensuring that the management and monitoring operations outside the Kubernetes cluster are consistently directed to one of the pods at the given time. After creating the YAML file, import this file through the wizard, and it automatically builds your Cache Discovery Service, as shown in the image below.

Figure 2: Create Cache Discovery Service
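Because the service is headless (`clusterIP: None`), a DNS lookup of its name returns the IP addresses of the matching pods rather than a single virtual IP. The sketch below shows that lookup from inside a pod in the same project, using only the Python standard library. The helper name `resolve_pod_ips` is illustrative, and this demonstrates standard Kubernetes DNS behavior, not NCache's own discovery code.

```python
import socket


def resolve_pod_ips(service_name: str, port: int) -> list[str]:
    """Return the unique IPv4 addresses that a DNS name resolves to."""
    infos = socket.getaddrinfo(
        service_name, port, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr is (ip, port).
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    # "cacheserver" only resolves inside the cluster, in the service's own namespace.
    try:
        print(resolve_pod_ips("cacheserver", 9800))
    except socket.gaierror as exc:
        print(f"lookup failed: {exc}")
```

With two NCache replicas running, the lookup would be expected to return two addresses, one per pod.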

Step 3: Create a Management Gateway

This step sets up management and monitoring from outside the Kubernetes cluster. Management operations are routed through this gateway to the Cache Discovery Service, which lets you manage and monitor all the underlying pods as well.

To create the management gateway:

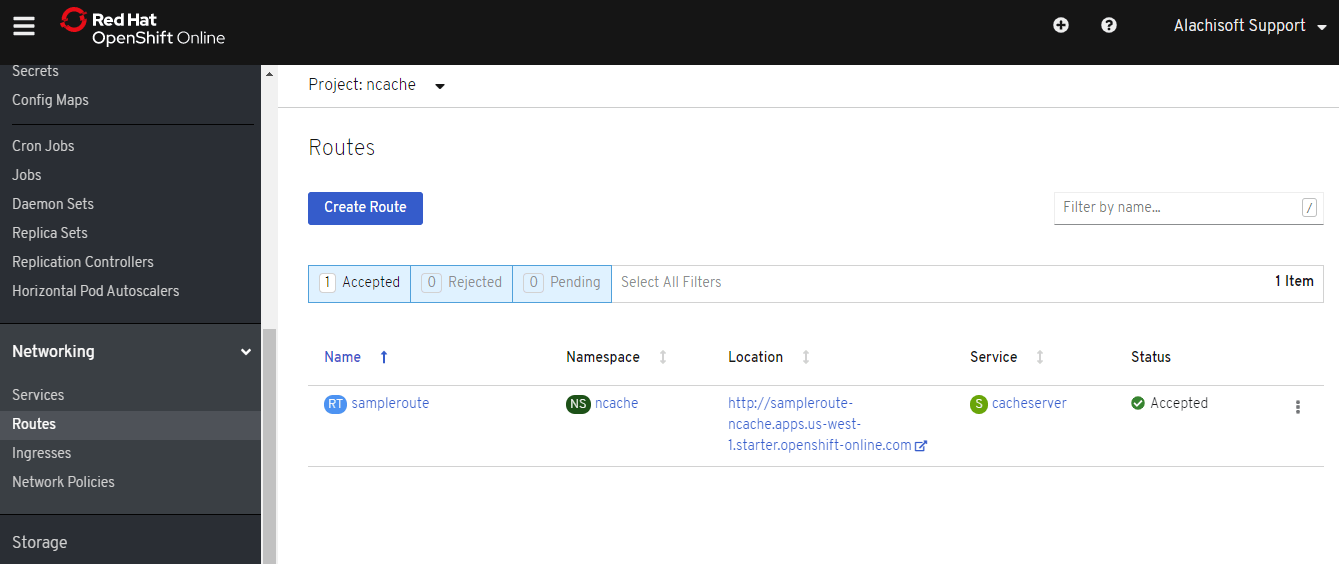

- Go to the “Networking” section of the OpenShift portal.

- Choose a “Route” from the drop-down menu.

- Create a route to the headless service – a.k.a, the Cache Discovery Service.

- Provide a name for the route and select the service “cacheserver” created in the previous step. Additionally, provide the target port 8251 for management and monitoring outside the Kubernetes cluster.

Figure 3: Create a Management Gateway

5. Once created, select the location path that redirects to the NCache Management Center on one of the cache server pods for the “Location”.
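The same route can also be declared as a manifest instead of through the console. The sketch below builds an OpenShift `Route` object (`route.openshift.io/v1`) that points at the “cacheserver” service and prints it as JSON. It assumes the service exposes port 8251 under the name `management-http`. The service manifest shown earlier lists only ports 8250 and 9800, so that port entry would likely need to be added first. The route name `ncache-management` is hypothetical.

```python
import json


def management_route(name: str, service: str, target_port: str) -> dict:
    """Build a Route that forwards external traffic to one port of a service."""
    return {
        "apiVersion": "route.openshift.io/v1",
        "kind": "Route",
        "metadata": {"name": name},
        "spec": {
            # The service the router sends traffic to.
            "to": {"kind": "Service", "name": service},
            # A named port (or port number) from the service's port list.
            "port": {"targetPort": target_port},
        },
    }


if __name__ == "__main__":
    route = management_route("ncache-management", "cacheserver", "management-http")
    print(json.dumps(route, indent=2))
```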

Step 4: Create a Cache Cluster

Now that we have successfully deployed NCache in Red Hat OpenShift, we can create a cache cluster using the NCache Management Center.

Create the cache cluster following the steps in the documentation, and make sure the server IPs you enter are the IPs of your cache pods. To get the IPs of the cache pods, go to the “Pods” section of the OpenShift web console or use the command-line tool. Once the cache creation process is complete, start the cache using the Management Center.
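On the command line, `oc get pods -o wide` shows each pod's IP, and `oc get pods -l app=ncache -o json` returns the same data in machine-readable form. The sketch below extracts the pod IPs from that JSON output. The function name `pod_ips` is illustrative, and the sample pod names and addresses are made up.

```python
import json


def pod_ips(pods_json: str) -> dict[str, str]:
    """Map pod name to pod IP from the output of `oc get pods -o json`."""
    items = json.loads(pods_json).get("items", [])
    return {
        item["metadata"]["name"]: item["status"]["podIP"]
        for item in items
        if item.get("status", {}).get("podIP")  # pods still pending have no IP yet
    }


if __name__ == "__main__":
    # Made-up sample shaped like the output of `oc get pods -l app=ncache -o json`.
    sample = json.dumps(
        {
            "items": [
                {"metadata": {"name": "ncache-a"}, "status": {"podIP": "10.128.0.11"}},
                {"metadata": {"name": "ncache-b"}, "status": {"podIP": "10.128.0.12"}},
                {"metadata": {"name": "ncache-c"}, "status": {}},
            ]
        }
    )
    for name, ip in pod_ips(sample).items():
        print(name, ip)
```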

Step 5: Deploy Client Applications

You can now deploy and run your client applications by creating a YAML file containing the deployment for clients. We import this deployment file using the OpenShift portal. The client applications can be .NET Core or Java as required.

```yaml
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: clientapp
  labels:
    app: clientapp
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: clientapp
    spec:
      containers:
      - name: clientapp
        image: your-client-application-repo-path
        ports:
        - name: management-tcp
          containerPort: 8250
```

Here, to connect to the cache, we do not need the IP addresses of the cache pods. The Cache Discovery Service we created with the name “cacheserver” provides the IP addresses of the cache pods to our client application at runtime. The NCache client has built-in logic to talk to this named service and automatically discover all the underlying resources within the OpenShift Kubernetes platform.

Hence, given only the name of the service, the NCache client can connect to every server in the cache cluster.

Step 6: Monitoring NCache Cluster

NCache comes with various tools to help you monitor your cache cluster. Monitoring your cache cluster gives you real-time information about cluster health, cache activity, the number of operations taking place, and much more. You can also monitor your cache cluster to take suitable measures for network disruption, memory overheads, etc.

The NCache Management Center is a management tool provided by NCache to configure the caches and monitor their performance. Similarly, the NCache Monitor is a web management tool that lets you monitor the real-time cache performance.

Step 7: Scaling NCache Cluster

NCache is a distributed caching system with a very scalable architecture. So, to achieve an enhanced capacity and functionality for NCache in your OpenShift environment, you can scale your NCache cluster by adding more pods. There are multiple ways of getting this done. Beginning with the OpenShift web portal:

- Go to “Deployments”.

- Click the “Edit Count” button.

- Increase the number of pods by clicking the “+” button.

These steps automatically increase the replica count in your deployment to match the number of pods added. You can do the same with the OpenShift CLI (oc) tool. Note that increasing the count only creates new pods; a new pod does not become part of the cache cluster by itself. To add it to a running cache cluster, go to the Server Nodes page in the NCache Management Center and add the pod’s IP as a server node. The node joins the cache cluster at runtime, so you gain capacity without stopping the cache.
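From the CLI, the replica count can be changed with `oc scale`. Since new pods do not join the cache cluster automatically, it also helps to know which pod IPs still need to be added in the Management Center. The sketch below covers both pieces; the function names and the sample addresses are made up for illustration.

```python
def scale_command(deployment: str, replicas: int) -> list[str]:
    """Return the oc command that sets a Deployment's replica count."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return ["oc", "scale", f"deployment/{deployment}", f"--replicas={replicas}"]


def pods_to_add(pod_ips: set[str], cluster_server_ips: set[str]) -> list[str]:
    """Return pod IPs that are running but not yet server nodes in the cache cluster."""
    return sorted(pod_ips - cluster_server_ips)


if __name__ == "__main__":
    print(" ".join(scale_command("ncache", 3)))
    running = {"10.128.0.11", "10.128.0.12", "10.128.0.13"}  # made-up pod IPs
    in_cluster = {"10.128.0.11", "10.128.0.12"}  # servers already in the cache cluster
    print(pods_to_add(running, in_cluster))
```

Any address returned by `pods_to_add` is a pod that exists in OpenShift but still has to be added on the Server Nodes page.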

Conclusion

Essentially, NCache deployment in Red Hat OpenShift is an easy-to-follow procedure that leverages the benefits of containerization. Containers have become a practical necessity because of how lightweight they are. NCache is an extremely fast distributed caching solution, and with Red Hat OpenShift, you can easily manage your containerized Kubernetes cluster. So, step into the world of NCache to run your applications on the Kubernetes cluster with just a few easy steps.