Around the world, the shift from virtual machines to containers has made applications perform more consistently and reliably across different computing environments. Containers package software code together with all its dependencies so that it behaves the same on any infrastructure. Amazon, like many other cloud providers, is rapidly maturing its Elastic Kubernetes Service (EKS) to meet the growing computing needs of AWS customers.

Although Amazon Elastic Kubernetes Service is a fully managed, secure, and reliable Kubernetes service, managing its clusters still takes a fair amount of manual configuration. To keep your application performing well in such an environment, you can use NCache. NCache is an in-memory caching solution that boosts your application’s performance and improves scalability by reducing latency in your EKS cluster.

NCache Details Container Deployments NCache EKS Docs

NCache Deployment Architecture in Elastic Kubernetes Service

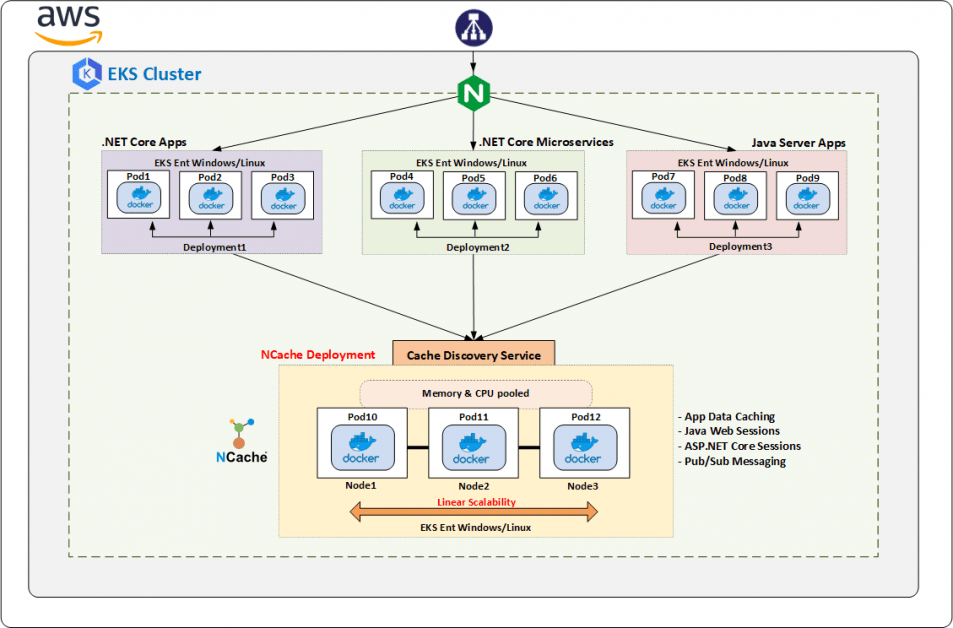

The basic structure of how NCache fits into your EKS cluster is very simple. You have a classic load balancer on the AWS cloud that routes HTTP requests to an ingress controller running within the EKS cluster. Inside this cluster, you have your cache cluster running NCache servers inside multiple pods. These pods are mapped to a Cache Discovery Service that allows client access to the cluster pods that are running the cache service. You can have multiple applications deployed on multiple pods and they will all be connected to the cache cluster through this Cache Discovery service.

Inside this EKS cluster, you also have an NCache Remote Monitoring Gateway service. This is an NGINX Ingress Controller that provides the load balancer configuration for routing traffic to specific pods with sticky sessions enabled. The rest of the cluster comprises various client applications, each in its own deployment.

The flow of requests and the structure of an EKS cluster with NCache deployed in it are shown in Figure 1.

Figure 1: NCache Deployment in Elastic Kubernetes Service

So, without any further delay, let me take you through a step-by-step easy guide to how you can deploy NCache inside your AWS Elastic Kubernetes cluster.

Step 1: Create NCache Resources

To use everything NCache offers inside your Amazon Kubernetes cluster, the first step is to deploy the NCache resources in EKS. Once NCache is deployed, you can perform all management operations in your cluster.

You can deploy NCache with the help of certain YAML files. Each of these files contains specific information that plays its part in the seamless working of NCache inside EKS. These files are:

- NCache Deployment file: This file contains the actual specification of the required pods and images that need to be used. This information includes the replica count, image repository, ports required, etc.

- NCache Service file: This file builds a service on top of the deployment. The main purpose of this file is to expose the deployment from the server.

- NCache Ingress file: This file contains the information needed to create a sticky session between a client application and Web Manager running inside the Kubernetes cluster.
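The Ingress file is the least obvious of the three, so here is a rough sketch of what one with sticky sessions might look like. It is not the file from the NCache docs: the names `ncache-ingress`, `ncache-route`, and `ncache-service` are hypothetical, and it assumes an NGINX ingress class called `nginx` exists in your cluster. The helper `write_ncache_ingress` is likewise just an illustrative name.

```shell
# Write a sketch of a sticky-session Ingress manifest to the given path.
# All resource names inside the manifest are placeholders (assumptions).
write_ncache_ingress() {
  # $1 = output path for the manifest
  cat > "$1" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ncache-ingress                  # hypothetical name
  annotations:
    # Cookie-based affinity keeps a browser session on the same pod.
    nginx.ingress.kubernetes.io/affinity: "cookie"
    nginx.ingress.kubernetes.io/session-cookie-name: "ncache-route"  # hypothetical
spec:
  ingressClassName: nginx               # assumes this ingress class exists
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: ncache-service    # hypothetical; use your NCache Service name
                port:
                  number: 8251          # management-http port
EOF
}
```

You could call `write_ncache_ingress ncache-ingress.yaml` and then create the resource with `kubectl create -f` like any other file in this step.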

These three YAML files are the basics you need to deploy NCache services inside your EKS cluster. Of the three, the most important is the NCache Deployment YAML file, which looks like this:

```yaml
kind: Deployment
# apps/v1beta1 was removed in Kubernetes 1.16; apps/v1 requires spec.selector
apiVersion: apps/v1
metadata:
  name: ncache-deployment
  labels:
    app: ncache
spec:
  replicas: 2
  selector:
    matchLabels:
      app: ncache          # must match the pod template labels below
  template:
    metadata:
      labels:
        app: ncache
    spec:
      nodeSelector:
        "beta.kubernetes.io/os": linux
      containers:
      - name: ncache
        image: docker.io/alachisoft/ncache:enterprise-server-linux-5.0.2
        ports:
        - name: management-tcp
          containerPort: 8250
        - name: management-http
          containerPort: 8251
        - name: client-port
          containerPort: 9800
```

Once you create this deployment by running the following kubectl command, Kubernetes creates exactly as many pods as the replicas key specifies. Each of these pods runs one container. The image key tells Kubernetes which image to create that container from; in this case, it is the path to the NCache Enterprise server image on Docker Hub. The ports key lists all the ports that need to be exposed for NCache services to function in the cluster.

```shell
kubectl create -f [dir]/filename.yaml
```
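After the create command returns, it can help to confirm that the pods actually came up. Here is a minimal sketch, assuming the deployment name `ncache-deployment` and the label `app: ncache` from the manifest above. The helper name `check_ncache_rollout` and the 120-second timeout are illustrative choices, and the optional argument is the namespace you deployed into.

```shell
# Wait for the NCache deployment to finish rolling out, then list its pods.
# Assumes kubectl is already configured for your EKS cluster.
check_ncache_rollout() {
  ns="${1:-default}"   # namespace; falls back to "default"
  # Blocks until all replicas are available or the timeout expires.
  kubectl rollout status deployment/ncache-deployment --namespace "$ns" --timeout=120s || return 1
  # -o wide adds the pod IP and node columns to the listing.
  kubectl get pods --namespace "$ns" -l app=ncache -o wide
}
```

With the manifest above, you would expect two pods in the listing, one per replica.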

Refer to NCache docs on Create NCache Resources in EKS.

Step 2: Create NCache Discovery Service

The previous step set up the server side of NCache. Once it has completed successfully, you need to create a discovery service that exposes your NCache resources to the client applications.

Outside a Kubernetes cluster, clients typically reach cache servers through static IP addresses. Inside the cluster, however, every pod is assigned a dynamic IP address at runtime, and clients do not know it in advance. As a result, client applications cannot locate the NCache servers on their own. This is why your NCache clients need a headless discovery service inside your EKS cluster.

This headless service resolves this issue by exposing the IP addresses of the NCache servers to the client applications. These clients use these IPs to create the required cache handles and to start performing cache operations.

To let all clients connect to the headless service with ease, create and deploy a Cache Discovery YAML file as provided:

```yaml
kind: Service
apiVersion: v1 # depends on underlying Kubernetes version
metadata:
  name: cacheserver
  labels:
    app: cacheserver
spec:
  clusterIP: None
  selector:
    app: ncache # same label as provided in the ncache deployment yaml
  ports:
  - name: management-tcp
    port: 8250
    targetPort: 8250
  - name: management-http
    port: 8251
    targetPort: 8251
  - name: client-port
    port: 9800
    targetPort: 9800
```

What makes this a headless service is the clusterIP key set to “None”. Kubernetes allocates no virtual IP for such a service and does no load balancing for it; instead, a DNS lookup of the service name returns the IPs of the matching pods directly. The service is reachable only from inside the EKS cluster. The selector app: ncache lets the service find every pod labeled ncache so that their IPs can be exposed to the clients.

A little insight into how things work: a client needs only one server IP address to connect, because the server it reaches shares the IP addresses of all the other servers in that cache cluster.

Once the file is ready to be deployed, run the following kubectl command.

```shell
kubectl create -f [dir]/cachediscovery.yaml
```
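To see what the headless service hands to clients, you can look at it from two angles: the endpoints Kubernetes has attached to it, and the DNS answer a pod inside the cluster receives. The sketch below assumes the service name `cacheserver` from the manifest above. The helper names, the throwaway pod name `dns-check`, and the `busybox:1.28` image are illustrative assumptions, not part of the NCache setup.

```shell
# Print the pod IPs that the headless service currently selects.
cacheserver_ips() {
  ns="${1:-default}"   # namespace; falls back to "default"
  kubectl get endpoints cacheserver --namespace "$ns" \
    -o jsonpath='{.subsets[*].addresses[*].ip}'
}

# Resolve the service name from inside the cluster using a temporary pod.
# For a headless service, the lookup returns one address per NCache pod.
resolve_cacheserver() {
  ns="${1:-default}"
  kubectl run dns-check --namespace "$ns" --rm -i --restart=Never \
    --image=busybox:1.28 -- nslookup cacheserver
}
```

If both helpers report the same set of addresses as your NCache pods, clients in that namespace should be able to reach the cache servers through the name `cacheserver`.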

For a detailed step-by-step deployment, follow our documentation on Create Discovery Service.

Step 3: Create Access for NCache Management

To allow NCache management access from outside the cluster, you need to set up an ingress controller. This controller abstracts the basic load-balancing strategies that you normally use in container deployments. A frequently used ingress controller is the NGINX Ingress Controller which, when deployed, creates all the services required to expose NCache outside the cluster.

To deploy the NGINX Ingress Controller in your EKS cluster, you need to deploy several files. Together, they contain everything your Kubernetes cluster needs to install a fully functioning NGINX load balancer.

Listed below are the required files with a brief explanation of what they do and why they are required:

- NGINX Mandatory file: This is the base file you need to run the NGINX Controller, which in your case acts as a load balancer inside your EKS cluster. You can find this file on GitHub.

- NGINX Service file: This file describes the Layer 7 load balancer that exposes the NGINX Ingress Controller outside the Kubernetes environment.

- NGINX Config file: This file contains all the parameters required to configure the Layer 7 load balancer.

Among the aforementioned files, NGINX Service YAML is the file that contains the ports information required to create a load balancer aware of NCache management access. The contents of this file are shown below:

```yaml
kind: Service
apiVersion: v1
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  annotations:
    ...
spec:
  type: LoadBalancer
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: http
  - name: https
    port: 443
    protocol: TCP
    targetPort: http
```

All of these are YAML files that are easy to deploy inside the EKS cluster. All you need to do is run the following command for each file from a command line configured for your AWS cluster.

```shell
kubectl create -f [dir]/<filename>.yaml
```

On execution, this command will create a load balancer that enables stickiness inside your cluster. For detailed information, refer to our documentation on Create Access for NCache Management.
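Once the load balancer has been provisioned, you will want its public address so you can reach NCache management from a browser. Here is a small sketch, assuming the service name and namespace `ingress-nginx` from the manifest above; the helper name `ingress_lb_hostname` is illustrative.

```shell
# Print the DNS name AWS assigned to the ingress load balancer.
# The value stays empty until the load balancer has finished provisioning.
ingress_lb_hostname() {
  kubectl get service ingress-nginx --namespace ingress-nginx \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}
```

If the helper prints nothing, give AWS a minute or two and try again.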

Step 4: Create Cache Cluster

Now that you have your collective deployments and services in place, you need to create an NCache cluster to let your clients connect to the cache servers.

When you deployed the NCache service, you also deployed NCache Web Manager, which comes fully integrated with the NCache management operations. You can use NCache Web Manager to create your clustered cache and experiment with it. Just follow the steps in the NCache docs on Create Clustered Cache and you are good to go! The one thing to watch carefully is that the server node IPs you add must match the IPs that Kubernetes assigned to the server pods. You can list these IPs by running the kubectl get pods command with the -o wide option.
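If you only want the names and IPs of the server pods, without the rest of the listing, a JSONPath query keeps the output short. This is a sketch that assumes the `app: ncache` label from the deployment in Step 1; the helper name `ncache_pod_ips` is illustrative.

```shell
# Print one "pod-name<TAB>pod-ip" line per NCache server pod.
ncache_pod_ips() {
  ns="${1:-default}"   # namespace; falls back to "default"
  kubectl get pods -l app=ncache --namespace "$ns" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.podIP}{"\n"}{end}'
}
```

These are the addresses to enter as server nodes in NCache Web Manager. Keep in mind that a pod gets a new IP if it is recreated.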

Step 5: Create Client Application Deployment

NCache client deployment, just like NCache resource deployment, specifies the number of running client containers, the private Docker Hub repository where the application image is placed, the ports, and so on. This information helps you create a fully functional client container.

To pull the client application from a private repository, you would otherwise have to provide login credentials every time. To avoid this recurring hassle, you can create a secrets.yaml file that holds your login information; you populate it once, and every client resource that needs it can use it. Refer to NCache docs on Create Client Deployment to get a detailed view of the steps and YAML files.
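As an alternative to writing the secret by hand, kubectl can generate a registry secret for you. The sketch below is not the secrets.yaml from the NCache docs: the secret name `ncache-client-secret` and the helper name `create_pull_secret` are hypothetical, and the registry URL assumes your image lives on Docker Hub.

```shell
# Create an image pull secret for a private Docker Hub repository.
# $1 = Docker Hub username, $2 = password or access token,
# $3 = namespace (optional, falls back to "default")
create_pull_secret() {
  kubectl create secret docker-registry ncache-client-secret \
    --namespace "${3:-default}" \
    --docker-server=https://index.docker.io/v1/ \
    --docker-username="$1" \
    --docker-password="$2"
}
```

For the pods to use it, the client deployment has to list the secret name under `imagePullSecrets` in its pod spec.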

Similarly, the actual client deployment is created in the form of a YAML file too. This file will contain every bit of information necessary to deploy your client application (or applications; your call) in your EKS cluster. The content of this file is shown below:

```yaml
kind: Deployment
# apps/v1beta1 was removed in Kubernetes 1.16; apps/v1 requires spec.selector
apiVersion: apps/v1
metadata:
  name: client
spec:
  replicas: 1
  selector:
    matchLabels:
      app: client          # must match the pod template labels below
  template:
    metadata:
      labels:
        app: client
    spec:
      nodeSelector:
        "beta.kubernetes.io/os": linux
      containers:
      - name: client
        image: # Your docker client image here
        ports:
        - name: app-port
          containerPort: 80
        # In case of NCache client installation, add the following remaining ports
        - name: management-tcp
          containerPort: 8250
        - name: management-http
          containerPort: 8251
        - name: client-port
          containerPort: 9800
```

When it comes to deploying the containers from the image, the process that follows goes like this:

1. The image is accessed from the repository.

2. The client secrets file is read from the NCache secret resource for authentication.

3. The image is pulled and deployed in a container with the client application running in it.

Here, since we have a deployment and not a service, you need to run the launch script inside the pod with kubectl exec to start the client application.

```shell
kubectl exec --namespace=ncache client-podname -- /app/<clientapplication>/run.sh democlusteredcache cacheserver
```
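Pod names carry a generated suffix, so you may prefer to look the name up instead of typing it. Here is a rough sketch that assumes the `app: client` label from the client deployment and the `ncache` namespace used in the command above. The helper name `run_client_app` is illustrative, and the script path is passed in as an argument because it depends on your image.

```shell
# Find the first client pod and start the client application inside it.
# $1 = path to run.sh inside the container, $2 = namespace (optional)
run_client_app() {
  ns="${2:-ncache}"
  pod=$(kubectl get pods --namespace "$ns" -l app=client \
    -o jsonpath='{.items[0].metadata.name}') || return 1
  [ -n "$pod" ] || { echo "no client pod found in namespace $ns" >&2; return 1; }
  # Same arguments as the command above: cache name, then discovery service name.
  kubectl exec --namespace "$ns" "$pod" -- "$1" democlusteredcache cacheserver
}
```

The second argument to the script, `cacheserver`, is the name of the headless discovery service from Step 2.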

Step 6: Monitor NCache Cluster

At this point, you have done everything you need to get the best out of NCache inside your fully functional, running Amazon EKS cluster. You get high availability, scalability, and reliability simply from deploying NCache in the cluster. But guess what? This isn’t all that NCache offers.

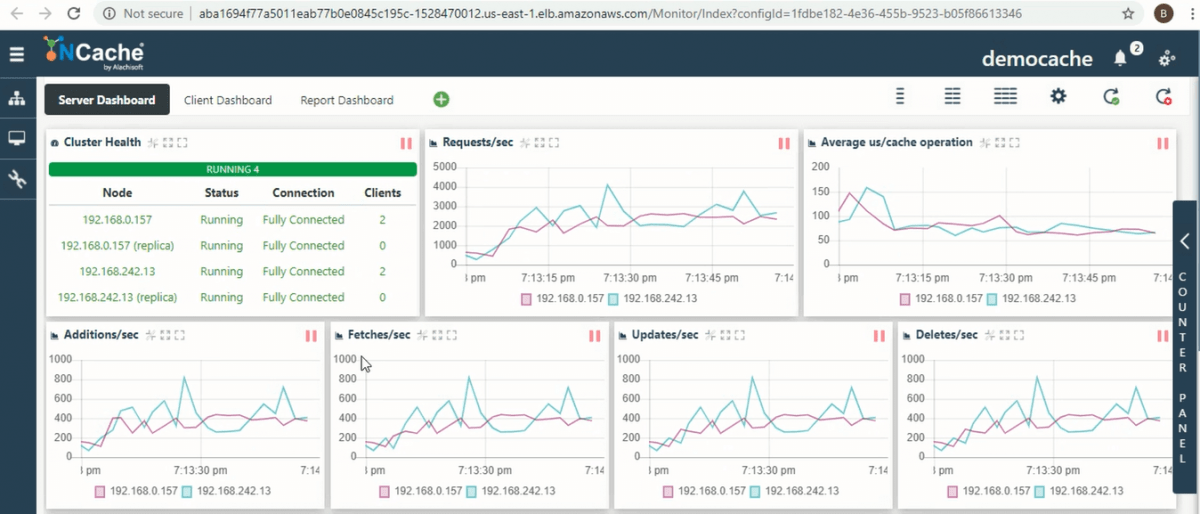

Hear me out. Inside the EKS cluster, in the middle of all the operations, the storing, and data transfer, NCache allows you to monitor cache activity through various tools. These tools help you get a better idea about your cluster’s health, performance, network glitches, and more.

Check out NCache Web Monitor for a graphical depiction of real-time performance and NCache Statistics for performance stats.

Figure 2: NCache Web Monitor EKS Caches
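If you want a quick look at the management interface without going through the ingress, port forwarding is one option. This sketch assumes the web-based tools are served on the `management-http` port (8251) of the `ncache-deployment` pods; that mapping is an assumption based on the port names in Step 1, and the helper name `forward_web_manager` is illustrative.

```shell
# Forward local port 8251 to the management-http port of an NCache pod.
# Runs in the foreground; press Ctrl+C to stop forwarding.
forward_web_manager() {
  kubectl port-forward deployment/ncache-deployment 8251:8251 --namespace "${1:-default}"
}
```

While the command is running, the management interface should be reachable at http://localhost:8251 on your machine.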

Step 7: Scaling NCache Cluster

To provide you with extreme scalability, NCache allows you to scale your cluster up or down at runtime to enhance the overall performance of your application. For instance, if you feel that the cache cluster is receiving requests way too frequently for the nodes to keep up with the increasing transactions, NCache allows you to add multiple servers to accommodate the load. To see how you can add or remove server nodes from the cache cluster at runtime all while staying inside the EKS cluster, check out our documentation on Adding Cache Server in EKS and Removing Cache Servers from EKS.
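On the Kubernetes side, changing the number of server pods is a one-line operation. The sketch below assumes the deployment name `ncache-deployment` from Step 1; the helper name `scale_ncache` is illustrative. Note that it only changes the pod count. Joining a new pod to the cache cluster, or removing a node from the cache before its pod goes away, is covered in the NCache documentation mentioned above.

```shell
# Set the number of NCache server pods.
# $1 = desired replica count, $2 = namespace (optional)
scale_ncache() {
  kubectl scale deployment ncache-deployment --replicas="$1" --namespace "${2:-default}"
}
```

For example, `scale_ncache 3` asks Kubernetes for one more pod than the two defined in the original manifest.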

Let’s Wind It All Up

This article walked you step by step through deploying NCache in an Amazon EKS cluster. The obvious question is: why do you need NCache inside an already complete container environment? Here is a quick summary.

- In-memory solution: NCache noticeably boosts your deployed application’s performance.

- Linear scalability: NCache lets you add servers when the load on your cache cluster increases.

- Extreme flexibility: NCache automatically rebalances data without any client intervention.

Put all this on top of the features AWS EKS already ships, and you have a top-notch environment to run your application in. So, what are you waiting for? Deploy NCache in your EKS cluster and see the results for yourself.