Businesses today are developing high-traffic ASP.NET web applications that serve tens of thousands of concurrent users. In a clustered deployment, where application servers run behind a load balancer, multiple clients have access to the same cached data. Under such parallel conditions, several users often try to access and modify the same data at once, which can trigger a race condition.

A race condition occurs when two or more users try to access and change the same shared data simultaneously and their operations end up interleaved in the wrong order. This situation leads to a high risk of losing data integrity and consistency. In-memory, scalable caching solutions like NCache provide distributed lock mechanisms that let enterprises keep shared data consistent.

Distributed Locking for Data Consistency

NCache provides a distributed locking mechanism for .NET that allows you to lock specific cache items during concurrent updates. To maintain data consistency in such cases, NCache acts as a distributed lock manager and provides you with two types of locking:

- Optimistic Locking (Item Versions)

- Pessimistic Locking (Exclusive Locking)

We will discuss them in detail later in the blog. For now, consider the following scenario to understand how data integrity is violated without a distributed locking service.

Two users access the same Bank Account simultaneously with a balance of 30,000. One user withdraws 15,000, whereas the other user deposits 5,000. If done correctly, the end balance should be 20,000. If a race condition occurs and is not handled, however, the balance ends up as either 15,000 or 35,000, depending on which update is written last.

Here is how this race condition occurs:

- Time t1: User 1 fetches Bank Account with balance = 30,000

- Time t2: User 2 fetches Bank Account with balance = 30,000

- Time t3: User 1 withdraws 15,000 and updates Bank Account balance = 15,000

- Time t4: User 2 deposits 5,000 and updates Bank Account balance = 35,000
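The timeline above can be replayed deterministically in a few lines. This is only a minimal sketch: the dictionary is a hypothetical stand-in for a shared cache without locking or versioning, and the key `Account:1` and the class name `LostUpdateDemo` are assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a shared cache with no locking or versioning.
public static class LostUpdateDemo
{
    public static decimal Run()
    {
        var cache = new Dictionary<string, decimal>();
        cache["Account:1"] = 30000m;

        // t1 and t2: both users read the same starting balance.
        decimal user1Copy = cache["Account:1"];
        decimal user2Copy = cache["Account:1"];

        // t3: User 1 withdraws 15,000 and writes back 15,000.
        cache["Account:1"] = user1Copy - 15000m;

        // t4: User 2 deposits 5,000 based on the stale copy and writes back 35,000,
        // silently discarding User 1's withdrawal.
        cache["Account:1"] = user2Copy + 5000m;

        return cache["Account:1"];
    }
}
```

`LostUpdateDemo.Run()` returns 35,000 rather than the correct 20,000, because the second write is computed from a copy that was already out of date.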

In both cases, code that does not coordinate concurrent access could be disastrous for the bank. So, in the subsequent sections, let’s see how NCache provides locking mechanisms to ensure that your application logic is thread-safe.

Optimistic Locking (Item Versions)

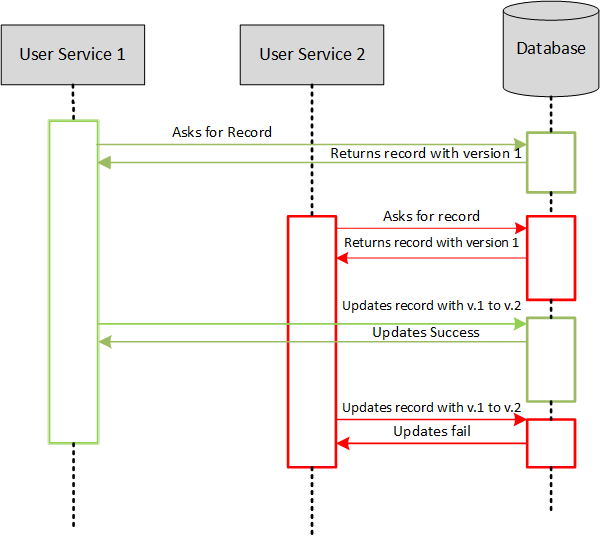

In optimistic locking, NCache uses cache item versioning. On the server side, every cached object has a version number associated with it which gets incremented on every cache item update. NCache then checks if you are working on the latest version. If not, it rejects your cache update. This way, only one user gets to update, and other user updates fail.

You can add an item in the cache using either the Add or the Insert method.

- The Add method adds a new item in the cache and saves the item version for the first time.

- The Insert method overwrites the value of an existing item and updates its item version.

Figure 1: Optimistic Lock Sequence Diagram

Take a look at the following code example.

```csharp
// Pre-condition: Cache is already connected
// An item is added in the cache with itemVersion

// Specify the key of the cacheItem
string key = "Product:1001";

// Initialize the cacheItemVersion
CacheItemVersion version = null;

// Get the cacheItem previously added in the cache with the version
CacheItem cacheItem = cache.GetCacheItem(key, ref version);

// If result is not null
if (cacheItem != null)
{
    // CacheItem is retrieved successfully with the version
    // Read the cached value as a Product
    Product prod = cacheItem.GetValue<Product>();
    prod.UnitsInStock++;

    // Create a new cacheItem with updated value
    var updateItem = new CacheItem(prod);

    // Attach the version that was read so the cache can compare it
    // with the current version of the cached item
    updateItem.Version = cacheItem.Version;

    // If it matches, the insert will be successful, otherwise it will fail
    CacheItemVersion newVersion = cache.Insert(key, updateItem);
}
else
{
    // Item could not be retrieved (key not found or outdated CacheItemVersion)
}
```

In the above example, two different applications use a single cache containing product data. A CacheItem is added to the cache, and both applications fetch it with its current version, let’s say version. Application1 modifies the productName and re-inserts the item, which updates its item version to newVersion. Application2 still holds the item with the old version. If Application2 now updates the item’s units in stock and re-inserts the item, the insertion will fail. Application2 will thus have to fetch the updated version before it can operate on that cacheItem.
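To make the version check concrete, the scenario can be replayed against a small in-memory model. This is a sketch under stated assumptions, not NCache code: `VersionedCache<T>`, `TryUpdate`, and `OptimisticDemo` are hypothetical names, versions are plain counters, and both applications change the units in stock to keep the example short.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory stand-in for a versioned cache; not the NCache API.
public sealed class VersionedCache<T>
{
    private sealed class Entry { public T Value; public long Version; }

    private readonly Dictionary<string, Entry> _items = new Dictionary<string, Entry>();
    private readonly object _sync = new object();

    // Adds a new item and assigns its first version.
    public long Add(string key, T value)
    {
        lock (_sync)
        {
            _items.Add(key, new Entry { Value = value, Version = 1 });
            return 1;
        }
    }

    // Returns the current value together with its version.
    public T Get(string key, out long version)
    {
        lock (_sync)
        {
            Entry entry = _items[key];
            version = entry.Version;
            return entry.Value;
        }
    }

    // Writes only if the caller's version is still current; bumps the version on success.
    public bool TryUpdate(string key, T value, long expectedVersion)
    {
        lock (_sync)
        {
            Entry entry = _items[key];
            if (entry.Version != expectedVersion) return false;
            entry.Value = value;
            entry.Version = expectedVersion + 1;
            return true;
        }
    }
}

public static class OptimisticDemo
{
    public static bool[] Run()
    {
        var cache = new VersionedCache<int>();
        cache.Add("Product:1001", 10); // units in stock

        // Both applications fetch the item with the same version.
        long v1, v2;
        int app1Units = cache.Get("Product:1001", out v1);
        int app2Units = cache.Get("Product:1001", out v2);

        bool app1Ok = cache.TryUpdate("Product:1001", app1Units + 1, v1); // succeeds
        bool app2Ok = cache.TryUpdate("Product:1001", app2Units + 1, v2); // rejected: stale version

        // Application2 fetches the updated version and retries.
        app2Units = cache.Get("Product:1001", out v2);
        bool retryOk = cache.TryUpdate("Product:1001", app2Units + 1, v2);

        return new[] { app1Ok, app2Ok, retryOk };
    }
}
```

In this model the rejected writer simply re-reads and retries, which is the usual pattern with optimistic locking: no one waits on a lock, and a conflicting write costs one extra round trip.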

However, if you wish to fetch an existing item only when a newer version of it is available in the cache, NCache provides a GetIfNewer method. You pass the version you currently hold as an argument of the method call. If the specified version is lower than the one in the cache, the method returns the newer item; otherwise, it returns null.

Let’s have a look at the code below.

```csharp
// Pre-condition: key and version refer to an item fetched earlier

// Get object from cache only if a newer version exists
Product result = cache.GetIfNewer<Product>(key, ref version);

// Check if updated item is available
if (result != null)
{
    // An item with newer version is available
    // Perform operations according to business logic
}
else
{
    // No new itemVersion is available
}
```

The above example assumes that an item has already been added to the cache with the key Product:1001 and that the application holds its item version. If a newer version of the item is available, the GetIfNewer method fetches the item using the cache item version.
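The comparison behind this call can be illustrated with a small in-memory model. It is a sketch only: `VersionedStore`, `Put`, and `GetIfNewerDemo` are hypothetical names, versions are plain counters, and advancing the caller's version on a successful fetch is an assumption of this sketch rather than documented NCache behavior.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory model of "get if newer"; not the NCache API.
public sealed class VersionedStore
{
    private sealed class Entry { public string Value; public long Version; }

    private readonly Dictionary<string, Entry> _items = new Dictionary<string, Entry>();

    // Adds or overwrites an item and returns its new version.
    public long Put(string key, string value)
    {
        Entry entry;
        if (!_items.TryGetValue(key, out entry))
        {
            entry = new Entry();
            _items[key] = entry;
        }
        entry.Value = value;
        entry.Version++;
        return entry.Version;
    }

    // Returns the cached value only when the cache holds a newer version than
    // the caller's; on success the caller's version is advanced (sketch assumption).
    public string GetIfNewer(string key, ref long version)
    {
        Entry entry;
        if (!_items.TryGetValue(key, out entry) || entry.Version <= version)
        {
            return null;
        }
        version = entry.Version;
        return entry.Value;
    }
}

public static class GetIfNewerDemo
{
    public static string[] Run()
    {
        var store = new VersionedStore();
        long held = store.Put("Product:1001", "Chai");

        // The caller already holds the latest version, so nothing is returned.
        string first = store.GetIfNewer("Product:1001", ref held);

        // Another writer updates the item; the caller's version is now stale.
        store.Put("Product:1001", "Chai Tea");
        string second = store.GetIfNewer("Product:1001", ref held);

        return new[] { first, second };
    }
}
```

The benefit of this shape is that a client holding an up-to-date copy gets back null instead of the full object, so it can keep using its local copy without transferring the data again.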

With optimistic locking, NCache ensures that every write to the distributed cache is consistent with the version each application holds. Please refer to our official NCache Documentation for an extensive code example.

Pessimistic Locking (Exclusive Locking)

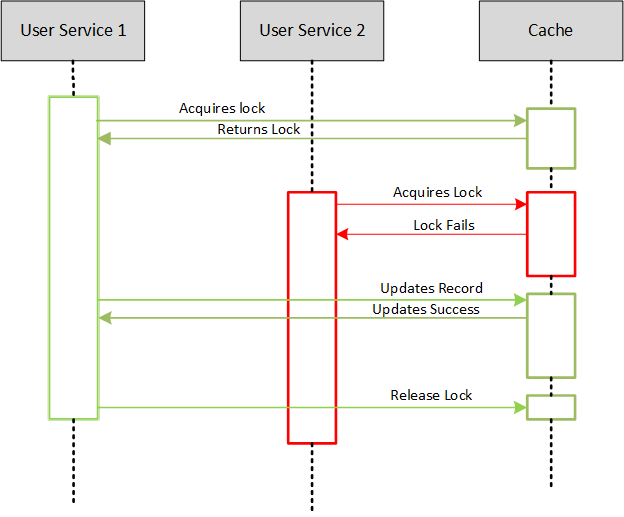

The other way to ensure data consistency is to acquire an exclusive lock on the cached data. This mechanism is called pessimistic locking. It locks the item using a lock handle, blocking all other users from performing any write operation on that cache item. A LockHandle is a handle, returned by the locking API, that is associated with every locked item in the cache.

Figure 2: Pessimistic Lock Sequence Diagram

The following example creates a LockHandle and then locks an item with the key Product:1001 for a time span of 10 seconds so that the item will be unlocked automatically after 10 seconds.

```csharp
// Pre-requisite: Cache is already connected
// Item is already added in the cache

// Specify the key of the item
string key = "Product:1001";

// Create a new LockHandle
LockHandle lockHandle = null;

// Specify time span of 10 seconds for which the item remains locked
TimeSpan lockSpan = TimeSpan.FromSeconds(10);

// Lock the item for a time span of 10 seconds
bool lockAcquired = cache.Lock(key, lockSpan, out lockHandle);

// Verify if the item is locked successfully
if (lockAcquired)
{
    // Item has been successfully locked
}
else
{
    // Key does not exist
    // Item is already locked with a different LockHandle
}
```

Upon successfully acquiring the lock, the application can safely perform operations, knowing that no other application can get or update this item as long as it holds the lock. To update the data, call the Insert API with the same lock handle. Doing so inserts the data in the cache and releases the lock, all in one call, making the cached data available to all other applications again.

Just remember that you should acquire all locks with a timeout. By default, if the timeout is not specified (TimeSpan.Zero), NCache will lock the item for an indefinite amount of time. The item can then remain locked forever if the application crashes without releasing the lock. As a workaround, you could forcefully release it, but this practice is ill-advised. Therefore, lock an item for the minimum TimeSpan to avoid deadlock or thread starvation.
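Why a timeout matters can be shown with a small in-memory lock table driven by a controllable clock. This is a single-threaded sketch under stated assumptions, not the NCache implementation: `LockTable`, `TryLock`, `Unlock`, and `LockDemo` are hypothetical names, and a `Guid` stands in for the lock handle.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory lock table; names and behavior are assumptions, not the NCache API.
// Not thread-safe: intended only to illustrate lock expiration.
public sealed class LockTable
{
    private sealed class Held { public Guid Handle; public DateTime ExpiresAt; }

    private readonly Dictionary<string, Held> _locks = new Dictionary<string, Held>();
    private readonly Func<DateTime> _now;

    // The clock is injected so that expiration can be demonstrated without sleeping.
    public LockTable(Func<DateTime> now) { _now = now; }

    // Grants the lock unless another unexpired lock exists for the key.
    public bool TryLock(string key, TimeSpan timeout, out Guid handle)
    {
        Held held;
        if (_locks.TryGetValue(key, out held) && held.ExpiresAt > _now())
        {
            handle = Guid.Empty;
            return false;
        }
        handle = Guid.NewGuid();
        _locks[key] = new Held { Handle = handle, ExpiresAt = _now() + timeout };
        return true;
    }

    // Releases the lock only for the handle that currently owns an unexpired lock.
    public bool Unlock(string key, Guid handle)
    {
        Held held;
        if (_locks.TryGetValue(key, out held) && held.Handle == handle && held.ExpiresAt > _now())
        {
            _locks.Remove(key);
            return true;
        }
        return false;
    }
}

public static class LockDemo
{
    public static bool[] Run()
    {
        DateTime clock = new DateTime(2024, 1, 1, 0, 0, 0, DateTimeKind.Utc);
        var table = new LockTable(() => clock);

        Guid owner, other;
        bool first = table.TryLock("Product:1001", TimeSpan.FromSeconds(10), out owner);
        bool contended = table.TryLock("Product:1001", TimeSpan.FromSeconds(10), out other);

        // The owner "crashes" without unlocking; 11 seconds later its lock has expired.
        clock = clock.AddSeconds(11);
        bool afterExpiry = table.TryLock("Product:1001", TimeSpan.FromSeconds(10), out other);

        // The old handle no longer owns the lock, so it cannot release the new one.
        bool staleUnlock = table.Unlock("Product:1001", owner);

        return new[] { first, contended, afterExpiry, staleUnlock };
    }
}
```

With a 10-second timeout, the second client is refused while the lock is live but can acquire it once the timeout passes, even though the first client never released it. Without an expiration, the second client would be refused indefinitely.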

Failover Support in Distributed Locking

Since NCache is an in-memory, distributed cache, it also provides complete failover support, so data is not lost and the cache stays highly available. In case of a server failure, your client applications keep working seamlessly. Similarly, your locks in the distributed system are replicated and maintained by the replicating nodes. If a node fails while one of your applications holds a lock, the lock is propagated to a new node automatically along with its specified properties, for example, Lock Expiration.

Conclusion

So, which locking mechanism is best for you, optimistic or pessimistic? Well, it depends on your use case and what you want to achieve. Optimistic Locking offers better performance than Pessimistic Locking, especially when your applications are read-intensive, whereas Pessimistic Locking is safer from a data consistency perspective. Therefore, choose your locking mechanism carefully. For more details, head on to the website. In case of any questions, contact us and let our experts help you out!