As data has been coined as “the new currency”, Apache Lucene has gained traction as a popular full-text search engine, widely used in applications to incorporate flexible text search over significant amounts of textual data. Lucene uses inverted indexing, drastically cutting down the time to find documents related to a particular term.

NCache Details Distributed Lucene NCache Docs

However, it is a stand-alone solution that does not scale as your data grows – you need to rebuild entire Lucene indexes to search data which is an expensive and slow task, becoming a performance bottleneck. While a few Java and REST-based solutions now exist to cater to scalable full-text search, there is still a lack of a scalable full-text searching solution that may naturally fit in the .NET stack.

Using Distributed Lucene with NCache for .NET

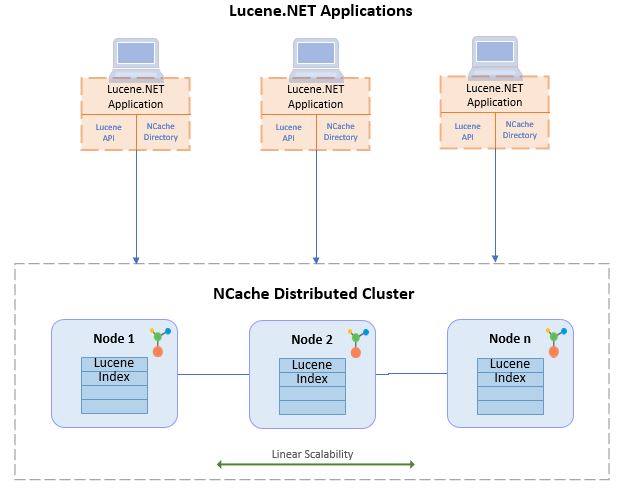

NCache, a powerful and popular .NET In-Memory Datastore, has implemented native Lucene.NET API over its distributed architecture.As it is the standard Lucene.NET API, no code change is required to use it in a scalable manner with NCache.

NCache also utilizes this Lucene.NET to create indexes in a dynamically scalable environment to allow distributed full-text searches. The results of these searches merge before being sent back to your application.

NCache Details Working of Distributed Lucene Lucene Components and Overview

This enhances the stand-alone Lucene into a fast and linearly scalable full-text searching solution.

NCache Details Distributed Lucene for Enterprise Search Distributed Lucene

Using Lucene in .NET Apps

Let us consider an E-commerce site that holds information for thousands of products, orders, and customer details. Hence, indexing all attributes especially non-textual fields (which are not used while searching), is not a wise approach, as it exhausts the cache memory.

For example, our document for a product looks like this:

|

1 2 3 4 5 6 7 8 9 |

{ “ID”: “ABC34”, “Name”: “Tupperware”, “Description”: “Microwaveable, dishwasher-friendly, reusable Tupperware in three sizes”, “RetailPrice”: 15.00, “Discount”: 3.00 } |

Now, we know that our clients perform full-text searches on the product description, which is one field of the document. So, what if we index only those fields that can be searched on and have a key that refers to its corresponding document in the persistence store, e.g. database or file system? This way, once you query for a specific type of product, let’s say “dishwasher-friendly Tupperware”, all products with a description that matches these terms will appear with their ProductID as a document key, and the whole document can then be fetched from the persisted index.

To use distributed Lucene in your existing applications, all you need is to specify NCacheDirectory when opening a directory. This requires the NCache cache name and the index name. The following code snippet opens a directory on a cache LuceneCache in NCache and an index named ProductIndex.

|

1 2 3 4 5 6 7 |

// Specify the cache name and index path for Lucene string cache = "LuceneCache"; string index = "ProductIndex"; // Create directory and open it on the cache Directory directory = NCacheDirectory.Open(cache, index); |

Lucene ships an extensive query language, which interprets a given string into a Lucene query. This can be done either on a term, multiple terms, wildcards, or even fuzzy words. To learn more about Lucene queries, read Lucene Query Docs.

The following code snippet creates an IndexReader on the directory, which is used by the IndexSearcher. The data is analyzed and tokenized on the basis of StandardAnalyzer. The first 50 hits from the result are returned to the application. Note that the analyzer must be the same as the one used during index creation.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

// The 'applyAllDeletes' is true so all enqueued deletes are applied on writer IndexReader reader = DirectoryReader.Open(indexWriter, true); // A searcher is opened to perform searching IndexSearcher indexSearcher = new IndexSearcher(reader); // Specify the searchTerm, fieldName and analyzer string searchTerm = "Beverages"; string fieldName = "Category"; // Note that the analyzer should be same as the one used during index creation Analyzer analyzer = new StandardAnalyzer(LuceneVersion.LUCENE_48); // Create a query parser to parse the query QueryParser parser = new QueryParser(LuceneVersion.LUCENE_48, fieldName, analyzer); Query query = parser.Parse(searchTerm); // Returns the top 50 hits from the result set ScoreDoc[] docsFound = indexSearcher.Search(query, 50).ScoreDocs; reader.Dispose(); |

Load Data to Build Distributed Index

With Lucene, you can build indexes and load data into them as needed. Indexes require an analyzer, that analyzes and tokenizes the data according to your need – it could be whitespace, non-letters, punctuation and so on. Once you create a writer for your Lucene index, you can create documents and add fields to it. This document is then indexed in NCache as a distributed index once you call Commit(). For more detail on Lucene analyzers, have a look at Lucene Analyzer Docs.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 |

// Specify the cache name and index path for Lucene string cache = "LuceneCache"; string indexPath = "ProductIndex"; // Create directory and open it on the cache Directory directory = NCacheDirectory.Open(cache, indexPath); // The same analyzer is used as for the reader Analyzer analyzer = new StandardAnalyzer(LuceneVersion.LUCENE_48); IndexWriterConfig config = new IndexWriterConfig(LuceneVersion.LUCENE_48, analyzer); // Create indexWriter on NCache directory IndexWriter indexWriter = new IndexWriter(directory, config); Product[] products = FetchProductsToIndex(); foreach (var product in products) { Document doc = new Document { new StoredField("id", product.ID), new TextField("name", product.Name, Field.Store.YES), new TextField("description", product.Description, Field.Store.YES), new StringField("category", product.Category, Field.Store.No), new StoredField("retail_price", product.RetailPrice), }; indexWriter.AddDocument(doc); } indexWriter.Commit(); </code><br /><br /><a href="/ncache/">NCache Details</a> <a href="/resources/docs/ncache/prog-guide/lucene-ncache.html#working-of-distributed-lucene">Working of Distributed Lucene</a> <a href="/blogs/geospatial-indexes-for-distributed-lucene-with-ncache/">GeoSpatial Indexes for Distributed Lucene</a> |

Why NCache for Distributed Lucene?

Using NCache for distributed Lucene provides you with the following benefits:

- Extremely Fast and Linearly Scalable: NCache is an in-memory distributed data store, so building distributed Lucene on top of it provides the same optimum performance for your full-text searches. Moreover, because of NCache’s distributed architecture, the Lucene index is partitioned across all the servers of the cluster. This makes it scalable as you can add more servers on the go as your data load increases, and Lucene indexes are automatically redistributed without any client intervention.

- Data Replication for Reliability and High Availability: With NCache’s Partition-of-Replica topology, the Lucene index is not only partitioned across all servers, but each partition is also replicated to another server of the cluster. Hence, if any server goes down, the replica of the partition serves all the queries for t/resources/docs/ncache/admin-guide/distributed-lucene-counters.htmlhat index, ensuring reliability. Similarly, if a server node goes down, NCache dynamically self-heals by readjusting the data within the remaining nodes, without any downtime or impact to your Lucene index, ensuring high availability.

Conclusion

To sum it up, full-text searching has now become fundamental in almost every business, owing to the powerful search engine Lucene. But as data grows, rebuilding indexes can cause more damage than gain and this where an in-memory, distributed .NET solution such as NCache steps in. All it requires is a one-line code change in your existing Lucene application, and voila, you have the best of both worlds as an in-memory, distributed full-text search mechanism.