Containerization has made developing, deploying, and managing applications easier, which is one reason cloud deployment is becoming increasingly popular. Microsoft Azure offers fast, user-friendly Kubernetes deployment through Azure Kubernetes Service (AKS).

To improve your application’s performance in an Azure Kubernetes environment, consider deploying NCache within the AKS cluster. NCache is an in-memory distributed cache, and running it inside the cluster keeps the cache close to your application, which boosts performance. Because it is distributed, you can add as many servers as needed to increase capacity and throughput, so the cache scales out along with your AKS workload.

NCache Deployment Architecture in Azure Kubernetes Service

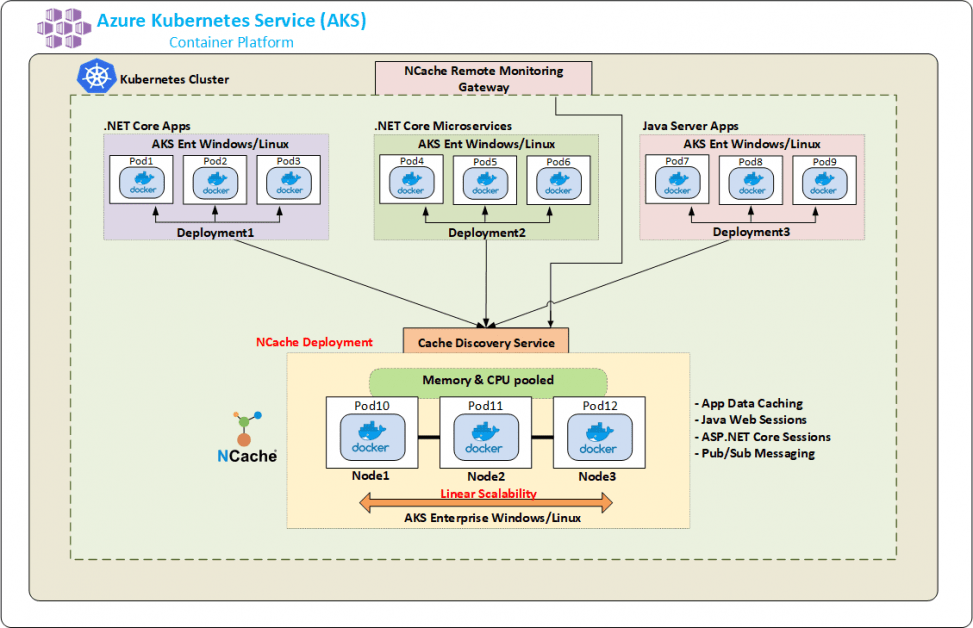

The overall layout of NCache’s deployment in Azure Kubernetes Service is as follows:

- Applications: These are connected to a headless Cache Discovery service. This service is responsible for allowing clients access to the cluster pods that are running the cache service.

- Gateway Service: Provides a load balancer to bring the traffic down to specific pods based on the provided client IP.

- Pod: Serves as the basic unit for building services, ensuring all containers are on the same host. It contains one or more containers that share resources like RAM, CPU, and network, though it’s best to have one container per pod.

The flow of requests and the structure of an AKS cluster with NCache deployed are shown in the diagram below.

Figure 1: NCache Deployment in Azure Kubernetes Service

To start using NCache’s built-in features in your Azure Kubernetes Service cluster, you need to deploy NCache and the services it requires. The steps below will help you get started deploying and using NCache in an AKS cluster.

Step 1: Create NCache Deployment

In Azure Kubernetes Service, deploying an application or service typically means writing a YAML file. This file contains all the information needed to create pods inside your AKS cluster. A YAML file that creates pods running the NCache service should look like this:

```yaml
kind: Deployment
apiVersion: apps/v1 # API group/version; must be supported by your cluster
metadata:
  name: ncache
  labels:
    app: ncache
spec:
  replicas: 2
  selector:          # required in apps/v1; must match the template labels
    matchLabels:
      app: ncache
  template:
    metadata:
      labels:
        app: ncache
    spec:
      nodeSelector:
        "kubernetes.io/os": linux
      containers:
      - name: ncache
        image: docker.io/alachisoft/ncache:enterprise-server-linux-5.0.2
        ports:
        - name: cache-mgmt-tcp # for tcp communication
          containerPort: 8250
        - name: cache-mgmt-http # for http communication
          containerPort: 8251
        ... # remaining necessary ports
```

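Setting “kind” to Deployment tells the cluster to create a Deployment, which manages a set of identical pods. Pay attention to “apiVersion”: it names the Kubernetes API group and version the object belongs to, and the supported values change between Kubernetes releases. For example, apps/v1beta1 was removed in Kubernetes 1.16; Deployments now use apps/v1, which also requires a “selector” that matches the pod template’s labels. Make sure the value you use is supported by the Kubernetes version of your AKS cluster.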

The number of “replicas” here indicates the number of pods for this deployment, which is set to 2 in this case, but you can change this value as per your requirement. Under the “containers” tag, you provide the path to the NCache Enterprise Server Docker image, which is available on Docker Hub.
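Because apps/v1 rejects a Deployment whose “selector” is missing or does not match the pod template’s labels, it can help to check a manifest before running kubectl create. The following is a minimal Python sketch, assuming the manifest has already been loaded into a dictionary (for example with a YAML parser). The function name check_deployment is hypothetical, and the sketch only handles equality-based matchLabels, not matchExpressions.

```python
def check_deployment(manifest: dict) -> list:
    """Return a list of problems found in a Deployment manifest (empty if none)."""
    problems = []
    if manifest.get("kind") != "Deployment":
        problems.append("kind must be Deployment")
    if manifest.get("apiVersion") != "apps/v1":
        problems.append("apiVersion should be apps/v1 on current Kubernetes releases")
    spec = manifest.get("spec", {})
    match_labels = spec.get("selector", {}).get("matchLabels", {})
    template_labels = spec.get("template", {}).get("metadata", {}).get("labels", {})
    if not match_labels:
        problems.append("spec.selector.matchLabels is missing (apps/v1 requires a selector)")
    # Every selector key/value pair must also appear in the pod template labels.
    for key, value in match_labels.items():
        if template_labels.get(key) != value:
            problems.append(f"selector {key}={value} does not match the template labels")
    return problems


# An apps/v1 version of the ncache Deployment, trimmed to the fields being checked.
ncache = {
    "kind": "Deployment",
    "apiVersion": "apps/v1",
    "metadata": {"name": "ncache", "labels": {"app": "ncache"}},
    "spec": {
        "replicas": 2,
        "selector": {"matchLabels": {"app": "ncache"}},
        "template": {"metadata": {"labels": {"app": "ncache"}}},
    },
}
print(check_deployment(ncache))  # []
```

An empty list means the selector and the pod labels line up; a manifest still using apps/v1beta1 without a selector would report both the API version and the missing selector.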

You also need to declare the ports NCache uses: for clients to interact with the NCache servers, each container port must be listed under “ports” in your YAML file. With the kind, API version, replicas, image, and ports in place, the manifest is complete. Run the following command in Azure Cloud Shell to create the Deployment, and NCache will be running in pods in your Azure Kubernetes Service cluster:

```shell
kubectl create -f [dir]/ncache.yaml
```

Step 2: Create NCache Discovery Service

Outside Kubernetes, connecting cache clients to cache servers is straightforward: clients need the IP addresses of the cache servers, and these addresses are static and known to every client in the system. Inside a Kubernetes cluster, however, each pod is assigned an IP address at runtime, and that address is not known to the client applications in advance. As a result, client applications have no fixed address to use when locating the NCache servers.

To solve this, Kubernetes lets you create a Service, which gives a set of pods a stable name that does not change when the pods are recreated. For NCache, you create a headless discovery service that lets your client applications reach the pods running the NCache service. Client applications connect using this service’s name, and the service resolves to the cache server pods, so each client connection request reaches one of the servers, all while staying inside the AKS cluster. The YAML file for the discovery service looks like this:

```yaml
kind: Service
apiVersion: v1 # API version of the Service object
metadata:
  name: cacheserver
  labels:
    app: cacheserver
spec:
  clusterIP: None # headless: no cluster IP is allocated
  selector:
    app: ncache # same label as provided in the ncache YAML file
  ports:
  - name: management-tcp
    port: 8250
    targetPort: 8250
  - name: client-port
    port: 9800
    targetPort: 9800
```

The “kind” must be Service, and “apiVersion” must be set to the Service API version supported by your cluster (v1). Setting “clusterIP” to None makes this a headless service: Kubernetes does not assign it a cluster IP, and a DNS lookup of the service name returns the IP addresses of the selected NCache pods instead. The remaining entries are the ports NCache clients need to communicate with the NCache servers.
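The discovery service finds the NCache pods only through its “selector”: a pod is selected when its labels contain every key/value pair listed there. The following minimal Python sketch models that equality-based matching, which is handy for checking that app: ncache in the service agrees with the labels in the ncache Deployment. The function names and pod names are hypothetical; real Deployment pods get generated names.

```python
def selects(selector: dict, pod_labels: dict) -> bool:
    """True if every selector key/value pair is present in the pod's labels."""
    if not selector:
        # A Service without a selector does not pick up pods automatically.
        return False
    return all(pod_labels.get(key) == value for key, value in selector.items())


def selected_pods(selector: dict, pods: dict) -> list:
    """Names of the pods (name -> labels) that a Service with this selector targets."""
    return sorted(name for name, labels in pods.items() if selects(selector, labels))


# Hypothetical pods: two NCache server pods and one client pod.
pods = {
    "ncache-pod-a": {"app": "ncache"},
    "ncache-pod-b": {"app": "ncache"},
    "client-pod-a": {"app": "client"},
}
print(selected_pods({"app": "ncache"}, pods))  # ['ncache-pod-a', 'ncache-pod-b']
```

Note that the service’s own metadata labels (app: cacheserver) play no part in this matching; only spec.selector decides which pods the service points to.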

Next, run the following command in Azure Cloud Shell to create the headless discovery service inside your Kubernetes cluster:

```shell
kubectl create -f [dir]/discoveryservice.yaml
```

Step 3: Create NCache Gateway Service

Everything inside an AKS cluster is confined to that cluster. To use NCache from your local machine, you need a way to perform NCache management operations inside the cluster. This is why you create a Gateway service, which lets you access, manage, and monitor NCache from outside the Azure Kubernetes Service cluster.

Like the discovery service, the gateway is a Service defined in a YAML file containing all the necessary fields and values. The following YAML file creates a gateway service for NCache management:

```yaml
kind: Service
apiVersion: v1 # API version of the Service object
metadata:
  name: gateway
spec:
  selector:
    app: ncache # same label as provided in the ncache YAML file
  type: LoadBalancer
  sessionAffinity: ClientIP
  ports:
  - name: management-http
    port: 8251
    targetPort: 8251
```

Setting “kind” to Service tells your AKS cluster that this file defines a service that exposes existing pods rather than deploying anything new. The file also lists the ports the gateway service needs to function, here 8251 for HTTP management traffic. Setting “type” to LoadBalancer makes this gateway an external load balancer that distributes client requests across the NCache servers. Set “sessionAffinity” to ClientIP so that requests from the same client are redirected to the same server each time.
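To make the effect of sessionAffinity: ClientIP concrete, here is a simplified Python model of the behavior. It is not kube-proxy’s actual implementation. The first request from a client IP is assigned to some backend pod, and later requests from that IP go to the same pod until the entry has been idle longer than the affinity timeout. Kubernetes’ default for that timeout (sessionAffinityConfig.clientIP.timeoutSeconds) is 10800 seconds. The class name, pod names, and the random choice of a new backend are assumptions of this sketch.

```python
import random


class ClientIPAffinity:
    """Simplified model of a Service with sessionAffinity: ClientIP."""

    def __init__(self, backends, timeout_seconds=10800, seed=None):
        self.backends = list(backends)
        self.timeout_seconds = timeout_seconds
        self._rng = random.Random(seed)
        self._sticky = {}  # client IP -> (backend, time of last request)

    def route(self, client_ip: str, now: float) -> str:
        entry = self._sticky.get(client_ip)
        if entry is not None and now - entry[1] <= self.timeout_seconds:
            backend = entry[0]  # affinity still valid: reuse the same pod
        else:
            backend = self._rng.choice(self.backends)  # new or expired: pick any pod
        self._sticky[client_ip] = (backend, now)  # refresh the idle timer
        return backend


gateway = ClientIPAffinity(["ncache-pod-a", "ncache-pod-b"], seed=1)
first = gateway.route("203.0.113.10", now=0)
# Requests from the same IP within the timeout keep reaching the same pod.
print([gateway.route("203.0.113.10", now=t) == first for t in (60, 600, 3600)])
```

The stickiness is what keeps a management session on one NCache server; without it, consecutive requests from the same browser could land on different pods.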

That is all the information you need to create a gateway service for your NCache deployment. Run the following command from Azure Cloud Shell, and AKS will create and start the service:

```shell
kubectl create -f [dir]/gatewayservice.yaml
```

Step 4: Create a Cache Cluster

At this point, you have working NCache servers, a gateway service, and a discovery service for NCache clients. Next, create a cache cluster from these servers inside your Kubernetes cluster.

You can carry out this step using the NCache Management Center, which is included in the NCache deployment. To learn more, refer to the NCache docs on Create Clustered Cache. The only difference from a non-Kubernetes setup is the server IPs: they must be the IPs that the Kubernetes cluster has assigned to your cache pods. You can list them, in the IP column, by running kubectl get pods -o wide in Azure Cloud Shell.
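If you would rather collect the pod IPs in a script than read them from a table, kubectl can print the pod list as JSON, for example with kubectl get pods -l app=ncache -o json. The Python sketch below extracts the name and IP of each running pod from that output using the standard Pod fields metadata.name, status.phase, and status.podIP. The function name and the sample data are hypothetical.

```python
import json


def running_pod_ips(pod_list_json: str) -> dict:
    """Map pod name -> pod IP for running pods in `kubectl get pods -o json` output."""
    pod_list = json.loads(pod_list_json)
    ips = {}
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        # Pods that are still pending may not have an IP assigned yet.
        if status.get("phase") == "Running" and status.get("podIP"):
            ips[pod["metadata"]["name"]] = status["podIP"]
    return ips


# Hypothetical, heavily trimmed output of: kubectl get pods -l app=ncache -o json
sample = json.dumps({
    "kind": "List",
    "items": [
        {"metadata": {"name": "ncache-pod-a"},
         "status": {"phase": "Running", "podIP": "10.244.0.12"}},
        {"metadata": {"name": "ncache-pod-b"},
         "status": {"phase": "Running", "podIP": "10.244.1.7"}},
        {"metadata": {"name": "ncache-pod-c"}, "status": {"phase": "Pending"}},
    ],
})
print(running_pod_ips(sample))  # {'ncache-pod-a': '10.244.0.12', 'ncache-pod-b': '10.244.1.7'}
```

In Cloud Shell, the real command output could be passed to this function (for example by reading it from standard input) to get the list of IPs to enter when creating the cache cluster.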

Step 5: Create Application Deployments

To deploy and run client applications (whether .NET or Java) in your cluster, you also need a YAML file. Your client deployment YAML file should look something like this:

```yaml
kind: Deployment
apiVersion: apps/v1 # API group/version; must be supported by your cluster
metadata:
  name: client
spec:
  replicas: 1
  selector:          # required in apps/v1; must match the template labels
    matchLabels:
      app: client
  template:
    metadata:
      labels:
        app: client
    spec:
      imagePullSecrets:
      - name: client-private
      nodeSelector:
        "kubernetes.io/os": linux
      containers:
      - name: client
        image: # Your docker client image here
        ports:
        - name: port1
          containerPort: 8250
        - name: port2
          containerPort: 9800
```

The OS in “nodeSelector” can also be windows, since Kubernetes supports both Linux and Windows nodes. You can also deploy multiple client applications in the same cluster, depending on your requirements. For each client application, create a similar YAML file so that every application runs in its own pod.
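Since every client application needs its own, nearly identical Deployment file, you might generate the manifests instead of copying YAML by hand. The following is a minimal Python sketch, assuming apps/v1 and the kubernetes.io/os node label; the function name, application names, and image names are hypothetical. The ports and the client-private pull secret are taken from the client YAML above.

```python
import json


def client_deployment(name: str, image: str, node_os: str = "linux",
                      replicas: int = 1, pull_secret: str = "client-private") -> dict:
    """Build an apps/v1 Deployment manifest for one NCache client application."""
    if node_os not in ("linux", "windows"):
        raise ValueError("node_os must be 'linux' or 'windows'")
    labels = {"app": name}
    pod_spec = {
        "nodeSelector": {"kubernetes.io/os": node_os},
        "containers": [{
            "name": name,
            "image": image,
            "ports": [
                {"name": "port1", "containerPort": 8250},
                {"name": "port2", "containerPort": 9800},
            ],
        }],
    }
    if pull_secret:
        pod_spec["imagePullSecrets"] = [{"name": pull_secret}]
    return {
        "kind": "Deployment",
        "apiVersion": "apps/v1",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},  # must match the template labels
            "template": {"metadata": {"labels": dict(labels)}, "spec": pod_spec},
        },
    }


# Hypothetical applications: one client on Linux nodes, one on Windows nodes.
for app, image, node_os in [("orders-client", "myregistry/orders:1.0", "linux"),
                            ("billing-client", "myregistry/billing:1.0", "windows")]:
    print(json.dumps(client_deployment(app, image, node_os), indent=2))
```

kubectl accepts JSON manifests as well as YAML, so each printed manifest can be saved to a file (for example orders-client.json) and created with kubectl create -f in the same way.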

Run the following command in Azure Cloud Shell to create and start your client application pod:

```shell
kubectl create -f [dir]/client.yaml
```

The NCache client manages connections within the cluster on its own. It only needs the name of the service it should talk to; from there, it automatically discovers all the underlying NCache cluster nodes for a given cache inside your Azure Kubernetes cluster.

The main advantage of using NCache this way in AKS is that you do not need to provide the IP addresses of the cache pods for client connections. The headless discovery service you created earlier provides the IP addresses of the cache pods to your client application at runtime.
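Under the hood, a headless service works through cluster DNS: a lookup of the service name returns one address per ready pod selected by the service, rather than a single virtual IP. The Python sketch below performs such a lookup with the standard library. It only illustrates what the discovery service provides and is not how the NCache client is implemented. It assumes the code runs in a pod in the same namespace as the discovery service (otherwise the full name, such as cacheserver.<namespace>.svc.cluster.local, is needed) and uses port 9800 from the service definition; the function name is hypothetical.

```python
import socket


def resolve_service(host: str, port: int = 9800) -> list:
    """Return the sorted, unique IPv4 addresses that DNS reports for `host`."""
    infos = socket.getaddrinfo(host, port, family=socket.AF_INET, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr is (ip, port).
    return sorted({info[4][0] for info in infos})
```

Run inside a client pod, resolve_service("cacheserver") should return one IP per ready NCache pod; outside the cluster the name does not resolve and socket.gaierror is raised.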

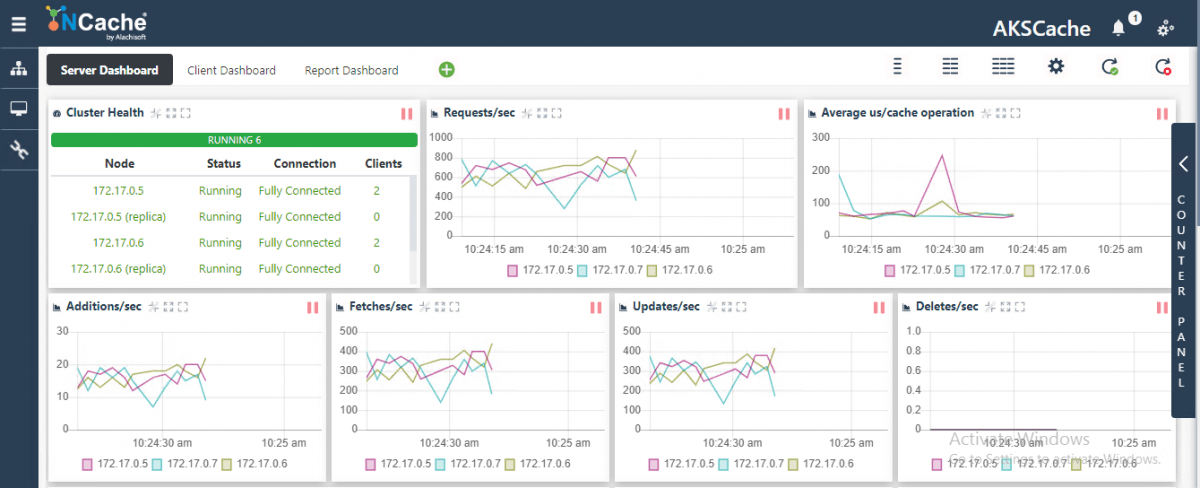

Step 6: Monitor NCache Cluster

Now that your services, servers, and application are up and running, you need a way to monitor cache activity inside the cluster. NCache comes with several tools for monitoring your cache cluster, which give you a better picture of its health, performance, network glitches, and connectivity.

NCache provides an NCache Monitor that graphically shows the real-time performance of your cache.

Similarly, you have a Cache Statistics option that provides a more detailed analysis of your cache activity.

Step 7: Scaling NCache Cluster

Because NCache is a distributed cache, you can add and remove server nodes at runtime to improve its overall performance. If monitoring shows that the incoming requests/sec are more than your current servers can handle, you can add one or more cache nodes to your deployment.

There are multiple ways to scale the NCache cluster in your AKS deployment: the NCache Management Center, the NCache PowerShell tool, or the NCache YAML file. To learn how each method adds and removes nodes, see our documentation on Adding Cache Servers in an AKS Cluster and Removing Cache Servers from an AKS Cluster.
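For the YAML-file route, the Kubernetes side of scaling comes down to changing the Deployment’s replica count, either by editing “replicas” and re-applying the file or with kubectl scale. The small Python sketch below builds the kubectl scale command line for the ncache Deployment from Step 1. The helper name and the at-least-one-pod rule are assumptions of this sketch, and it only changes the number of NCache pods; adding those pods to the cache cluster follows the NCache documentation mentioned above.

```python
import shlex


def scale_command(replicas: int, deployment: str = "ncache") -> list:
    """Build the kubectl command that sets the replica count of a Deployment."""
    if replicas < 1:
        raise ValueError("keep at least one NCache server pod")
    return ["kubectl", "scale", f"deployment/{deployment}", f"--replicas={replicas}"]


cmd = scale_command(3)
print(shlex.join(cmd))  # kubectl scale deployment/ncache --replicas=3
# To run it for real (requires kubectl and access to the cluster):
# import subprocess; subprocess.run(cmd, check=True)
```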

Conclusion

NCache is a highly scalable in-memory distributed cache that can significantly boost application performance, which makes it a strong fit for applications running in Azure Kubernetes Service (AKS). AKS is a fully managed container orchestrator that automates tasks like upgrades and patching, and by deploying NCache within your AKS cluster, you can achieve the scalability and high availability your applications need. So why wait? Download NCache today and unlock these powerful advantages!