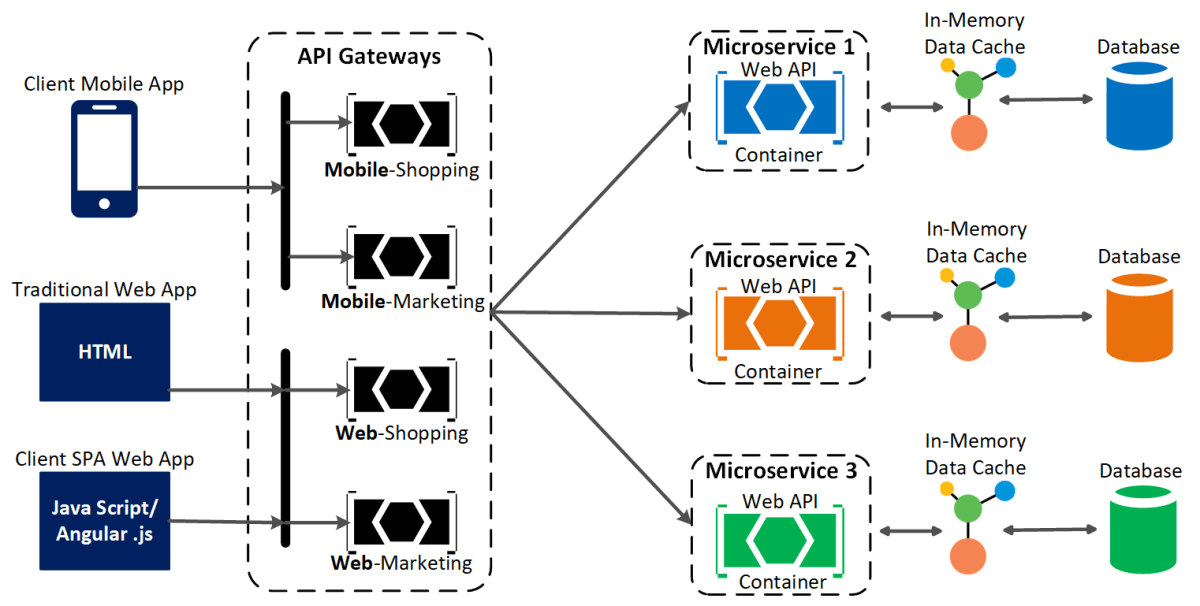

Modern applications require exceptional performance, scalability, and responsiveness. While microservices architecture facilitates modular development, it also presents challenges such as increased latency and database load. One effective method to address these challenges is the implementation of distributed caching.

Distributed caching reduces the number of database queries, improves API response times, and supports high availability. This document explores how caching can boost microservices performance, with a particular focus on NCache as a caching solution.

Figure: NCache as Data Cache in Microservices

Understanding Microservices Architecture

Microservices architecture divides an application into distinct services that interact via APIs, which improves scalability and maintainability. However, it also introduces challenges such as added network latency and keeping data consistent across services.

- Core Principles of Microservices

- Independence: Each service functions independently and scales separately.

- Resilience: The use of circuit breakers and retries enhances fault tolerance.

- Stateless Communication: Services do not retain session state information.

- API-Driven: Communication between services is facilitated through REST or gRPC.

- Key Components of Microservices

- API Gateway: Oversees authentication and traffic routing.

- Service Discovery: Enables microservices to locate one another dynamically.

- Containerization: Docker provides uniform deployment environments.

- Observability: Enhanced logging and monitoring offer better system insights.

- Security: Protocols like OAuth 2.0 and JWT ensure secure communication.

While microservices can add complexity, implementing distributed caching mitigates performance issues by reducing database dependencies and enhancing response times.

Where to Use Distributed Cache?

Distributed caching optimizes microservices by reducing database load and improving response times. Common use cases include:

- API Gateway Caching: Caches frequent API responses to decrease backend traffic.

- Database Query Caching: Reduces database demand by retaining frequently retrieved query results.

- Session Management: Effectively maintains user sessions in stateless applications.

- Event-Driven Caching: Ensures cached data remains updated with event-driven changes.

- Pub/Sub Messaging: Facilitates real-time interactions among services.
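
To make the database query caching and event-driven caching items above more concrete, here is a minimal cache-aside sketch. It does not use NCache: `CacheAsideStore` is a hypothetical in-memory stand-in for a distributed cache client, and the `loadFromDatabase` delegate is an assumed placeholder for a real query.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical in-memory stand-in for a distributed cache client (not the NCache API).
public sealed class CacheAsideStore<TValue>
{
    private readonly ConcurrentDictionary<string, TValue> _entries =
        new ConcurrentDictionary<string, TValue>();

    // Returns the cached value on a hit. On a miss, calls the loader
    // (for example, a database query) and stores the result for later reads.
    public TValue GetOrLoad(string key, Func<string, TValue> loadFromDatabase)
    {
        if (_entries.TryGetValue(key, out var cached))
        {
            return cached; // cache hit: no database call
        }

        var loaded = loadFromDatabase(key); // cache miss: go to the source of truth
        _entries[key] = loaded;
        return loaded;
    }

    // Intended to be called from an event handler when the underlying record changes,
    // so the next read reloads fresh data.
    public void Invalidate(string key)
    {
        _entries.TryRemove(key, out _);
    }
}
```

With this shape, repeated reads of the same key reach the database only once until a change event invalidates the entry. Two callers that miss at the same moment may both run the loader; that is usually acceptable for read-mostly data.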

Why Choose NCache?

NCache is a high-performance, open-source distributed caching solution designed for .NET applications. It improves microservices performance by reducing database dependency, shortening response times, and supporting scale-out.

- Optimized Performance: Caches frequently accessed data, leading to better response times and fewer database queries.

- Reduced Database Load: Offloads read-heavy operations, enabling databases to focus on critical transactions.

- Scalability & High Availability: Facilitates clustering and replication for uninterrupted operations.

- Seamless .NET Integration: Built for .NET applications, so it fits naturally into existing .NET code.

- Advanced Caching: Offers Read-through, Write-through, Write-behind, Cache Loader & Refresher, as well as support for SQL/LINQ queries, Groups, and Tags for flexible and efficient caching strategies.

- Pub/Sub Messaging: Lets services publish and subscribe to messages through the cache for inter-service communication.

Integrating NCache in .NET Microservices

- Installing NCache

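Install the required NCache SDK package from NuGet, for example with `dotnet add package Alachisoft.NCache.SDK` (the exact package name depends on the NCache edition you use).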

- Configuring NCache in Program.cs

After installation, set up NCache in your Program.cs file to enable cache connectivity and specify caching policies.
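
One possible arrangement, shown here only as a sketch, is to register a shared cache handle with dependency injection. It assumes the NCache SDK package is referenced, that an ASP.NET Core web project with implicit usings is used, and that a cache named `demoCache` (a placeholder) is already configured and running. It covers connectivity only, not caching policies.

```csharp
// Program.cs (ASP.NET Core minimal hosting model)
using Alachisoft.NCache.Client;

var builder = WebApplication.CreateBuilder(args);

// "demoCache" is a placeholder name; replace it with the cache configured
// in your own NCache cluster.
// Registering the handle as a singleton lets all services share one connection.
builder.Services.AddSingleton<ICache>(_ => CacheManager.GetCache("demoCache"));

var app = builder.Build();
app.Run();
```

Services can then take an `ICache` constructor parameter instead of opening their own connections.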

- Implementing Caching with Expiration in a Microservice

Implement caching with expiration policies so that outdated data is removed automatically, which keeps cached results fresh and memory use bounded.
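
The sketch below illustrates what absolute expiration means, without depending on NCache. `ExpiringCache` is a hypothetical in-memory class, and the injectable clock is an assumption added so the behavior can be checked without waiting.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory cache with absolute expiration (not the NCache API).
public sealed class ExpiringCache<TValue>
{
    private readonly Dictionary<string, (TValue Value, DateTime ExpiresAtUtc)> _entries =
        new Dictionary<string, (TValue Value, DateTime ExpiresAtUtc)>();
    private readonly Func<DateTime> _utcNow;
    private readonly object _gate = new object();

    public ExpiringCache() : this(() => DateTime.UtcNow) { }

    // The clock is injectable so expiry can be exercised deterministically.
    public ExpiringCache(Func<DateTime> utcNow)
    {
        _utcNow = utcNow;
    }

    // Stores the value and records the instant after which it is stale.
    public void Insert(string key, TValue value, TimeSpan timeToLive)
    {
        lock (_gate)
        {
            _entries[key] = (value, _utcNow() + timeToLive);
        }
    }

    // Returns true only if the entry exists and has not expired.
    // Expired entries are removed lazily on access.
    public bool TryGet(string key, out TValue value)
    {
        lock (_gate)
        {
            if (_entries.TryGetValue(key, out var entry))
            {
                if (_utcNow() < entry.ExpiresAtUtc)
                {
                    value = entry.Value;
                    return true;
                }
                _entries.Remove(key);
            }
            value = default;
            return false;
        }
    }
}
```

In the NCache client, the comparable step is, as far as I know, attaching an `Expiration` (absolute or sliding) to a `CacheItem` before inserting it. Check the NCache documentation for the exact types and options in your version.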

- Using SQL Query on the Cache

NCache can execute SQL-like queries directly against the cache, so services can retrieve data by attribute while minimizing database access.
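
The following is a sketch of such a query, based on the pattern in the NCache 5.x client documentation as I recall it; verify the calls against your NCache version. The `Product` class, its `Store.Models` namespace, and the `UnitPrice` attribute are hypothetical. The sketch also assumes a query index on `UnitPrice` has been defined for the cache.

```csharp
using System.Collections.Generic;
using Alachisoft.NCache.Client;

namespace Store.Models
{
    // Hypothetical cached type; assumed to be indexed on UnitPrice in the cache configuration.
    public class Product
    {
        public string Name { get; set; }
        public decimal UnitPrice { get; set; }
    }

    public static class ProductQueries
    {
        // Returns cached products priced above minPrice without touching the database.
        public static List<Product> FindAbovePrice(ICache cache, decimal minPrice)
        {
            // The type is referenced by its fully qualified name; "?" marks a parameter.
            var command = new QueryCommand(
                "SELECT $Value$ FROM Store.Models.Product WHERE UnitPrice > ?");
            command.Parameters.Add("UnitPrice", minPrice);

            var results = new List<Product>();
            var reader = cache.SearchService.ExecuteReader(command);
            if (reader.FieldCount > 0)
            {
                while (reader.Read())
                {
                    // Assumption: with $Value$, index 1 holds the cached object.
                    results.Add(reader.GetValue<Product>(1));
                }
            }
            return results;
        }
    }
}
```

A query like this only sees data that is currently in the cache, so it works best when the relevant data set is fully loaded.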

Deploying Microservices with NCache

- Containerizing with Docker

Containerization packages a microservice together with all of its dependencies, which keeps its behavior consistent across environments. The service image is defined in a Dockerfile.

- Building and Running the Docker Container

After defining the Dockerfile, build the image with `docker build` and start the container with `docker run`.

Conclusion

NCache improves microservices by reducing database load, shortening response times, and supporting scalability. Integrating it brings benefits such as faster API responses, more efficient database usage, and scalable, robust services. Implementing distributed caching with NCache helps your .NET microservices operate efficiently and stay prepared for high-demand scenarios.