A distributed cache is essential for any application that demands high performance under extreme transaction loads. In-memory distributed caching outperforms traditional databases and scales linearly: you can add more servers to the cache cluster as load grows, something databases struggle to do. However, one challenge remains. A distributed cache usually runs on remote servers, so your application must make a network trip every time it fetches data. This is slower than accessing data locally, especially from within the application's own process. This is where a client cache comes in handy.

In NCache, a client cache maintains a connection to the distributed cache cluster and receives event notifications when any of the data it holds is updated. The cluster keeps track of which items each client cache (also called a near cache) holds, so it sends a notification only to the client caches that actually hold the updated item. This avoids unnecessary broadcasts and keeps notification traffic low.
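To make the targeted-notification idea concrete, here is a minimal Python sketch. It is not NCache's API or implementation: the `Cluster` and `ClientCache` classes and their methods are hypothetical. The assumption is that the cluster records, per key, which client caches fetched that key, and on an update notifies only those holders.

```python
from collections import defaultdict


class ClientCache:
    """Hypothetical local cache that drops entries when the cluster notifies it."""

    def __init__(self, name):
        self.name = name
        self.items = {}
        self.notifications = []  # keys this client was notified about

    def on_item_updated(self, key):
        # Invalidate the local copy; the next read goes back to the cluster.
        self.items.pop(key, None)
        self.notifications.append(key)


class Cluster:
    """Hypothetical cluster that remembers which client caches hold each key."""

    def __init__(self):
        self.store = {}
        self.holders = defaultdict(set)  # key -> set of ClientCache objects

    def get(self, key, client):
        value = self.store[key]
        client.items[key] = value
        self.holders[key].add(client)  # record where this key now lives
        return value

    def put(self, key, value):
        self.store[key] = value
        # Notify only the client caches holding this key, not every client.
        # After invalidation they no longer hold it, so the entry is removed.
        for client in self.holders.pop(key, set()):
            client.on_item_updated(key)


cluster = Cluster()
cluster.store.update({"a": 1, "b": 2})
c1, c2 = ClientCache("web1"), ClientCache("web2")
cluster.get("a", c1)
cluster.get("b", c2)
cluster.put("a", 10)
print(c1.notifications, c2.notifications)  # ['a'] []
```

Only `web1` hears about the change to `"a"`; `web2`, which never cached that key, receives nothing.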

How Does a Client Cache Work?

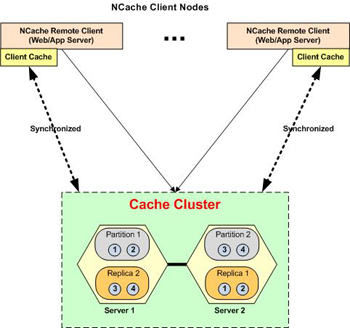

A client cache is essentially a local cache on your web/application server, but it is aware of the distributed cache and its connection. Additionally, it can either be in-process (within your application) or out-of-process (outside your application). This allows it to deliver much faster read performance than even a distributed cache while ensuring that its data is always synchronized with the distributed cache.

Figure: Diagram depicting the working of the client cache
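The read path of an in-process client cache can be sketched as follows. This is a hypothetical illustration, not NCache code: `DistributedCacheStub` stands in for the remote cache and counts simulated network trips, and `InProcessClientCache` is a plain dictionary living in the application's memory that falls back to the remote cache on a miss.

```python
class DistributedCacheStub:
    """Hypothetical stand-in for the remote cache; counts simulated network trips."""

    def __init__(self, data):
        self.data = dict(data)
        self.network_trips = 0

    def get(self, key):
        self.network_trips += 1  # every call here models a network round trip
        return self.data.get(key)


class InProcessClientCache:
    """Hypothetical in-process client cache: a dict in the application's memory."""

    def __init__(self, remote):
        self.remote = remote
        self.local = {}

    def get(self, key):
        if key in self.local:          # local hit: no network trip
            return self.local[key]
        value = self.remote.get(key)   # miss: fetch once from the distributed cache
        if value is not None:
            self.local[key] = value
        return value

    def invalidate(self, key):
        # Called when the distributed cache reports that `key` changed.
        self.local.pop(key, None)


remote = DistributedCacheStub({"product:1": "Laptop"})
cache = InProcessClientCache(remote)
for _ in range(3):
    cache.get("product:1")
print(remote.network_trips)  # 1
```

Three reads cost a single network trip; the other two are served from local memory, which is where the speedup over the distributed cache alone comes from.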

The distributed cache notifies the client cache asynchronously, only after the update has succeeded in the distributed cache cluster. Technically, this means there is a small window (measured in milliseconds) during which some data in the client cache may be outdated compared to the distributed cache. In most cases, this is perfectly acceptable. Some applications, however, require every read to return the latest data.
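The staleness window can be reproduced with a small simulation. In this hypothetical Python sketch (not NCache's implementation), the cluster completes a write immediately but only queues the change notification; `deliver_notifications` models the asynchronous delivery that follows a few milliseconds later. For brevity it notifies every registered client rather than tracking holders per key.

```python
from collections import deque


class Cluster:
    """Hypothetical cluster that queues change notifications instead of sending them inline."""

    def __init__(self):
        self.store = {}
        self.pending = deque()  # notifications not yet delivered
        self.clients = []

    def put(self, key, value):
        self.store[key] = value   # the write completes first...
        self.pending.append(key)  # ...and the notification is queued for later

    def deliver_notifications(self):
        # Models asynchronous delivery (per-key holder tracking omitted for brevity).
        while self.pending:
            key = self.pending.popleft()
            for client in self.clients:
                client.local.pop(key, None)


class ClientCache:
    """Hypothetical client cache that reads through to the cluster on a miss."""

    def __init__(self, cluster):
        self.cluster = cluster
        self.local = {}
        cluster.clients.append(self)

    def get(self, key):
        if key not in self.local:
            self.local[key] = self.cluster.store[key]
        return self.local[key]


cluster = Cluster()
cluster.store["price"] = 100
client = ClientCache(cluster)
client.get("price")             # cached locally: 100
cluster.put("price", 120)       # the cluster now holds 120
print(client.get("price"))      # 100: stale, notification not delivered yet
cluster.deliver_notifications()
print(client.get("price"))      # 120: refreshed after invalidation
```

The read between `put` and `deliver_notifications` is exactly the window described above.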

For applications that need this guarantee, NCache provides a pessimistic synchronization model. In this model, every time the application fetches an item from the client cache, the client cache first checks whether the distributed cache has a newer version of that item. If it does, the client cache fetches the newer version from the distributed cache. This check still costs a trip to the distributed cache, but it is cheaper than always retrieving the entire item, because the full item is transferred only when it has actually changed.
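Here is a minimal sketch of a pessimistic read, assuming each item carries a version number that the client cache can compare cheaply. The classes, method names, and the integer-version scheme are hypothetical illustrations, not NCache's API or its internal mechanism. The stub counts lightweight version checks separately from full item transfers.

```python
class DistributedCacheStub:
    """Hypothetical remote cache storing (version, value) pairs."""

    def __init__(self):
        self.items = {}          # key -> (version, value)
        self.full_fetches = 0    # trips that transfer the whole item
        self.version_checks = 0  # cheap trips that transfer only a version number

    def put(self, key, value):
        version = self.items.get(key, (0, None))[0] + 1
        self.items[key] = (version, value)

    def get_version(self, key):
        self.version_checks += 1
        return self.items[key][0]

    def get_item(self, key):
        self.full_fetches += 1
        return self.items[key]


class PessimisticClientCache:
    """Hypothetical client cache that verifies the version before every read."""

    def __init__(self, remote):
        self.remote = remote
        self.local = {}  # key -> (version, value)

    def get(self, key):
        cached = self.local.get(key)
        if cached is not None and cached[0] == self.remote.get_version(key):
            return cached[1]                         # local copy is current
        self.local[key] = self.remote.get_item(key)  # missing or outdated: refetch
        return self.local[key][1]


remote = DistributedCacheStub()
remote.put("order:7", "pending")
cache = PessimisticClientCache(remote)
cache.get("order:7")             # first read: full fetch
cache.get("order:7")             # version matches: served locally
remote.put("order:7", "shipped")
print(cache.get("order:7"))      # shipped
print(remote.full_fetches, remote.version_checks)  # 2 2
```

Every read pays for a version check, but the full item crosses the network only on the first read and after it changes.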

When to Use It?

The main question, then, is when to use a client cache and when not to. The answer is straightforward: a client cache is a good fit when your applications perform many more reads than writes, especially when the same items are read repeatedly.

If your applications perform many updates (roughly as many as reads, or more), a client cache may not be ideal, because every update must be applied to both the distributed cache and the client cache, which slows down writes.

Conclusion

NCache lets you boost your application's performance by using a client cache alongside a distributed cache. To experience the benefits firsthand, download NCache Enterprise and try it out today.